Advanced analytics platform

Technical Description

I. Introduction

The Advanced Analytics Service is a state-of-the-art data processing and storage solution designed to handle intricate analytics pipelines, manage file storage across multiple cloud platforms, and provide a robust API for client applications. This project exemplifies advanced Python programming skills, deep expertise with FastAPI, proficiency in both SQL and NoSQL databases, and a comprehensive understanding of cloud technologies and data engineering principles.

II. Architecture Overview

Built on FastAPI, a modern and high-performance web framework for Python, the service leverages asynchronous programming to maximize efficiency and scalability. Integration with both SQL databases (using SQLAlchemy ORM) and MongoDB showcases versatility in handling diverse database technologies.

Asynchronous processing is a cornerstone of the architecture, extending from API handlers to database operations and interactions with external services. This design ensures the system can efficiently manage a high volume of concurrent requests without compromising performance.

A key feature is file storage integration with both AWS and Google Cloud Platform (GCP). This multi-cloud approach demonstrates the ability to design cloud-agnostic solutions: because the service is not tied to a single provider, it gains flexibility and resilience.

III. Key Components

A. API Endpoints and Controllers

The service exposes a comprehensive set of well-structured API endpoints for report generation, file storage management, and utility functions. Controllers like `report_controller.py` and `storage_controller.py` adhere to RESTful API design principles and maintain a clean separation of concerns, enhancing maintainability and scalability.

B. Data Pipeline Processing

At the core of the analytics functionality lies an advanced pipeline processing system (`pipelines/` directory). This system showcases the ability to design and implement complex data processing workflows capable of handling various data transformations, anomaly detection, and feature extraction, crucial for delivering insightful analytics.

C. Storage Management

The storage module (`storage_controller.py`, `storage_service.py`) provides a unified interface for file operations across different cloud providers. This abstraction layer simplifies storage interactions and demonstrates proficiency in designing scalable, provider-agnostic cloud solutions.

D. Authentication and Authorization

The service uses OpenID Connect authentication to secure interactions between client applications and the API. This reflects a strong understanding of modern security protocols and best practices in API security.

E. Logging and Monitoring

Integration of custom logging middleware and monitoring tools like Prometheus underscores a commitment to observability and reliability. This facilitates proactive system monitoring and rapid troubleshooting, essential for maintaining high service uptime.
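As an illustration of what a custom logging middleware can look like, here is a minimal sketch written as a plain ASGI middleware, which is the interface FastAPI applications run on. The class name `RequestLoggingMiddleware`, the logger name, and `demo_app` are hypothetical and not taken from the project; the actual middleware may log different fields.

```python
import asyncio
import logging
import time

logger = logging.getLogger("request_log")  # hypothetical logger name


class RequestLoggingMiddleware:
    """Plain ASGI middleware that logs method, path, status, and duration."""

    def __init__(self, app):
        self.app = app

    async def __call__(self, scope, receive, send):
        # Only HTTP requests are logged; lifespan and websocket traffic
        # pass straight through.
        if scope["type"] != "http":
            await self.app(scope, receive, send)
            return

        start = time.perf_counter()
        status = {"code": None}

        async def send_wrapper(message):
            # The status code is only visible on the response-start message.
            if message["type"] == "http.response.start":
                status["code"] = message["status"]
            await send(message)

        try:
            await self.app(scope, receive, send_wrapper)
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info(
                "%s %s -> %s (%.1f ms)",
                scope["method"], scope["path"], status["code"], elapsed_ms,
            )


async def demo_app(scope, receive, send):
    """Tiny ASGI app used only to exercise the middleware."""
    await send({"type": "http.response.start", "status": 200, "headers": []})
    await send({"type": "http.response.body", "body": b"ok"})
```

With FastAPI, a class of this shape can be registered with `app.add_middleware(RequestLoggingMiddleware)`. Metrics export to Prometheus would sit alongside it and is not shown here.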

IV. Technical Challenges and Solutions

A. Scalability and Performance Optimization

- Asynchronous Programming: Utilized FastAPI and `asyncio` to handle I/O-bound operations efficiently, ensuring the service remains responsive under high load.

- Database Connection Pooling: Implemented pooling mechanisms to optimize database connections, reducing latency and resource consumption.

- Caching Strategies: Employed caching to store frequently accessed data, minimizing database load and accelerating response times.
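The combination of concurrent I/O and caching described above can be sketched with the standard library alone. This is an assumption-laden illustration, not the project's code: `TTLCache`, `fetch_report`, `get_report`, and `get_many` are hypothetical names, the `asyncio.sleep` call stands in for a real database query, and a production cache would more likely be an external store.

```python
import asyncio
import time


class TTLCache:
    """Very small in-process cache whose entries expire after a fixed time."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        self._store: dict[str, tuple[float, dict]] = {}

    def get(self, key: str):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return value

    def set(self, key: str, value: dict) -> None:
        self._store[key] = (time.monotonic() + self.ttl, value)


CALLS = {"count": 0}  # counts simulated database hits
cache = TTLCache(ttl_seconds=60.0)


async def fetch_report(report_id: str) -> dict:
    """Stand-in for an I/O-bound database query."""
    CALLS["count"] += 1
    await asyncio.sleep(0.01)
    return {"id": report_id, "status": "ready"}


async def get_report(report_id: str) -> dict:
    """Return the cached report if present, otherwise fetch and cache it."""
    cached = cache.get(report_id)
    if cached is not None:
        return cached
    report = await fetch_report(report_id)
    cache.set(report_id, report)
    return report


async def get_many(report_ids: list[str]) -> list[dict]:
    """Fetch several reports concurrently instead of one after another."""
    return await asyncio.gather(*(get_report(r) for r in report_ids))
```

Calling `asyncio.run(get_many(["a", "b", "c"]))` twice hits the simulated database three times in total: the second call is served from the cache. Note that this sketch does not deduplicate concurrent requests for the same uncached key.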

B. Data Integrity and Error Handling

- Custom Exception Handling: Developed centralized error handling with custom exceptions to provide clear and consistent error responses.

- Comprehensive Logging: Established detailed logging for all operations, enhancing traceability and simplifying debugging.

- Transaction Management: Ensured data consistency through meticulous transaction management in database operations.
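The following sketch shows one way centralized error mapping and transactional writes can fit together. It is illustrative only: the exception names, `to_error_response`, `save_report`, and the table layout are hypothetical, and `sqlite3` is used as a stand-in so the example runs without the SQLAlchemy setup the service is described as using.

```python
import sqlite3


class ServiceError(Exception):
    """Base class for errors the API reports to clients (hypothetical)."""

    status_code = 500
    code = "internal_error"

    def __init__(self, message: str):
        super().__init__(message)
        self.message = message


class ReportNotFound(ServiceError):
    status_code = 404
    code = "report_not_found"


class InvalidReportData(ServiceError):
    status_code = 422
    code = "invalid_report_data"


def to_error_response(exc: Exception) -> tuple[int, dict]:
    """Single place that maps any exception to a (status, body) pair."""
    if isinstance(exc, ServiceError):
        return exc.status_code, {"error": exc.code, "detail": exc.message}
    # Unknown errors are reported generically so internals do not leak.
    return 500, {"error": "internal_error", "detail": "Unexpected server error"}


def make_database() -> sqlite3.Connection:
    """Create an in-memory database with the two demo tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE reports (id TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE report_values (report_id TEXT, value REAL)")
    return conn


def save_report(conn: sqlite3.Connection, report_id: str, values: list) -> None:
    """Insert a report and its values atomically."""
    with conn:  # commits on success, rolls back if the block raises
        conn.execute("INSERT INTO reports (id) VALUES (?)", (report_id,))
        for value in values:
            if value is None:
                raise InvalidReportData(
                    f"report {report_id} contains a missing value"
                )
            conn.execute(
                "INSERT INTO report_values (report_id, value) VALUES (?, ?)",
                (report_id, value),
            )
```

If `save_report` raises partway through, the report row inserted at the start of the transaction is rolled back as well, so no half-written report remains. In a FastAPI application, a function like `to_error_response` would typically be called from a registered exception handler.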

C. Cloud Provider Abstraction

- Unified Storage Interface: Designed an abstraction layer that allows seamless interaction with different cloud storage services without altering the core application logic.

- Provider-Specific Adapters: Implemented adapters like `GoogleCloudStorage` and `AWSCloudStorage` to handle the unique features and APIs of each cloud provider.
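A unified storage interface with provider adapters might look roughly like the sketch below. The names `CloudStorage`, `FakeS3Storage`, `FakeGCSStorage`, `get_storage`, and `archive_report` are hypothetical. The two adapters are in-memory stand-ins that only mimic the URI scheme of each provider; the project's `AWSCloudStorage` and `GoogleCloudStorage` adapters would wrap the real provider SDKs instead.

```python
import asyncio
from abc import ABC, abstractmethod


class CloudStorage(ABC):
    """Interface the application codes against, whatever the provider."""

    @abstractmethod
    async def upload(self, key: str, data: bytes) -> str:
        """Store data under key and return its URI."""

    @abstractmethod
    async def download(self, key: str) -> bytes:
        """Return the bytes stored under key."""


class InMemoryStorage(CloudStorage):
    """Shared in-memory behaviour for the fake adapters below."""

    scheme = "mem"

    def __init__(self, bucket: str):
        self.bucket = bucket
        self._objects: dict[str, bytes] = {}

    async def upload(self, key: str, data: bytes) -> str:
        self._objects[key] = data
        return f"{self.scheme}://{self.bucket}/{key}"

    async def download(self, key: str) -> bytes:
        try:
            return self._objects[key]
        except KeyError:
            raise FileNotFoundError(key) from None


class FakeS3Storage(InMemoryStorage):
    scheme = "s3"  # mimics S3-style URIs, no AWS calls are made


class FakeGCSStorage(InMemoryStorage):
    scheme = "gs"  # mimics GCS-style URIs, no GCP calls are made


ADAPTERS = {"aws": FakeS3Storage, "gcp": FakeGCSStorage}


def get_storage(provider: str, bucket: str) -> CloudStorage:
    """Pick an adapter by provider name, e.g. from configuration."""
    try:
        return ADAPTERS[provider](bucket)
    except KeyError:
        raise ValueError(f"unsupported storage provider: {provider}") from None


async def archive_report(storage: CloudStorage, report_id: str, body: bytes) -> str:
    """Core logic depends only on the interface, never on a provider."""
    return await storage.upload(f"reports/{report_id}.json", body)
```

Because `archive_report` accepts any `CloudStorage`, switching providers means changing the argument to `get_storage`, not the calling code.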

D. Asynchronous Processing of Complex Analytics

- Flexible Pipeline System: Engineered a modular pipeline system capable of asynchronous processing, accommodating various analytical tasks.

- Advanced Data Analysis Techniques: Integrated algorithms for anomaly detection and time series analysis, showcasing sophisticated data engineering capabilities.
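To make the idea of a modular asynchronous pipeline concrete, here is a small sketch in which each step is an async function that takes and returns a context dictionary. The step names, the `Pipeline` class, and the z-score rule are assumptions chosen for illustration; the source does not say which anomaly detection algorithms the project uses.

```python
import asyncio
import statistics
from typing import Awaitable, Callable

Step = Callable[[dict], Awaitable[dict]]


async def fill_missing(ctx: dict) -> dict:
    """Transformation: replace missing values with the mean of the rest."""
    present = [v for v in ctx["values"] if v is not None]
    mean = statistics.fmean(present)
    filled = [mean if v is None else v for v in ctx["values"]]
    return {**ctx, "values": filled}


async def extract_features(ctx: dict) -> dict:
    """Feature extraction: summary statistics of the series."""
    values = ctx["values"]
    features = {
        "mean": statistics.fmean(values),
        "stdev": statistics.pstdev(values),
        "min": min(values),
        "max": max(values),
    }
    return {**ctx, "features": features}


async def flag_anomalies(ctx: dict) -> dict:
    """Anomaly detection: indices whose z-score exceeds the threshold."""
    mean = ctx["features"]["mean"]
    stdev = ctx["features"]["stdev"]
    threshold = ctx.get("z_threshold", 2.0)
    anomalies = [
        i for i, v in enumerate(ctx["values"])
        if stdev > 0 and abs(v - mean) / stdev > threshold
    ]
    return {**ctx, "anomalies": anomalies}


class Pipeline:
    """Runs steps in order; each step awaits before the next one starts."""

    def __init__(self, steps: list[Step]):
        self.steps = list(steps)

    async def run(self, ctx: dict) -> dict:
        for step in self.steps:
            ctx = await step(ctx)
        return ctx


pipeline = Pipeline([fill_missing, extract_features, flag_anomalies])
result = asyncio.run(
    pipeline.run({"values": [10.0, 11.0, None, 10.0, 9.0, 50.0, 10.0, 11.0]})
)
print(result["anomalies"])  # [5], the index of the value 50.0
```

Since steps share one signature, new analyses can be added or reordered by editing the list passed to `Pipeline`. Steps within one pipeline run sequentially, while separate pipeline runs can be scheduled concurrently on the event loop.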

V. DevOps and CI/CD

The service uses CI/CD pipelines built on GitHub Actions to build Docker images and push them to Amazon ECR. This demonstrates expertise in containerization and automated deployment processes. Deployment to Kubernetes clusters with Argo CD reflects proficiency in GitOps principles and scalable infrastructure management.

VI. Code Quality and Best Practices

A. Type Hinting and Pydantic Models

- Employed extensive type hinting and Pydantic models for data validation and serialization, enhancing code clarity and reducing runtime errors.

B. Error Handling and Custom Exceptions

- Implemented a robust error-handling framework with custom exceptions, ensuring that errors are caught and managed gracefully, improving user experience.

C. Configuration Management

- Utilized environment variables and configuration files to manage different deployment environments securely and efficiently, following best practices in configuration management.
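Environment-driven configuration can be sketched as follows. The variable names (`APP_ENV`, `DATABASE_URL`, `STORAGE_PROVIDER`, `DEBUG`) and the `Settings` fields are hypothetical, and the sketch uses a plain dataclass rather than whatever settings mechanism the project actually relies on.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Immutable settings object passed to the rest of the application."""

    environment: str
    database_url: str
    storage_provider: str
    debug: bool


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build Settings from environment variables, failing fast on bad input."""
    try:
        database_url = env["DATABASE_URL"]  # required, no safe default
    except KeyError:
        raise RuntimeError("DATABASE_URL must be set") from None

    provider = env.get("STORAGE_PROVIDER", "aws").lower()
    if provider not in {"aws", "gcp"}:
        raise ValueError(f"unsupported STORAGE_PROVIDER: {provider}")

    return Settings(
        environment=env.get("APP_ENV", "development"),
        database_url=database_url,
        storage_provider=provider,
        debug=env.get("DEBUG", "false").lower() in {"1", "true", "yes"},
    )
```

Accepting the environment as a parameter keeps the loader easy to test: `load_settings({"DATABASE_URL": "sqlite://", "STORAGE_PROVIDER": "GCP"})` returns settings with `storage_provider == "gcp"` without touching the real process environment.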

D. Unit Testing

- Developed comprehensive unit tests, emphasizing a test-driven development approach to ensure code reliability and facilitate continuous integration.
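As a small example of unit testing asynchronous code, the sketch below tests a hypothetical `normalize` helper with the standard library's `unittest.IsolatedAsyncioTestCase`. Neither the helper nor the test names come from the project, and the project may well use a different test runner.

```python
import unittest


async def normalize(values: list[float]) -> list[float]:
    """Min-max scale values into [0, 1]; constant input maps to zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


class NormalizeTests(unittest.IsolatedAsyncioTestCase):
    async def test_scales_to_unit_interval(self):
        self.assertEqual(await normalize([2.0, 4.0, 6.0]), [0.0, 0.5, 1.0])

    async def test_constant_input_maps_to_zeros(self):
        self.assertEqual(await normalize([3.0, 3.0]), [0.0, 0.0])
```

Saved as a module, these tests run with `python -m unittest`.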

VII. Conclusion

The Advanced Analytics Service is a testament to high-level technical proficiency across several domains:

- Python Expertise: Advanced programming skills, including asynchronous programming patterns and extensive use of type hinting.

- API Development: Mastery of FastAPI for building high-performance, scalable APIs.

- Database Proficiency: Skilled in integrating and managing both SQL and NoSQL databases, ensuring efficient and reliable data storage solutions.

- Data Engineering: Ability to design and implement complex data pipelines and analytics processes.

- Cloud Computing: In-depth knowledge of AWS and GCP services, with the ability to create cloud-agnostic solutions.

- DevOps Skills: Experience with Docker, CI/CD pipelines, and Kubernetes deployments, demonstrating an understanding of modern software delivery practices.

- Best Practices: Commitment to code quality, maintainability, and reliability through proper error handling, testing, and adherence to design principles.

The architecture is designed for scalability and extensibility, making it well-suited to handle increasing data volumes and evolving analytical needs. This project not only highlights technical capabilities but also showcases the ability to deliver robust, high-performance solutions in complex environments.

By combining advanced technical skills with practical experience in building scalable, efficient, and secure applications, this project serves as a compelling demonstration of readiness for senior roles in Python development, SQL database management, FastAPI application development, and data engineering.

Posted Sep 23, 2024

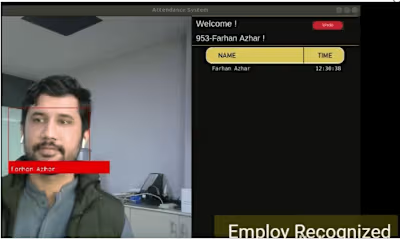

The Advanced Analytics platform is a custom, configuration-based, state-of-the-art data processing platform.
