Development of a custom ETL solution

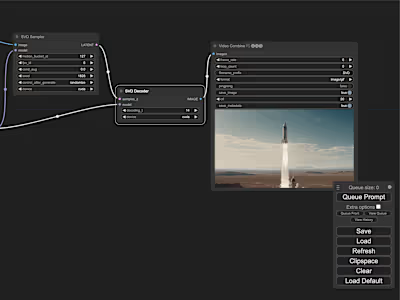

Development and deployment of a full-stack ETL and scheduling application that allows adding and processing any API endpoint with ease.

It extracts data periodically on a cron schedule and loads it into a data lake or warehouse of your choice.

Currently in use by clients who wanted to integrate data from all their operational platforms in a single place, removing data silos and allowing a more comprehensive analysis across different platforms.

At its core is a parser written in Python that turns a single JSON specification into a sequence of API calls. It handles endpoints that return complex data structures or require variables to be resolved dynamically at runtime, functionality that was missing from more UI-driven solutions like MS Data Factory, while remaining scalable and reusable.
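To give a feel for the idea, here is a minimal sketch of a JSON specification being turned into a sequence of API calls, with a variable resolved at runtime. It is not the production code: the spec keys (`variables`, `steps`, `url`, `save`, `foreach`, `emit`), the helper names `dig` and `run_spec`, and the `api.example.com` URLs are all hypothetical and chosen for illustration. The HTTP call is injected as a plain function so the example runs without a network.

```python
import json
from string import Template
from typing import Any, Callable

# Hypothetical spec format (an assumption for this sketch, not the real schema).
SPEC = json.loads("""
{
  "variables": {"base": "https://api.example.com"},
  "steps": [
    {"url": "${base}/accounts", "save": {"account_ids": "data.*.id"}},
    {"url": "${base}/accounts/${item}/orders", "foreach": "account_ids", "emit": "orders"}
  ]
}
""")


def dig(payload: Any, path: list[str]) -> Any:
    """Follow a list of keys into nested data; '*' maps over every list item."""
    if not path:
        return payload
    head, rest = path[0], path[1:]
    if head == "*":
        return [dig(item, rest) for item in payload]
    return dig(payload[head], rest)


def run_spec(spec: dict, fetch: Callable[[str], Any]) -> list:
    """Run each step in order, sharing variables between steps."""
    variables = dict(spec.get("variables", {}))
    rows: list = []
    for step in spec["steps"]:
        # A "foreach" step runs once per value of a variable saved earlier.
        loop_var = step.get("foreach")
        values = variables[loop_var] if loop_var else [None]
        for value in values:
            scope = {**variables, "item": value}
            url = Template(step["url"]).substitute(scope)
            payload = fetch(url)
            # "save" stores parts of the response for later steps to use.
            for name, path in step.get("save", {}).items():
                variables[name] = dig(payload, path.split("."))
            # "emit" collects records destined for the lake or warehouse.
            if "emit" in step:
                rows.extend(dig(payload, step["emit"].split(".")))
    return rows


# Stand-in for real HTTP responses, keyed by URL.
FAKE_API = {
    "https://api.example.com/accounts": {"data": [{"id": 1}, {"id": 2}]},
    "https://api.example.com/accounts/1/orders": {"orders": [{"order": "a"}]},
    "https://api.example.com/accounts/2/orders": {"orders": [{"order": "b"}, {"order": "c"}]},
}

rows = run_spec(SPEC, FAKE_API.__getitem__)
print(rows)
```

In this sketch the second step cannot be written as a static list of URLs, because the account IDs only exist once the first call has returned. Swapping `FAKE_API.__getitem__` for a function that performs a real HTTP request would leave the spec unchanged.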

Deployed on a virtual machine using Docker, with a private multi-user front end in Next.js to manage, log, test and monitor all scheduled jobs, and a backend written in Python with PostgreSQL.


Posted Mar 21, 2024

Successfully led the development and deployment of a custom ETL solution that can handle complex data structures and extract data from any API.