Augmented Reality Agent: Proxemics

The Spark 🎇

I had always been fascinated by the subtle, unspoken language of personal space: how we instinctively adjust our proximity to others, stepping closer in familiar company or backing away when a stranger gets too near. We do it without thinking, yet it shapes every social interaction.

Then, I wondered: what if Augmented Reality (AR) agents could understand and adapt to these same unwritten rules? Could virtual beings respect personal space just like humans do? That was the beginning of my journey into AR Agent Proxemics.

Scroll to the end to see the video demo.

The Conflict

Most AR experiences feel artificial because the digital agents don’t behave in a socially intuitive way. Imagine a virtual assistant appearing uncomfortably close, or an AR guide standing awkwardly far away: either one immediately breaks immersion.

Another major challenge was grounding AR agents in physical space. Augmented Reality objects often float or shift unexpectedly due to inconsistent spatial mapping. Without proper anchoring, an AR agent wouldn’t feel like part of the real world.

To make this work, I needed to solve two critical challenges:

How can AR agents interact with users in a natural way, respecting human personal space?

How can they stay properly positioned and grounded in real-world environments without floating or feeling disconnected?

The Journey

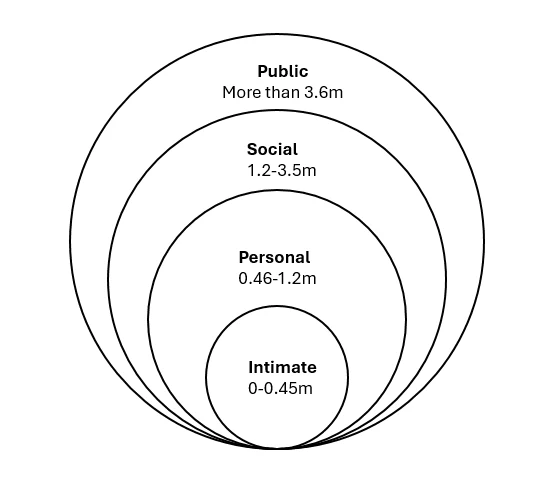

The journey began with research into human behaviour, specifically how we instinctively adjust our proximity in different social contexts. I explored Edward T. Hall’s proxemics theory, which defines four primary interaction zones:

Intimate (0–0.45m) – Reserved for close personal interactions.

Personal (0.45–1.2m) – Everyday conversation distance.

Social (1.2–3.6m) – General engagement space.

Public (>3.6m) – Large-scale presentations or performances.

proxemic zones (in meters)
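As a rough illustration of how a measured distance maps onto these zones, here is a minimal Python sketch. It is not the project's code, which was written in Unity. The function name `classify_zone` is hypothetical. Treating each upper bound as inclusive is an assumption.

```python
from bisect import bisect_left

# Upper bound (m) of each of Hall's first three zones, using the values
# listed above. Anything beyond the last bound counts as public.
ZONE_BOUNDS = [0.45, 1.2, 3.6]
ZONE_NAMES = ["intimate", "personal", "social", "public"]


def classify_zone(distance_m: float) -> str:
    """Return the proxemic zone for a user-agent distance in metres."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    # bisect_left treats each upper bound as inclusive (0.45 m -> intimate).
    return ZONE_NAMES[bisect_left(ZONE_BOUNDS, distance_m)]
```

For example, `classify_zone(0.8)` returns `"personal"`.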

Using Unity and MRTK, I built an adaptive system that allowed AR agents to sense the user's presence and adjust their position accordingly. The system analysed:

User Distance & Movement – Did the user step closer or back away?

Adaptive Positioning – Could the agent reposition smoothly without feeling robotic?
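One plausible way to address both questions works in two steps. First, compute a target point at the desired distance along the user-to-agent line. Then move towards that point by a bounded step each frame. The sketch below assumes 2D floor coordinates `(x, z)`, and its helper names are hypothetical. In Unity, `Vector3.MoveTowards` plays a similar role to `move_towards`.

```python
import math

Vec2 = tuple[float, float]  # hypothetical (x, z) floor-plane coordinates in m


def target_position(user: Vec2, agent: Vec2, desired_m: float) -> Vec2:
    """Point desired_m from the user, on the line from the user towards the agent."""
    dx, dz = agent[0] - user[0], agent[1] - user[1]
    dist = math.hypot(dx, dz)
    if dist == 0:
        # Agent is exactly at the user's position: pick an arbitrary direction.
        dx, dz, dist = 1.0, 0.0, 1.0
    scale = desired_m / dist
    return (user[0] + dx * scale, user[1] + dz * scale)


def move_towards(current: Vec2, target: Vec2, max_step: float) -> Vec2:
    """Advance at most max_step m towards target without overshooting."""
    dx, dz = target[0] - current[0], target[1] - current[1]
    dist = math.hypot(dx, dz)
    if dist <= max_step:
        return target
    return (current[0] + dx / dist * max_step,
            current[1] + dz / dist * max_step)
```

Calling `move_towards(agent, target_position(user, agent, desired_m), speed * dt)` once per frame keeps the motion gradual instead of teleporting the agent. That is what makes repositioning read as walking rather than snapping.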

The next major hurdle was grounding the AR agent. Many AR objects struggle to stay in place due to unstable spatial mapping. To fix this, I implemented:

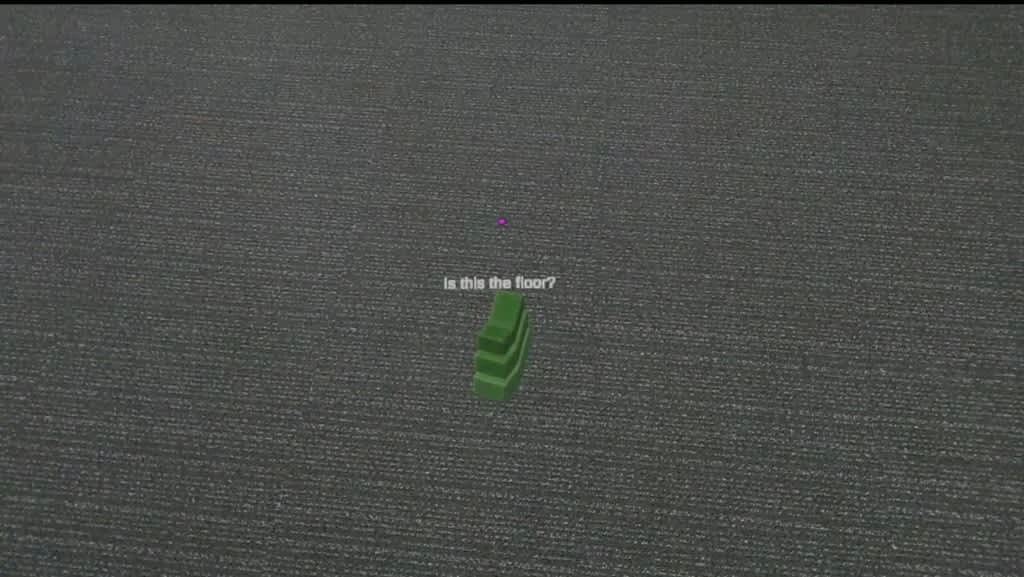

Real-time floor detection using spatial mapping.

NavMesh anchoring, ensuring smooth movement within boundaries.

Raycasting techniques to keep the agent grounded on a stable, detected surface.
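The raycasting idea can be sketched as a ray-plane intersection: cast a ray straight down from slightly above the agent, then place the agent where the ray hits the floor. This Python version makes several assumptions: a y-up coordinate system, a flat floor at a known height `floor_y`, and a hypothetical `probe_height`. In Unity, `Physics.Raycast` against the spatial-mapping colliders would do the hit test instead of the hand-written `raycast_plane`.

```python
from typing import Optional

Vec3 = tuple[float, float, float]  # y-up, as in Unity


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def raycast_plane(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Hit point of a ray with an infinite plane, or None if it misses."""
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the plane
    to_plane = (plane_point[0] - origin[0],
                plane_point[1] - origin[1],
                plane_point[2] - origin[2])
    t = dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # plane lies behind the ray origin
    return (origin[0] + direction[0] * t,
            origin[1] + direction[1] * t,
            origin[2] + direction[2] * t)


def ground_agent(agent: Vec3, floor_y: float, probe_height: float = 2.0) -> Vec3:
    """Cast straight down from above the agent and snap it onto the floor."""
    origin = (agent[0], agent[1] + probe_height, agent[2])
    hit = raycast_plane(origin, (0.0, -1.0, 0.0),
                        (0.0, floor_y, 0.0), (0.0, 1.0, 0.0))
    return hit if hit is not None else agent  # keep position if no floor found
```

The ray starts above the agent rather than at it. That way, an agent that has drifted slightly below the floor is still pulled back up onto it.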

Real-Time Adaptation and Behaviour Control

To ensure a fluid and natural experience, I implemented a real-time adaptation system where the AR agent continuously monitored user movement and recalculated its positioning dynamically.

Programmed real-time distance detection – The agent continuously measured proximity and adjusted its stance within milliseconds.

Defined boundary detection logic – If the user moved outside the limits of the selected zone (too close or too far), the agent recalculated its target position and repositioned accordingly.
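Below is a minimal sketch of such boundary logic. It assumes each zone is given as a minimum and maximum distance, held in a hypothetical `ZoneLimits` class. Two further choices are also assumptions: the tolerance `margin_m`, and the rule of moving back to the middle of the zone. Both were added so that small movements near a zone edge do not make the agent reposition constantly.

```python
from dataclasses import dataclass


@dataclass
class ZoneLimits:
    min_m: float  # closest distance the agent should allow
    max_m: float  # farthest distance before the agent follows


def boundary_check(distance_m: float, zone: ZoneLimits,
                   margin_m: float = 0.1) -> str:
    """Compare the user-agent distance with the selected zone's limits.

    margin_m (hypothetical) is a dead band around each limit so that tiny
    movements at a zone edge do not trigger repositioning.
    """
    if distance_m < zone.min_m - margin_m:
        return "too_close"  # agent should step back
    if distance_m > zone.max_m + margin_m:
        return "too_far"    # agent should approach
    return "ok"             # agent stays where it is


def desired_distance(zone: ZoneLimits) -> float:
    """Repositioning target: the middle of the zone, leaving slack both ways."""
    return (zone.min_m + zone.max_m) / 2
```

When the check returns anything other than `"ok"`, `desired_distance` gives the distance for the agent to restore.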

Additionally, the agent was designed to maintain eye contact and dynamically adjust its head and body alignment based on user movement, making interactions feel more personal and engaging.

Advanced Animation and Behaviour Control

One of the key aspects that brought realism to the agent was ensuring that its body language and movement were lifelike. Instead of feeling robotic, the agent needed to display natural reactions to users’ movements.

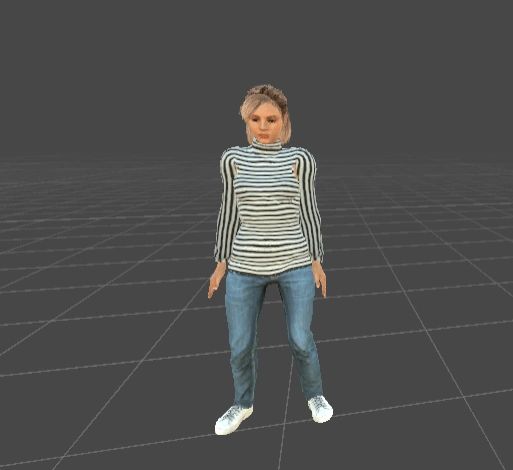

Developed a fully rigged 3D humanoid agent using Unity, Animancer, and Inverse Kinematics (IK) for lifelike movements and interactions.

image of the AR Agent

Implemented a Finite State Machine (FSM) to handle state transitions (idle, walking, turning) based on user proximity, ensuring smooth movement adjustments without abrupt shifts.
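A state machine of this kind might look like the following Python sketch. The inputs `distance_ok` and `facing_error_deg` are assumptions, as are both angle thresholds. The machine uses a lower threshold to leave TURNING than to enter it, which is one common way to stop the agent flickering between states. In the Unity build, each state would presumably drive an animation, with a cross-fade on each transition.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    WALKING = auto()
    TURNING = auto()


def next_state(state: State, distance_ok: bool, facing_error_deg: float,
               enter_turn_deg: float = 15.0, exit_turn_deg: float = 5.0) -> State:
    """One FSM step driven by proximity and by how far the agent faces away.

    distance_ok: whether the user is inside the selected zone's limits.
    facing_error_deg: absolute angle between the agent's forward and the user.
    """
    if not distance_ok:
        return State.WALKING  # restore the zone distance first
    if state is State.TURNING:
        # Keep turning until nearly aligned instead of stopping at the
        # entry threshold, so the agent does not toggle back and forth.
        return State.TURNING if facing_error_deg > exit_turn_deg else State.IDLE
    if facing_error_deg > enter_turn_deg:
        return State.TURNING
    return State.IDLE
```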

For example, when the agent needed to turn towards a user, it transitioned smoothly between states instead of instantly snapping its head round.
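The idea of turning without snapping can be sketched as follows: each frame, rotate the agent's yaw by at most a fixed angular speed, always in the shorter direction. The function names are hypothetical. The yaw convention is an assumption chosen to match Unity: 0° faces +z and 90° faces +x. Unity's `Mathf.MoveTowardsAngle` offers comparable behaviour.

```python
import math


def shortest_angle_deg(from_deg: float, to_deg: float) -> float:
    """Signed difference to_deg - from_deg, wrapped into [-180, 180)."""
    return (to_deg - from_deg + 180.0) % 360.0 - 180.0


def yaw_towards(agent_xz: tuple[float, float], user_xz: tuple[float, float]) -> float:
    """Yaw in degrees that faces the user (0 = +z, 90 = +x)."""
    return math.degrees(math.atan2(user_xz[0] - agent_xz[0],
                                   user_xz[1] - agent_xz[1]))


def turn_step(current_yaw: float, target_yaw: float,
              max_deg_per_s: float, dt: float) -> float:
    """Rotate at most max_deg_per_s * dt degrees towards target_yaw."""
    delta = shortest_angle_deg(current_yaw, target_yaw)
    max_step = max_deg_per_s * dt
    if abs(delta) <= max_step:
        return target_yaw % 360.0
    return (current_yaw + math.copysign(max_step, delta)) % 360.0
```

Because `shortest_angle_deg` wraps the difference, an agent at 350° turning to face 10° rotates 20° forward rather than 340° backward.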

This approach ensured that each interaction felt intentional and immersive, reducing the typical awkwardness found in many AR applications.

Grounding and Navigation

To create a sense of stability and realism, I tackled the issue of floating AR agents by implementing precise grounding mechanisms:

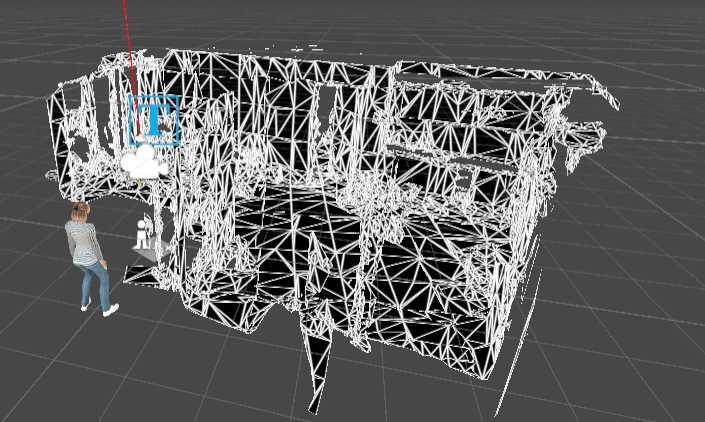

Fragmented spatial mesh detection – Used raycasting to dynamically detect and align with real-world floors via HoloLens spatial mapping.

fragmented spatial meshes generated

NavMesh anchoring – Pre-baked a NavMesh on the detected floor, ensuring the agent always stayed grounded.

A prompt popping up after a spatial mesh was detected

A* algorithm for efficient pathfinding – Enabled the agent to move smoothly within defined boundaries, preventing erratic behaviour.

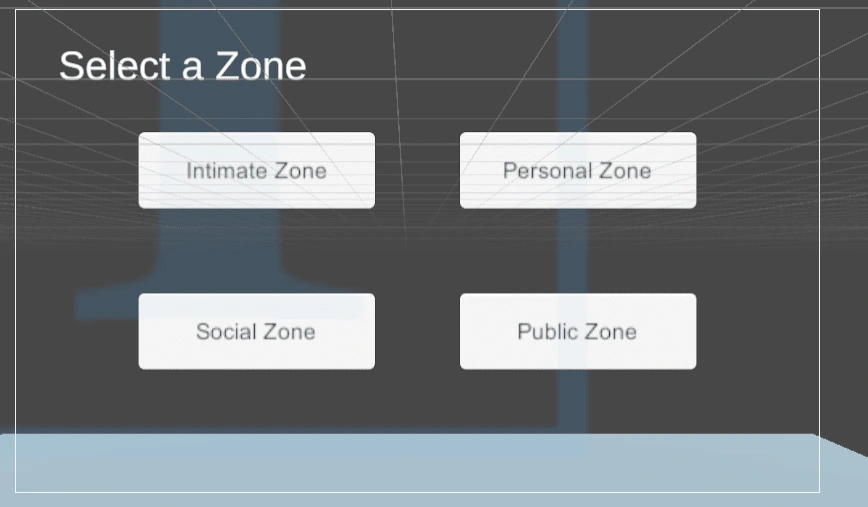

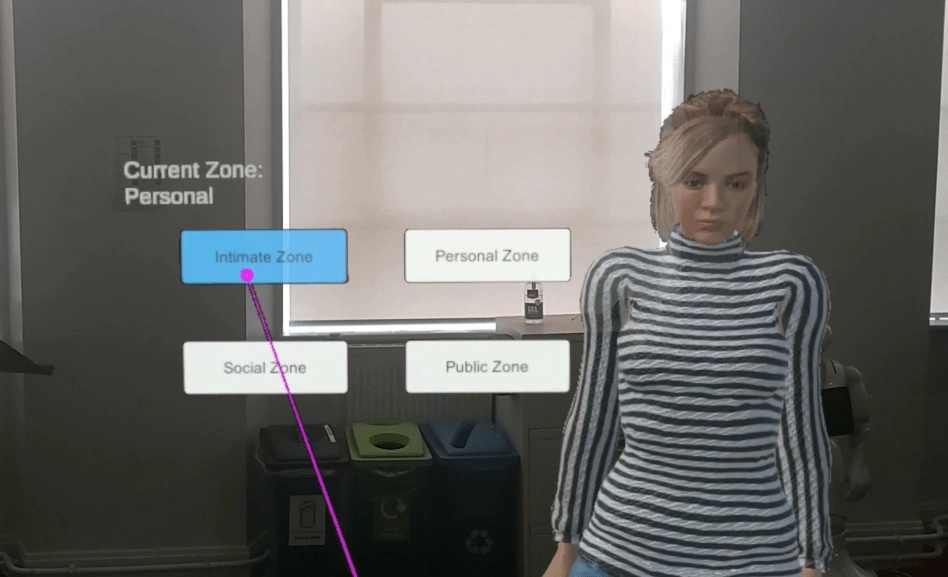
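For readers unfamiliar with A*, here is a compact grid-based version in Python. It is a simplification. Unity's navigation system searches over NavMesh polygons rather than grid cells. The grid encoding used here, with `'#'` marking blocked cells, is an assumption made for illustration.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]  # (row, column)


def astar(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """A* on a 4-connected grid; '#' cells are blocked. Returns a path or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: dict[Cell, Cell] = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry; a cheaper route was already found
        r, c = cell
        for nb in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nb
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                ng = g + 1
                if ng < g_cost.get(nb, float('inf')):
                    g_cost[nb] = ng
                    came_from[nb] = cell
                    heapq.heappush(open_heap, (ng + h(nb), ng, nb))
    return None  # goal unreachable
```

The function returns a shortest list of cells from start to goal, or `None` when the goal cannot be reached within the grid's boundaries.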

User Interface (UI) for Customisation

To make the system more accessible, I designed an intuitive UI allowing users to personalize their experience:

Customizable Proxemic Zones – Users could adjust interaction distances dynamically.

Menu buttons

user interacting with the interface

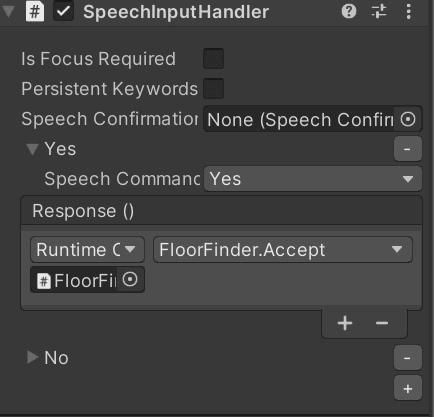

Hands-Free Interaction with MRTK Speech Recognition – Allowed users to confirm floor placement or switch zones using voice commands.

Speech input handler

This flexibility made the AR agent adaptable to different user preferences and accessibility needs, enhancing the overall usability of the system.
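Customisable zones mainly need validation, so that user-chosen distances stay in order. Below is a minimal sketch. It assumes the UI edits three upper bounds, and its class and field names are hypothetical. The defaults are taken from Hall's zones listed earlier.

```python
from dataclasses import dataclass


@dataclass
class ProxemicZones:
    """User-adjustable zone upper bounds in m; public lies beyond social."""
    intimate_max: float = 0.45
    personal_max: float = 1.2
    social_max: float = 3.6

    def __post_init__(self) -> None:
        # Reject settings that would make the zones overlap or invert.
        if not 0 < self.intimate_max < self.personal_max < self.social_max:
            raise ValueError("zone bounds must be positive and strictly increasing")

    def limits(self, zone: str) -> tuple[float, float]:
        """(min, max) distance the agent should keep for the selected zone."""
        table = {
            "intimate": (0.0, self.intimate_max),
            "personal": (self.intimate_max, self.personal_max),
            "social": (self.personal_max, self.social_max),
            "public": (self.social_max, float("inf")),
        }
        return table[zone]
```

Validating in `__post_init__` means an invalid setting is rejected when it is created, before the agent ever tries to position itself against it.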

Challenges and Solutions

Challenge: Fragmented spatial meshes disrupted NavMesh generation.

Solution: Placed a static plane overlay on the floor, detected via raycasting, and used it instead of the fragmented real-time spatial mesh for NavMesh generation.

Challenge: Ensuring agent realism in mixed environments.

Solution: Used Animancer and IK to dynamically adjust animations and maintain a natural appearance during interactions.

Challenge: Proxemics is rarely implemented in AR.

Solution: Integrated dynamic zone recognition and real-time behaviour adjustment based on user positioning and movement.

User Testing

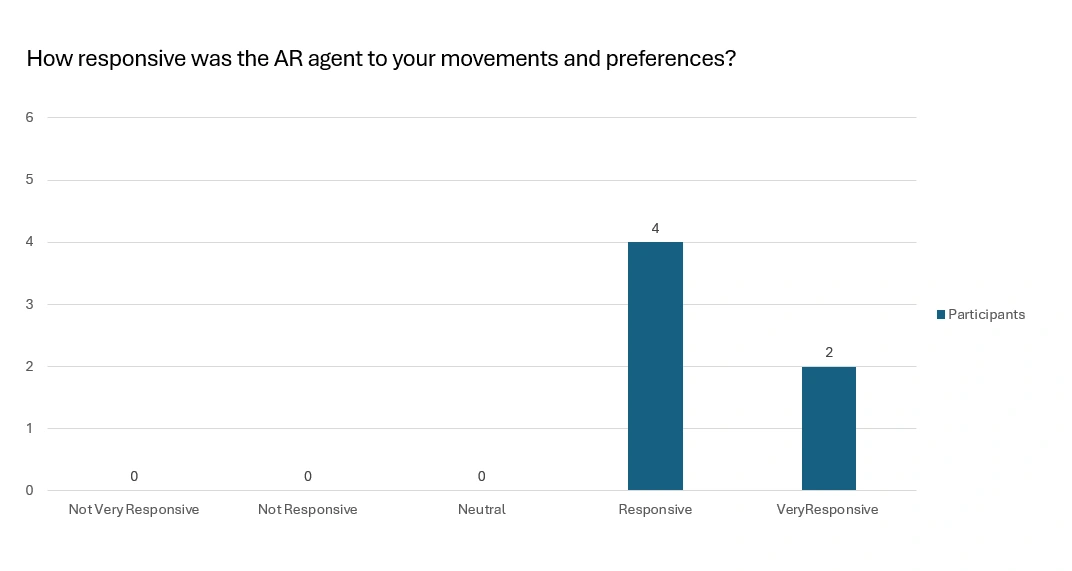

To validate the effectiveness of the system, I conducted user testing with 6 participants aged 21–30 in a controlled environment.

Methodology:

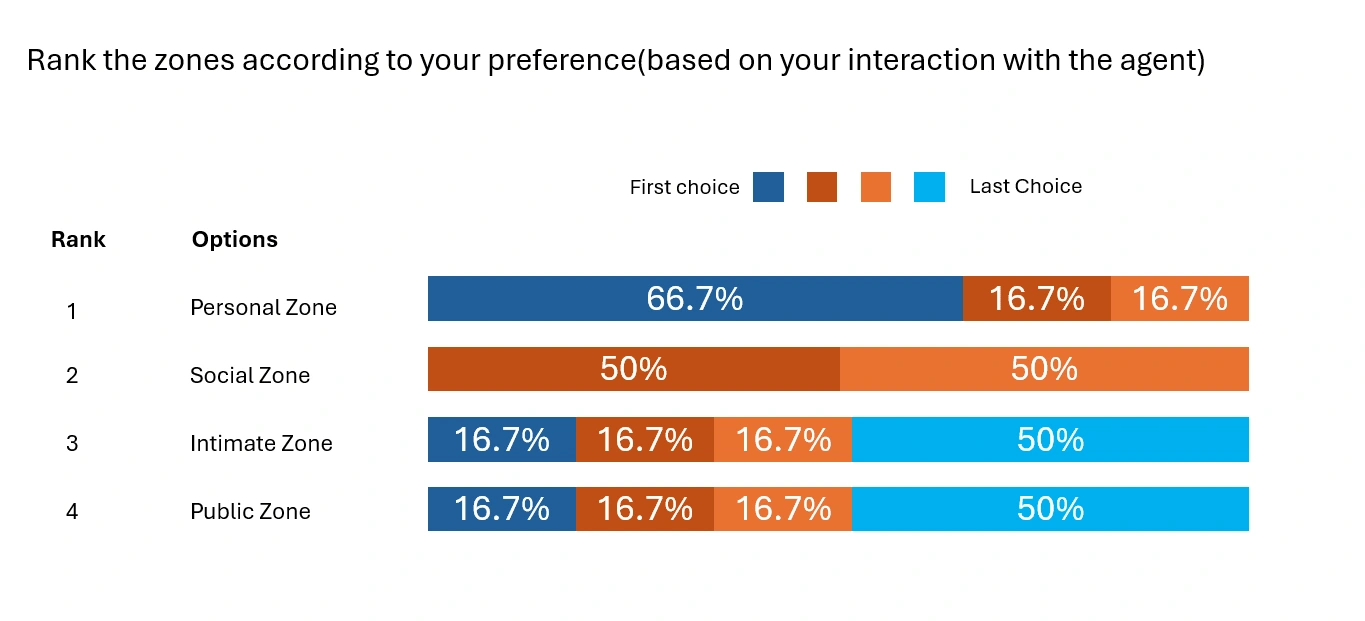

Participants interacted with the AR agent by selecting different proxemic zones (intimate, personal, social, public) through a customised user interface.

Evaluated the agent’s ability to maintain consistent proximity, adapt to user movement, and respond to boundary-breaking actions.

Key Insights

Users found the agent's movement intuitive – Real-time adaptation made interactions feel natural.

Subtle animation cues were appreciated – Small shifts, head turns, and posture adjustments helped improve believability.

Speech recognition added to accessibility – Users enjoyed hands-free interaction using voice commands.

Grounding issues were resolved – Participants noted that the agent felt anchored in the real world, rather than floating.

rating of agent's responsiveness

ranking of the zones

The animations (walking, turning, and eye contact) were praised for their natural feel and realism.

The interactive UI and speech recognition were noted as intuitive and user-friendly.

Challenges Identified:

Occasionally, the agent moved abruptly when users stepped outside the NavMesh boundaries.

There was a minor delay in recalibrating proximity when participants moved too quickly.

Future Scope

1. Improved Dynamic Environments

Enhance real-time adaptability by integrating runtime NavMesh updates to allow the agent to navigate expanding or shifting spaces seamlessly.

2. Advanced Proxemics Customisation

Introduce features for users to define custom proximity zones tailored to their cultural or individual preferences.

3. Expanding Applications

Healthcare: Deploy proxemics-aware agents as interactive therapy assistants or virtual nurses.

Education: Integrate with AR-based learning platforms to provide personalized tutoring in immersive environments.

Retail: Use agents for enhanced customer service by guiding users while respecting their personal space.

4. AI Enhancements

Incorporate machine learning algorithms to enable agents to predict user preferences over time, refining interactions through contextual learning.

Implement gesture recognition for more intuitive interactions beyond speech and touch.

Impact and Significance

Validated Feasibility: User testing with 6 participants indicated that proxemics-aware agents are practical and can improve the user experience.

Real-World Relevance: This work bridges gaps in AR/VR design by addressing user comfort and cultural considerations, paving the way for personalised AR applications.

Future Potential: By enhancing the flexibility and scalability of the system, the technology could benefit fields such as healthcare, retail, education, and entertainment.

Video Demo

Tools & Skills Demonstrated

Unity, Animancer, MRTK

AI techniques: FSM, IK, NavMesh

AR/VR hardware: Microsoft HoloLens 2

Raycasting, 3D modeling, animation control

Thank you!!!


Posted Dec 2, 2024

Created an innovative 3D AR agent that adapts dynamically to user proximity and behavior by implementing proxemics theory.