Human-in-the-loop AI Agent

tldr:

The AI agent can now use tools: code generation and payment collection.

Code generated is compiled and the errors can be fed back to the agent for further debugging.

Compiled trading bots are uploaded to GCP

User downloads the bot after successful payments.

Tech: Node.js with TypeScript, Supabase, Redis, Next.js, Zustand, Socket.IO (WebSockets), Temporal (workflow orchestration)

Goal-based human-in-the-loop AI agent.

Here is how it works:

The goal:

The agent asks the user a series of guided questions to collect the inputs needed to build a trading bot in MQL5. Once all the questions are answered, the agent generates MQL5 code and calls a batch script that runs on Wine to compile it. If compilation fails, the error from MT5 is logged to a file. The agent then calls a function that reads the errors from this file to help resolve the issue.

Context:

The agent is equipped with MQL5 functions that I have curated over the last 4+ years and a tool definition for all the arguments it needs to collect from the user (hence the guided questions).
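To illustrate how a tool definition can drive the guided questions, here is a minimal sketch. The argument names (`symbol`, `timeframe`, `entryRule`, `stopLossPips`) and the `nextQuestion` helper are hypothetical and only stand in for whatever the real tool definition contains.

```typescript
// Hypothetical argument schema for the code-generation tool.
// Field names and questions are illustrative, not the actual schema.
interface ToolArgument {
  name: string;
  question: string; // the guided question that collects this argument
  required: boolean;
}

const botArguments: ToolArgument[] = [
  { name: "symbol", question: "Which symbol should the bot trade?", required: true },
  { name: "timeframe", question: "Which chart timeframe should it use?", required: true },
  { name: "entryRule", question: "What condition should open a trade?", required: true },
  { name: "stopLossPips", question: "How many pips for the stop loss?", required: false },
];

type Collected = Record<string, string | undefined>;

// Returns the next required argument that has no answer yet,
// or null once every required argument has been collected.
function nextQuestion(args: ToolArgument[], collected: Collected): ToolArgument | null {
  for (const arg of args) {
    const value = collected[arg.name];
    if (arg.required && (value === undefined || value.trim() === "")) {
      return arg;
    }
  }
  return null;
}
```

When `nextQuestion` returns `null`, the agent has everything it needs and can move on to code generation.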

The human-in-the-loop step is where the user confirms that the generated code is correct, so that the agent can execute the function that calls the Wine batch script. If none of the curated functions implement the requested functionality, the agent falls back to generating the MQL5 code to the best of its ability.

Technical details:

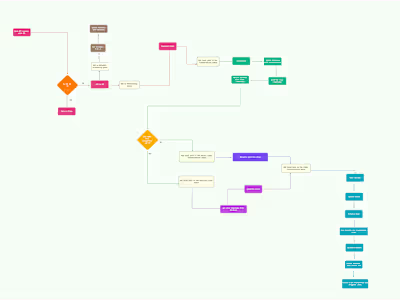

Workflows as code:

I am currently not using any AI agent frameworks because I want as much control as possible. What you can see on the right in the demo is Temporal. I need the workflows to be durable and long-running without having to do retries manually.

The current flow is:

User sends the initial prompt which fires the workflow

The agent validates if the user’s message is geared towards the goal. If it's not, the agent redirects the conversation to keep it on track.

If the message answers a question that helps the agent get arguments for the code generation tool, it moves the conversation to answering the next relevant question.

Once all arguments are gathered, the agent generates the MQL5 code and waits for the user to confirm whether it should actually run the Wine batch script.

The user has an option to confirm or reject the code implementation.

The user can also give the agent suggestions on the code fixes before the code is generated.

For messages and tool confirmations, I am using Temporal signals that are implemented behind an Express route.
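The flow above can be modelled as a small state machine. The sketch below is a plain TypeScript reducer, not Temporal code: the phase names, event names, and `applyEvent` function are assumptions made for illustration. In a Temporal workflow, events such as `user_message`, `confirm`, and `reject` would arrive as signals, while the others would come from the agent's own steps.

```typescript
type Phase = "collecting" | "awaiting_confirmation" | "compiling" | "done";

// Hypothetical events; the first, third and fourth mirror user-driven signals.
type AgentEvent =
  | { type: "user_message"; text: string }
  | { type: "args_complete" }
  | { type: "confirm" }
  | { type: "reject"; feedback?: string }
  | { type: "compile_succeeded" }
  | { type: "compile_failed"; errors: string };

interface AgentState {
  phase: Phase;
  history: string[];
}

// Pure transition function: events that are not valid in the
// current phase leave the state unchanged.
function applyEvent(state: AgentState, event: AgentEvent): AgentState {
  switch (event.type) {
    case "user_message":
      return { ...state, history: [...state.history, event.text] };
    case "args_complete":
      return state.phase === "collecting"
        ? { ...state, phase: "awaiting_confirmation" }
        : state;
    case "confirm":
      return state.phase === "awaiting_confirmation"
        ? { ...state, phase: "compiling" }
        : state;
    case "reject":
      if (state.phase !== "awaiting_confirmation") return state;
      return {
        phase: "collecting",
        history: event.feedback ? [...state.history, event.feedback] : state.history,
      };
    case "compile_succeeded":
      return state.phase === "compiling" ? { ...state, phase: "done" } : state;
    case "compile_failed":
      if (state.phase !== "compiling") return state;
      // Compiler errors go back into the conversation for another iteration.
      return {
        phase: "collecting",
        history: [...state.history, `Compiler errors:\n${event.errors}`],
      };
  }
}
```

Keeping the transitions in one pure function makes it easy to check that a stray `confirm` cannot trigger a compilation before the code has been generated.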

LLM

For the LLM I am using Gemini. The system prompt, which doesn't change, is cached using the cache API. When new requests come in, I only pass the cache name to Gemini instead of the full prompt. This also helps reduce bloat in Temporal's memory.
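As a rough sketch of what "only pass the cache name" means, the function below builds a request body that references a cached system prompt by name rather than embedding it. The `cachedContent` and `contents` field names follow my understanding of the Gemini `generateContent` request body and should be checked against the current API reference; the `ChatTurn` type, the `buildRequest` helper, and the cache name in the usage example are hypothetical.

```typescript
interface ChatTurn {
  role: "user" | "model";
  text: string;
}

// Shape assumed from the Gemini generateContent request body;
// verify the field names against the current API documentation.
interface GenerateContentRequest {
  cachedContent: string;
  contents: { role: "user" | "model"; parts: { text: string }[] }[];
}

// Builds a request that carries only the cache name plus the chat turns,
// so the large system prompt never travels with each message.
function buildRequest(
  cacheName: string,
  history: ChatTurn[],
  userMessage: string,
): GenerateContentRequest {
  const turns: ChatTurn[] = [...history, { role: "user", text: userMessage }];
  return {
    cachedContent: cacheName,
    contents: turns.map((turn) => ({
      role: turn.role,
      parts: [{ text: turn.text }],
    })),
  };
}

// Usage with a made-up cache name:
const request = buildRequest(
  "cachedContents/example-id",
  [{ role: "model", text: "Which symbol should the bot trade?" }],
  "EURUSD",
);
```

Because the workflow only needs to hold the short cache name, the payloads passed between workflow steps stay small.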

Database:

All the conversations are currently stored on Supabase (Postgres). There is still no fancy vector or graph DB for the context: the full chat history is passed to the agent on every message. I will hopefully circle back to that next.

Realtime chat

The main challenge with using Temporal is that there is no native way to send event streams. To work around this, I am using Redis and Socket.IO for the WebSocket implementation.
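One way to picture the Redis plus Socket.IO bridge: the worker publishes each event to a per-session channel, and the WebSocket server forwards whatever arrives on that channel to the matching client room. The sketch below uses two small interfaces (`Publisher`, `RoomEmitter`) as stand-ins for a Redis client and a Socket.IO server, so it makes no claims about either library's API. The channel naming scheme and the event shape are assumptions.

```typescript
interface ChatEvent {
  sessionId: string;
  kind: "agent_message" | "tool_status";
  payload: string;
}

// Stand-ins for a Redis publisher and a Socket.IO server (hypothetical).
interface Publisher {
  publish(channel: string, message: string): void;
}
interface RoomEmitter {
  emitToRoom(room: string, event: string, data: ChatEvent): void;
}

// Assumed naming scheme: one channel per chat session.
const channelFor = (sessionId: string): string => `chat:${sessionId}`;

// Worker side: serialize the event and publish it to the session channel.
function publishEvent(pub: Publisher, event: ChatEvent): void {
  pub.publish(channelFor(event.sessionId), JSON.stringify(event));
}

// WebSocket server side: called for each message received on a channel.
// Returns false when the message is malformed or on the wrong channel.
function forwardToSocket(emitter: RoomEmitter, channel: string, raw: string): boolean {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return false;
  }
  if (typeof parsed !== "object" || parsed === null) return false;
  const event = parsed as ChatEvent;
  if (typeof event.sessionId !== "string") return false;
  if (channelFor(event.sessionId) !== channel) return false;
  emitter.emitToRoom(event.sessionId, event.kind, event);
  return true;
}
```

With this split, the workflow side never holds a socket connection; it only needs to be able to publish.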

Frontend

Using Next.js for the frontend with Zustand as the store.

Challenges:

The main issue now is that the prompting is still lacking. I need to revise how I describe my functions because the results are currently hit or miss. I am also running into Wine issues, where the log file sometimes takes so long to become accessible that the agent cannot read it in time. This means that right now the agent thinks all code compilations have failed. I will focus on debugging that next, but the compilation itself works like a charm. Working with Wine on a Mac is also not the best combo. The best implementation would have the compilation happen on Windows, but I am using what I have.
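One possible way to stop a slow log file from being read as a failure is to treat "log not ready yet" as its own state and poll until the log is conclusive. This is only a sketch: the summary-line pattern (`N errors, M warnings`) is an assumption about the compiler log and must be checked against real output, and `classifyLog` and `waitForCompileResult` are hypothetical helpers.

```typescript
type CompileStatus = "pending" | "success" | "failed";

// ASSUMPTION: the log contains a summary such as "0 errors, 1 warnings".
// Check this pattern against real compiler output before relying on it.
const SUMMARY = /(\d+)\s+errors?,\s*(\d+)\s+warnings?/i;

// A missing or incomplete log is "pending", not "failed".
function classifyLog(logText: string | null): CompileStatus {
  if (logText === null) return "pending"; // file not readable yet
  const match = SUMMARY.exec(logText);
  if (match === null) return "pending"; // file exists but has no summary yet
  return Number(match[1]) === 0 ? "success" : "failed";
}

// Polls with a fixed delay until the log is conclusive or attempts run out.
// `readLog` is injected so the caller decides how the file is read.
async function waitForCompileResult(
  readLog: () => Promise<string | null>,
  attempts = 10,
  delayMs = 500,
): Promise<CompileStatus> {
  for (let i = 0; i < attempts; i++) {
    const status = classifyLog(await readLog());
    if (status !== "pending") return status;
    await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  return "pending"; // still inconclusive; the caller can retry or escalate
}
```

The agent would then only be told that compilation failed when the log actually reports errors, and a `pending` result can be retried instead.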

Next steps:

Fix above issues

Better prompt engineering

Experiment with batched system prompt caching. Instead of giving Gemini one huge dump, pass the cache that matches the current context.

Experiment with different RAG techniques for the conversation history being passed to the agent

Ability to backtest on the UI even before the user gets to the code compilation step

Update 8th July:

My human-in-the-loop AI agent can now bill the user once the code is correctly compiled.

Here is the current flow:

User gives all the details needed to build an automated trading strategy

The agent generates the code for the strategy

The user can confirm or reject the code given

If they reject, the back-and-forth with the AI continues.

If they confirm, the AI fires a tool that calls Wine (will move to Windows eventually) and compiles the code.

The compilation logs are written to a logfile.

If the log file says the compilation was a success, the compiled trading bot is uploaded to Google Cloud.

The user does not get the bot right away; they have to pay first.

So the agent goes on to gather the user's email and name. These are then used to make payments.

Once payment is successful, the user can download the bot from GCP.

If there are code errors, the errors are fed back to the agent and another iteration of the loop happens. This is basically vibecoding trading bots.
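The compile, feed back, retry loop can be sketched as a bounded repair loop. The `compile` and `fix` functions are injected and hypothetical: in the real system they would correspond to the Wine compilation step and a call to the LLM, and would be asynchronous. A cap on attempts is an assumption added here so the loop cannot run forever.

```typescript
interface CompileResult {
  ok: boolean;
  errors: string;
}

interface RepairOutcome {
  code: string;
  attempts: number;
  ok: boolean;
}

// Compiles the code, and on failure asks `fix` for a revised version,
// up to `maxAttempts` compilations in total.
function repairLoop(
  initialCode: string,
  compile: (code: string) => CompileResult,
  fix: (code: string, errors: string) => string,
  maxAttempts = 3,
): RepairOutcome {
  let code = initialCode;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = compile(code);
    if (result.ok) return { code, attempts: attempt, ok: true };
    // Only request a fix if another compilation will follow.
    if (attempt < maxAttempts) code = fix(code, result.errors);
  }
  return { code, attempts: maxAttempts, ok: false };
}
```

In the flow described here, an `ok` outcome would lead to the upload and payment steps, while a failed outcome would hand control back to the user.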

I also switched to Temporal Cloud instead of running it on my laptop.

The prompting is still off. Will focus more on that next!


Posted Jul 4, 2025

Developed a fullstack human-in-the-loop AI agent with workflow as code. The AI agent also opens a chart canvas.