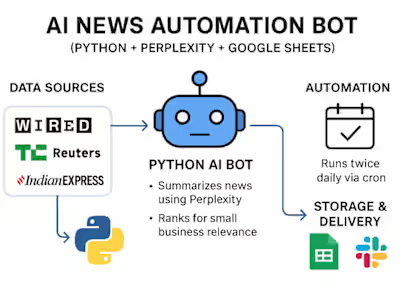

High-Performance RAG Application Development

High-Performance RAG Application

A production-ready Retrieval-Augmented Generation (RAG) application built with FastAPI, LangChain, and ChromaDB, developed using Replit. It supports multi-user isolation and AWS deployment, and can handle 1000+ requests per minute when running on AWS with cloud AI APIs (see Performance Expectations).

🚀 Features

Smart Load Balancing: Automatically switches between local Ollama and cloud APIs

Multi-User Support: Isolated data and chat history per user

Multiple AI Providers: OpenAI GPT, Google Gemini, and local Ollama

Scalable Architecture: Auto-scaling on AWS with load balancing

Persistent Storage: EFS-based storage for documents and embeddings

Rate Limiting: Configurable request limits per user

Chat History: RAG-enhanced conversation memory

Admin Interface: User and file management APIs

Docker Support: Containerized deployment

Realistic Testing: Performance testing that accounts for hardware limits
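The Smart Load Balancing feature is only described at a high level here. One way such switching could work is a local-first router: requests go to the local Ollama model while it has a free slot and overflow to a cloud provider otherwise, or when the local call fails. This is a minimal sketch under that assumption; `LocalFirstRouter`, `max_local`, and the prompt-to-text provider callables are hypothetical names, not the project's code.

```python
import threading
from typing import Callable

# Hypothetical provider signature: prompt in, generated answer out.
Provider = Callable[[str], str]


class LocalFirstRouter:
    """Prefer the local model while it has spare capacity; otherwise use the cloud.

    Illustrative sketch only: the real project may switch on different signals.
    """

    def __init__(self, local: Provider, cloud: Provider, max_local: int = 1) -> None:
        self._local = local
        self._cloud = cloud
        # Limits how many requests the local model handles at the same time.
        self._slots = threading.BoundedSemaphore(max_local)

    def generate(self, prompt: str) -> tuple[str, str]:
        """Return (provider_label, answer)."""
        # Non-blocking acquire: if every local slot is busy, go straight to the cloud.
        if self._slots.acquire(blocking=False):
            try:
                return "local", self._local(prompt)
            except Exception:
                pass  # local model failed or timed out; fall through to the cloud
            finally:
                self._slots.release()
        return "cloud", self._cloud(prompt)
```

A small `max_local` keeps a laptop-class Ollama instance from queueing requests it cannot serve quickly, which matches the idea that local-only throughput is low while hybrid mode overflows to cloud APIs.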

📋 Requirements

Python 3.11+

Docker (for deployment)

AWS Account (for cloud deployment)

OpenAI API key or Google Gemini API key

🏗️ Architecture

🚀 Quick Start

Local Development

Clone and Setup

Configure Environment

Start Server

Test the API

Using Admin Interface

🧪 Performance Testing

Realistic Performance Test (Recommended)

Laptop-Friendly Testing

High-Load Testing (Requires Cloud APIs)

Performance Expectations:

Local Ollama Only: 5-10 RPM max

Hybrid Mode: 50-200 RPM (switches to cloud)

Cloud APIs Only: 1000+ RPM (AWS deployment)
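The gap between these modes follows from simple throughput arithmetic. A worker that answers one request at a time for `s` seconds per answer tops out at `60 / s` requests per minute, and Little's law (L = λW) gives the average number of requests in flight. The latencies below (6 to 12 s per local answer, 2 s per cloud answer) are illustrative assumptions, not measurements from this project.

```python
def max_rpm(workers: int, seconds_per_request: float) -> float:
    """Upper bound on requests per minute when each worker serves one request at a time."""
    return workers * 60.0 / seconds_per_request


def in_flight(rpm: float, seconds_per_request: float) -> float:
    """Little's law (L = lambda * W): average number of requests being processed."""
    return (rpm / 60.0) * seconds_per_request


# Illustrative (assumed) latencies:
print(max_rpm(1, 6.0))    # 10.0: a single local worker at ~6 s per answer
print(max_rpm(1, 12.0))   # 5.0: the same worker at ~12 s per answer
print(f"{in_flight(1000, 2.0):.1f}")  # 33.3 concurrent requests at 1000 RPM, 2 s each
```

Under these assumptions, a single local worker lands in the 5-10 RPM range, while 1000 RPM needs roughly 33 requests in progress at once, which is only practical with cloud APIs and several instances.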

☁️ AWS Deployment

Prerequisites

Automated Setup

Manual AWS Setup

Create Infrastructure (see DEPLOYMENT_GUIDE.md)

VPC with public/private subnets

EFS file system

ECR repository

Security groups

Deploy Application

Build and push Docker image

Create Launch Template

Set up Auto Scaling Group

Configure Load Balancer

Test Deployment

📊 API Endpoints

Authentication

All endpoints use HTTP Basic Authentication.
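HTTP Basic Authentication sends `Authorization: Basic <base64(user:password)>` with every request. A minimal server-side check might look like the sketch below; `parse_basic_auth`, `check_credentials`, and the in-memory `users` dict are hypothetical, and a real deployment should store password hashes rather than plaintext and serve the API over HTTPS.

```python
from __future__ import annotations

import base64
import binascii
import hmac


def parse_basic_auth(header: str | None) -> tuple[str, str] | None:
    """Decode an 'Authorization: Basic ...' header value into (user, password)."""
    if not header or not header.startswith("Basic "):
        return None
    try:
        decoded = base64.b64decode(header[len("Basic "):], validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return None
    user, sep, password = decoded.partition(":")
    return (user, password) if sep else None


def check_credentials(header: str | None, users: dict[str, str]) -> str | None:
    """Return the username if the header matches a known user, else None."""
    parsed = parse_basic_auth(header)
    if parsed is None:
        return None
    user, password = parsed
    expected = users.get(user)
    # compare_digest avoids leaking how much of the password matched via timing.
    if expected is None or not hmac.compare_digest(password.encode(), expected.encode()):
        return None
    return user
```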

User Management

POST /admin/users/add - Add new user

POST /admin/users/remove - Remove user

File Management

POST /admin/files/upload - Upload PDF file

POST /admin/files/remove - Remove file

Query

POST /query/ - Send query to RAG system

Example Usage
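The original usage example is not preserved here. As a stand-in, this standard-library sketch builds an authenticated request for `POST /query/`. The `{"query": ...}` body shape, the JSON response, and the `http://localhost:8000` base URL (uvicorn's default port) are assumptions; check the server's request model before relying on them.

```python
import base64
import json
import urllib.request


def build_query_request(base_url: str, user: str, password: str,
                        question: str) -> urllib.request.Request:
    """Build a POST /query/ request with HTTP Basic auth and a JSON body (assumed shape)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    body = json.dumps({"query": question}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/query/",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )


def send_query(req: urllib.request.Request, timeout: float = 60.0) -> object:
    """Send the request and decode the (assumed) JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

With the server running, `send_query(build_query_request("http://localhost:8000", "alice", "s3cret", "What does the uploaded PDF say?"))` would issue the call; `alice`/`s3cret` stand for a user created through `/admin/users/add`.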

🔧 Configuration

Environment Variables
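The actual variable list is not preserved here. The sketch below shows one way to load settings from the environment; every variable name except the widely used `OPENAI_API_KEY` is a hypothetical placeholder, `http://localhost:11434` is Ollama's default address, and 1500 mirrors the default rate limit stated in this README. It enforces the requirement that at least one of the OpenAI or Gemini keys is set.

```python
from __future__ import annotations

import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    openai_api_key: str | None
    gemini_api_key: str | None
    ollama_base_url: str
    rate_limit_per_minute: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read configuration from environment variables (names are illustrative)."""
    settings = Settings(
        openai_api_key=env.get("OPENAI_API_KEY"),
        gemini_api_key=env.get("GEMINI_API_KEY"),  # hypothetical name
        ollama_base_url=env.get("OLLAMA_BASE_URL", "http://localhost:11434"),  # hypothetical name
        rate_limit_per_minute=int(env.get("RATE_LIMIT_PER_MINUTE", "1500")),  # hypothetical name
    )
    # The requirements call for an OpenAI or a Google Gemini API key.
    if not (settings.openai_api_key or settings.gemini_api_key):
        raise ValueError("set OPENAI_API_KEY or GEMINI_API_KEY")
    return settings
```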

Rate Limiting
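As an illustration of how a per-IP requests-per-minute limit can be enforced, here is a minimal sliding-log limiter. It is not necessarily what `main.py` uses (the project may rely on a library); `SlidingWindowLimiter` is a hypothetical name, and this version is single-process and not thread-safe.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each client key (e.g. an IP)."""

    def __init__(self, limit: int = 1500, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self._clock = clock
        self._hits: dict[str, deque] = defaultdict(deque)

    def allow(self, key: str) -> bool:
        now = self._clock()
        hits = self._hits[key]
        # Drop timestamps that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # the API would typically answer HTTP 429 here
        hits.append(now)
        return True
```

Because each instance keeps its own counters, the effective limit behind a load balancer is roughly the per-instance limit times the number of instances; a shared store would be needed for a global limit.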

Default: 1500 requests per minute per IP. Modify in main.py.

Scaling Configuration

Min instances: 1

Max instances: 10

Target CPU: 70%

Scale-out cooldown: 300s

Scale-in cooldown: 300s
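With a 70% CPU target, target tracking adjusts capacity roughly in proportion to how far average CPU is from the target, bounded by the group's minimum and maximum. The function below is a simplified approximation for reasoning about these settings, not AWS's exact algorithm; it ignores the 300 s cooldowns and AWS's more conservative scale-in behavior.

```python
import math


def desired_capacity(current: int, avg_cpu: float, target_cpu: float = 70.0,
                     min_size: int = 1, max_size: int = 10) -> int:
    """Approximate target tracking: scale by load/target, then clamp to [min_size, max_size]."""
    if current < 1:
        return min_size
    proposed = math.ceil(current * avg_cpu / target_cpu)
    return max(min_size, min(max_size, proposed))


print(desired_capacity(2, 90.0))   # 3: two instances at 90% CPU
print(desired_capacity(8, 100.0))  # 10: capped at the maximum of 10 instances
```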

📁 Project Structure

🔍 Monitoring and Troubleshooting

Health Check
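The health-check command itself is not preserved here. After a deployment, a small polling helper can wait until the service reports healthy. The `/health` path and the retry settings are hypothetical; point the URL at whatever health endpoint the app and load balancer actually use.

```python
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 5.0) -> bool:
    """Return True if a GET on `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:  # covers URLError/HTTPError, refused connections, and timeouts
        return False


def wait_until_healthy(url: str, attempts: int = 10, delay: float = 3.0,
                       check: Callable[[str], bool] = http_ok,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll the health endpoint until it succeeds or the attempts run out."""
    for i in range(attempts):
        if check(url):
            return True
        if i < attempts - 1:
            sleep(delay)
    return False
```

For example, `wait_until_healthy("http://localhost:8000/health")` locally, or the load balancer's DNS name after an AWS deployment.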

Logs

Common Issues

High Response Times

Increase instance size

Add more instances

Check EFS performance

Rate Limiting

Increase rate limits

Add more instances

Implement caching
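For "Implement caching", a minimal in-process TTL cache for answers is sketched below. It keys entries by user as well as query so per-user isolation is preserved, and the TTL bounds how long an answer can go stale after documents change. `TTLCache` and its defaults are hypothetical; each instance behind the load balancer would hold its own copy, which is the gap the Redis caching layer on the roadmap would close.

```python
from __future__ import annotations

import time
from typing import Callable


class TTLCache:
    """Tiny per-user answer cache with expiry and oldest-first eviction."""

    def __init__(self, ttl: float = 300.0, max_items: int = 1000,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.max_items = max_items
        self._clock = clock
        self._data: dict[tuple[str, str], tuple[float, str]] = {}

    @staticmethod
    def _key(user: str, query: str) -> tuple[str, str]:
        # Normalize case and whitespace so trivially different inputs share an entry.
        return user, " ".join(query.lower().split())

    def get(self, user: str, query: str) -> str | None:
        key = self._key(user, query)
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, answer = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired: drop it and report a miss
            return None
        return answer

    def put(self, user: str, query: str, answer: str) -> None:
        key = self._key(user, query)
        self._data.pop(key, None)  # re-inserting moves the key to the newest position
        if self._data and len(self._data) >= self.max_items:
            self._data.pop(next(iter(self._data)))  # evict the oldest insertion
        self._data[key] = (self._clock() + self.ttl, answer)
```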

Memory Issues

Use larger instances

Optimize ChromaDB settings

Implement cleanup

💰 Cost Estimation

10-minute test (300 users, 1000 RPM):

EC2 instances (2x t3.medium): ~$0.20

EFS storage: ~$0.01

Load balancer: ~$0.05

Data transfer: ~$0.01

Total: ~$0.27
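The test figures also give a rough per-request cost. At 1000 RPM for 10 minutes, using only the line items listed above:

```latex
\begin{aligned}
\text{requests} &= 10\ \text{min} \times 1000\ \text{req/min} = 10{,}000 \\
\text{total} &= 0.20 + 0.01 + 0.05 + 0.01 = \$0.27 \\
\text{cost per 1000 requests} &= \frac{0.27}{10{,}000} \times 1000 = \$0.027
\end{aligned}
```

The estimate has no line item for OpenAI or Gemini API usage, so any such provider charges would presumably come on top of this figure.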

Monthly production (moderate load):

EC2 instances: ~$50-100

EFS storage: ~$10-20

Load balancer: ~$20

Total: ~$80-140/month

🤝 Contributing

Fork the repository

Create a feature branch

Make your changes

Add tests

Submit a pull request

📄 License

This project is licensed under the MIT License.

🆘 Support

For issues and questions:

Check the DEPLOYMENT_GUIDE.md

Review the troubleshooting section

Check CloudWatch logs for AWS deployments

Open an issue with detailed error information

🔮 Roadmap

Web UI for administration

Redis caching layer

Multi-region deployment

Advanced analytics

API versioning

Webhook support

Advanced security features


Posted Nov 5, 2025

Developed a high-performance RAG application with FastAPI, LangChain, and ChromaDB in Replit and deployed it on an AWS EC2 instance.
