Build Your Own RLHF LLM — Forget Human Labelers!

Tim Cvetko

Backend Engineer

ML Engineer

AI Model Developer

PyTorch

You know, that thing OpenAI used to make GPT3.5 into ChatGPT? You can do the same without asking strangers to rank statements.

I would never have put my finger that the next big revolution in AI would have happened on the text front. As an early adopter of the BERT models in 2017, I hadn’t exactly been convinced computers could interpret human language with similar granularity and contextuality as people do. Since then, 3 larger breakthroughs have formed the Textual Revolution:

Self-attention: the ability to learn contextual learning of sentences.

Large Transformer Models(GPTs) — the ability to learn from massive corpora of data and build conversational awareness.

Reinforcement Learning from Human Feedback(RLHF) — the ability to enhance LLM performance with human preference. However, this method is not easily replicable due to the extensive need for human labelers.

Forget Human Labelers!

Here’s What You’ll Learn:

How GPT-3.5 used RLHF to reinforce the LLM to make it ChatGPT

Complete Code Walkthrough: Train Your Own RLHF Model

Complete Code Walkthrough: How to make the LLM Reinforce Itself Without Human Labelers, i.e Self-Play LLMs

How GPT-3 Used Human Feedback to Reinforce the LLM

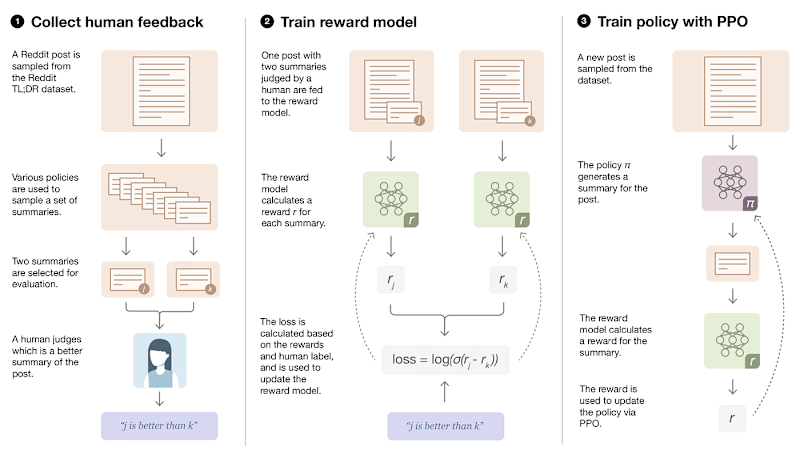

Reinforcement learning from human feedback(RLHF) refers to using human labels as a reward policy the LLM uses to evaluate itself. Here’s how people act as judges:

Suppose we have a post from Reddit: “The cat is flying through the air”:

Two summaries are selected for evaluation

A human judge decides which is a better summary for the post

So, we’ve got this elaborate LLM called the GPT-3 with 175 billion parameters, requiring 350GB of storage space. We introduced a human policy that rewards/punishes the LLM to attend to the human world. Okay, how do we get to ChatGPT?

GPT-3.5 became ChatGPT via fine-tuning on multiple tasks, including code completion, text summarization, translation, etc + an enhanced conversation UI.

Train Your Own RLHF Model

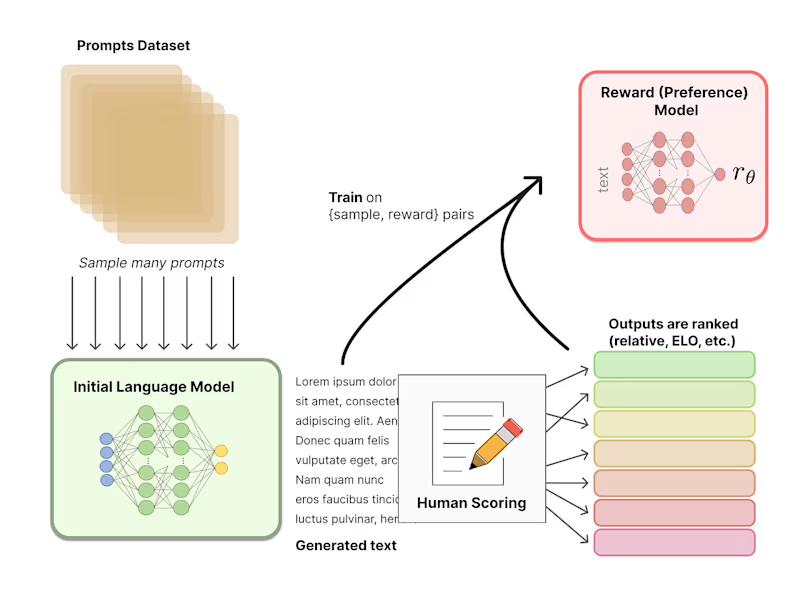

Implementing RLHF with custom datasets can be a daunting task for those unfamiliar with the necessary tools and techniques. The primary objective of this notebook is to showcase a technique for reducing bias when fine-tuning Language Models (LLMs) using feedback from humans. To achieve this goal, we will be using a minimal set of tools, including Huggingface, GPT2, Label Studio, Weights and Biases, and trlX.

Training Approach for RLHF (source):

Collect human feedback

Train a reward model

Optimize LLM against the reward model

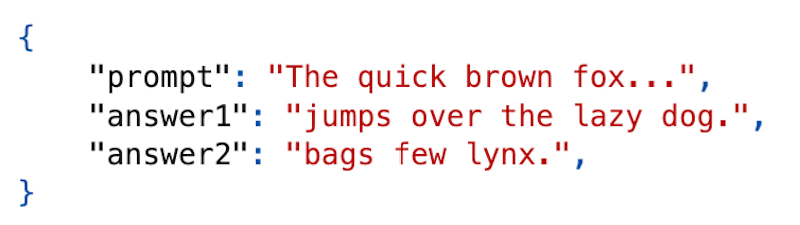

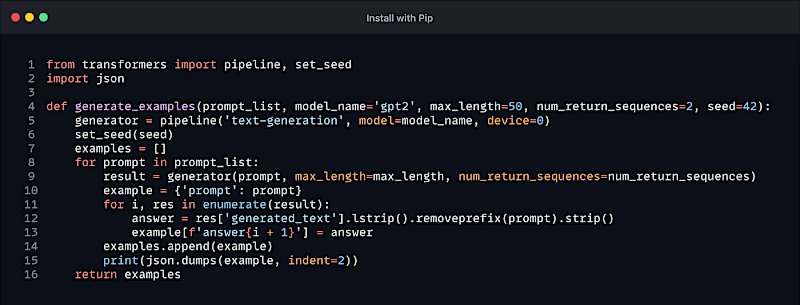

1.Dataset Creation: In this section, we will create a custom dataset for training our reward model. In the case of fine-tuning a LLM for human preference, our data tends to look like this:

The labeler will provide feedback on which selection is preferred, given the prompt. This is the human feedback that will be incorporated into the system. This ranking by human labelers provides allows us to learn a model that scores the quality of our language model’s responses.

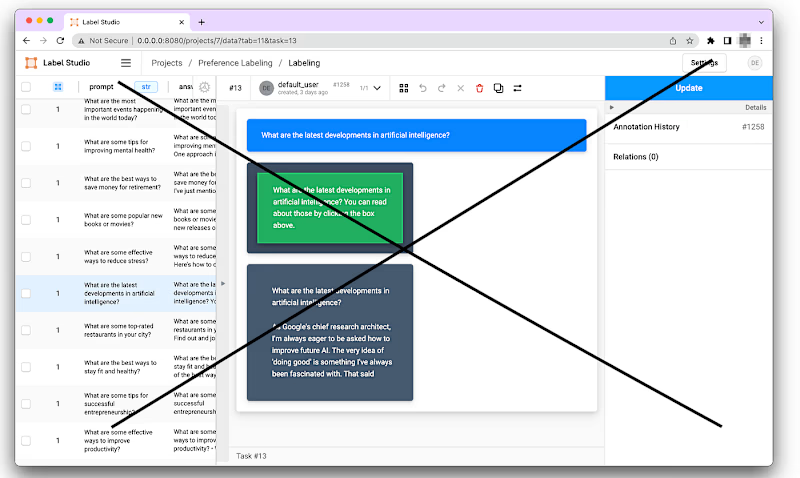

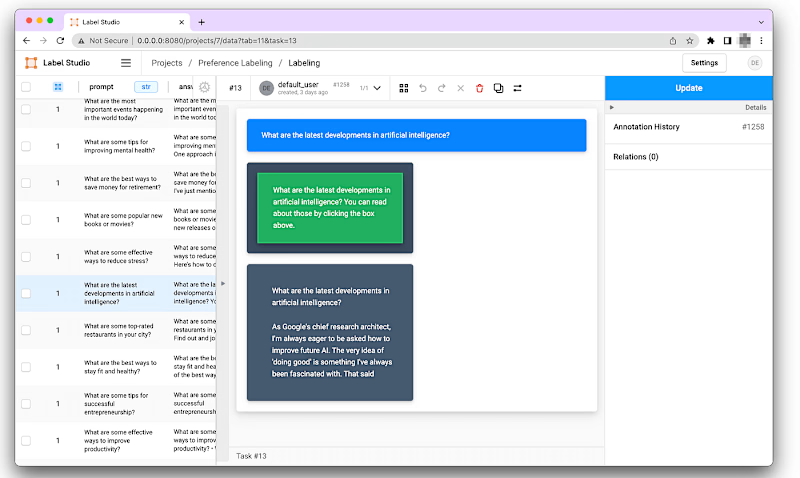

Now that we have generated some examples, we will label them in Label Studio. Once we have the results of our human labels, we can export the data and train our Preference Model.

First, we can start Label Studio following the instructions here.

Once we have the label studio running, we can create a new project with the Pariwise Classification template. The templates themselves are really flexible, so we’ll do some minor edits to make it look a little nicer. The configuration for this template is shown below.

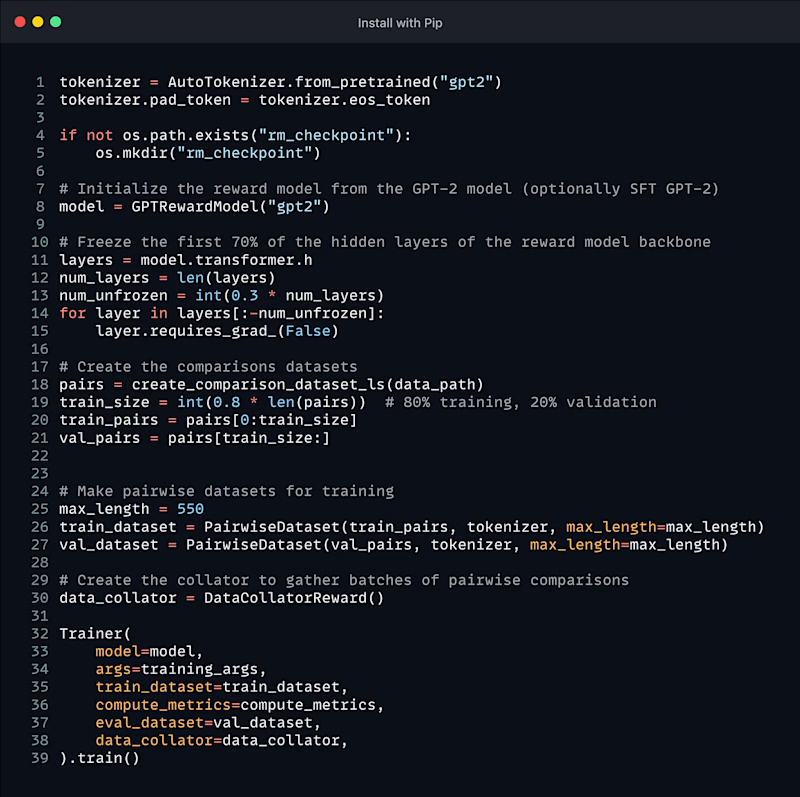

2.Training a Preference Model: We’ll create a dataset from our labels, initialize our model from the pretrained LM, and then begin training.

When we finally train our model, we can connect with Weights and Biases to log our training metrics.

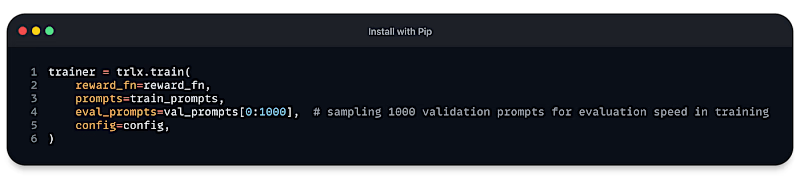

3.Tune the language model using PPO with our preference model. Once we have our reward model, we can train our model using PPO. We can find more details about this setup with the trlX library here.

Right, that’s about it. Let’s Summarize This 3-Step Process Again:

Pretrain a language model (LM) or use an existing one

Gather data for the reward policy model

Tune the language model using PPO with our preference model

Cooool! At this point, you could undertake any sort of task given a dataset and a pretrained model, and you have your very own approximation of a custom GPT-3. That’s great, but what if you don’t have the capacity to neither generate a dataset nor ask 10s of 1000s of people to rank your statements?

How is that even possible?!?

Self-Play Language Models

Training a reward model from human preferences may be bottlenecked by the human performance level and secondly, these separate frozen reward models cannot then learn to improve during LLM training.

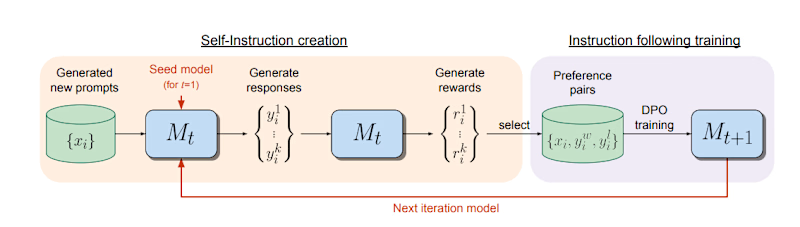

The authors of the paper propose an LLM-as-a-judge reward policy that updates itself during training. Here’s how it works:

Newly created prompts are used to generate candidate responses from model Mt, which also predicts its own rewards via LLM-as-a-Judge prompting.

Instruction following training: preference pairs are selected from the generated data, which are used for training via DPO, resulting in model Mt+1. A preference dataset is built from the generated data, and the next iteration of the model is trained via DPO.

Train Your Own RLHF Model Without Human Labelers

Technically, self-play LLMS no longer belong in the category of RLHF, because it’s not human-as-a-judge but LLM-as-a-judge apart from the early human-annotated seed data.

1.Use one of the pretrained self-play language models. We can find more details about this setup with the HuggingFace library here.

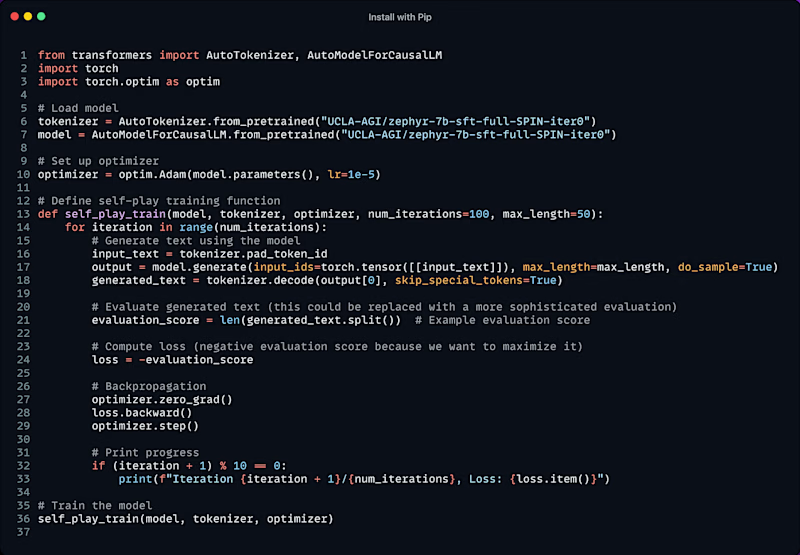

2.Train a Self-Play Language Model: To create code that trains a language model using self-play, you’ll need to implement a training loop that involves generating text using the model and updating the model’s parameters based on the generated text.

Initialize Model: Load the pretrained model.

Generate Text: Let the model generate text samples.

Evaluate Text: Use a metric or a scoring function to evaluate the generated text.

Update Model: Update the model’s parameters based on the evaluations.

Repeat: Repeat steps 2–4 for a number of iterations or until convergence.

By following these steps, you can build their own self-play language model without the need for human labelers.

Right, that’s about it. Let’s Summarize This 2-Step Process Again:

Use one of the pretrained self-play language models.

Train a Self-Play Language Model

To Conclude …

Self-play LLM training eliminates the need for human labelers, promoting autonomous learning.

For the 1st time ever, you can grab pretrained models and fine-tune them as if RLHF had been done for the reward policy model, perhaps even with enhanced performance.

Hey, thanks for reading!

Thanks for getting to the end of this article. My name is Tim, I love to elaborate ML research papers or ML applications emphasizing business use cases! Get in touch!