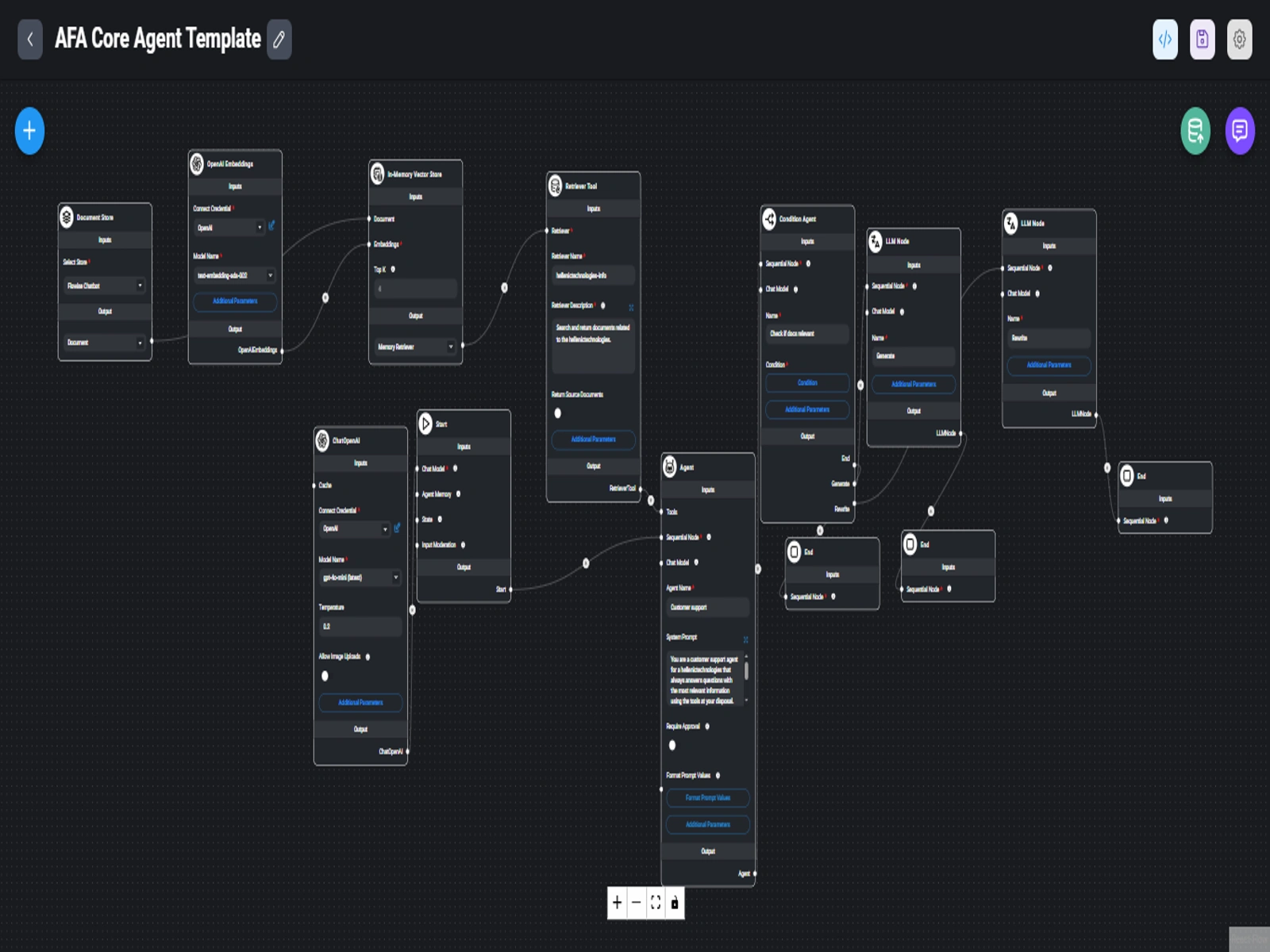

Advanced Flowise AI Chatbot Enhancement

Overview

I Set Up Advanced Flowise AI Chatbot Flows That Improve Customer Satisfaction & Reduce Support Load

Patrick came to me with a capable Flowise setup that did one thing well:

✅ It could retrieve from internal documents (RAG).

The Challenge:

❌ When the documents didn’t contain the answer, it hit a dead end.

❌ There was no fallback to real-time search.

❌ No escalation logic to help the user beyond static content.

❌ Important metadata wasn’t used to boost trust in answers.

He didn’t just want an assistant.

He wanted an autonomous agent that could route intelligently, search the web, and respond with real, relevant information.

My Approach

I analyzed, optimized, and rewired the agent flow end to end:

Condition-Based Logic:

I implemented an If Condition Agent that checks whether the retrieved documents are relevant to the question. If they are not, it sends the query down a fallback path.
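In Flowise this check is a node configured in the visual editor, and a Condition Agent typically asks an LLM to judge relevance. As a simpler stand-in, the plain-Python sketch below routes on vector-store similarity scores instead. The `RetrievedDoc` type, the `choose_route` function, and the 0.75 threshold are all hypothetical and not part of Flowise.

```python
from dataclasses import dataclass

# Hypothetical cutoff; in practice you would tune it against real customer queries.
RELEVANCE_THRESHOLD = 0.75


@dataclass
class RetrievedDoc:
    text: str
    score: float  # similarity score from the vector store, assumed to be in [0, 1]


def choose_route(docs: list[RetrievedDoc], threshold: float = RELEVANCE_THRESHOLD) -> str:
    """Return "rag" if any retrieved document clears the threshold, else "web_search"."""
    if not docs:
        # Nothing retrieved at all: go straight to the fallback path.
        return "web_search"
    best = max(doc.score for doc in docs)
    return "rag" if best >= threshold else "web_search"


if __name__ == "__main__":
    docs = [RetrievedDoc("Refund policy text", 0.82), RetrievedDoc("Shipping FAQ", 0.41)]
    print(choose_route(docs))  # rag
    print(choose_route([RetrievedDoc("Unrelated page", 0.30)]))  # web_search
```

A score threshold is cheap and deterministic, while an LLM judge (closer to what a Condition Agent does) can catch documents that are similar in wording but don't actually answer the question.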

Web Search Escalation via SerpAPI:

When the Condition Agent flags low relevance, the chatbot routes the query to SerpAPI, which pulls current results from Google Search.
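A minimal sketch of the same SerpAPI call outside Flowise, using only the Python standard library. It assumes SerpAPI's `search.json` endpoint with `engine=google`, the `organic_results` field of its JSON response, and an API key stored in a `SERPAPI_API_KEY` environment variable (an assumed name). The helper functions are hypothetical.

```python
import json
import os
import urllib.parse
import urllib.request

SERPAPI_ENDPOINT = "https://serpapi.com/search.json"


def build_search_url(query: str, api_key: str) -> str:
    """Build a SerpAPI Google search URL for the given query."""
    params = {"engine": "google", "q": query, "api_key": api_key}
    return f"{SERPAPI_ENDPOINT}?{urllib.parse.urlencode(params)}"


def extract_snippets(response: dict, limit: int = 3) -> list[dict]:
    """Keep only title, link, and snippet from the first few organic results."""
    results = response.get("organic_results", [])
    return [
        {"title": r.get("title", ""), "link": r.get("link", ""), "snippet": r.get("snippet", "")}
        for r in results[:limit]
    ]


def web_search(query: str) -> list[dict]:
    """Call SerpAPI over the network (assumes SERPAPI_API_KEY is set)."""
    url = build_search_url(query, os.environ["SERPAPI_API_KEY"])
    with urllib.request.urlopen(url, timeout=10) as resp:
        return extract_snippets(json.load(resp))


if __name__ == "__main__":
    # Only builds the URL; call web_search() when a real key is available.
    print(build_search_url("flowise condition agent", "YOUR_KEY"))
```

Trimming the response to a few titles, links, and snippets keeps the next LLM prompt short and preserves the source links for citation.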

LLM Response Rewriting:

The SerpAPI results are piped into the LLM Node, which rewrites them into a natural, human-friendly response.
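One way to hand search results to the LLM is to pack them into a prompt that asks for a plain-language answer with numbered citations, which also shows the user where the information came from. The `build_rewrite_prompt` helper and its wording are a hypothetical sketch, not the prompt used in this project. The input is assumed to be a list of dicts with `title`, `link`, and `snippet` keys.

```python
def build_rewrite_prompt(question: str, results: list[dict]) -> str:
    """Build an LLM prompt that rewrites search results into a friendly answer.

    Hypothetical template: each result is a dict with "title", "link", "snippet".
    """
    if results:
        # Number each source so the model can cite it as [1], [2], ...
        sources = "\n".join(
            f"[{i}] {r['title']}: {r['snippet']} ({r['link']})"
            for i, r in enumerate(results, start=1)
        )
    else:
        sources = "(no sources found)"
    return (
        "Answer the customer's question using only the sources below. "
        "Use a friendly, concise tone and cite sources by number. "
        "If the sources do not answer the question, say so.\n\n"
        f"Question: {question}\n\n"
        f"Sources:\n{sources}\n\n"
        "Answer:"
    )


if __name__ == "__main__":
    results = [
        {"title": "Return Policy", "link": "https://example.com/returns",
         "snippet": "Items can be returned within 30 days."},
    ]
    print(build_rewrite_prompt("How long do I have to return an item?", results))
```

Telling the model to admit when the sources fall short gives the flow a natural hook for the escalation logic mentioned above.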

Improved Flow Control:

I cleaned up redundant nodes, disabled unused memory stores, and mapped feedback loops for future optimization.

The Result:

The chatbot no longer hits dead ends. Instead, it picks the right source for each question:

✅ RAG results when docs are helpful

🌐 SerpAPI fallback when docs fail

🧠 LLM-generated answers from both sources

🔁 Scalable logic that can be extended for metadata, personalization, and escalation


Posted Jul 13, 2025

Enhanced Flowise AI chatbot for better customer support and satisfaction.