BenevolentJoker-JohnL/Sheppard

First and foremost, a special thanks to:

Dallan Loomis (https://github.com/DallanL): without your interactions and heads-up, I would still be somewhat lost and trying to figure things out.

My parents: without your support, I would be dead in the water.

And my son: without you, I would be dead, period.

Benchmark Results Summary:

Research Effectiveness: 71.4/100

Memory Effectiveness: 100.0/100

System Integration: 73.3/100

Overall Score: 82.0/100

Results Interpretation:

Research Effectiveness (71.4/100):

GOOD: Research system is performing well.

Memory Effectiveness (100.0/100):

GOOD: Memory system is performing well.

Consider optimizing recall speed for better performance.

System Integration (73.3/100):

GOOD: Systems are well integrated.

Consider optimizing multi-step reasoning capabilities.

Overall System Performance (82.0/100):

GOOD: System is performing well overall.

Focus on fine-tuning specific capabilities for optimal performance.

**THESE RESULTS ONLY REFLECT THE RESEARCH FUNCTION OF THE APPLICATION

*On an i9-12900K, 32 GB of DDR5 at 6000 MHz, an A4000 16 GB GPU, running Pop!_OS on a 4 TB Gen 4 Silicon Power M.2 drive.

Hi guys,

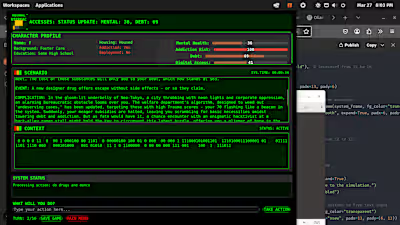

I have been studying and tinkering in the AI space for the past 2 years. I do not actually know how to code, but I am learning, and I understand enough about the software development process, and about how to manipulate AI, that I decided to try my hand at software development and create my own AI agent app. Yes, everything you will interact with when you download and install my application is AI generated; however, it was generated under my express supervision. There is currently a massive inundation of AI-generated crap (and yes, I do mean crap) that is often just assumed to be crap because, well... it is crap: the vast majority of AI-generated content is put out with zero human-in-the-loop interaction. This project should stand as a testament, and as a check, to show that quality AI content can be produced; it just needs to involve humans in the process.

I created this entire thing (and will continue to build upon it) without ANY sort of formal education in coding or programming. Everything you see is the result of sheer will and determination and a little bit of know-how with an open mind. For those wondering, Claude is the primary tool that has been used in the construction of this application. This was originally created as a bit of an experiment in replicating a more natural, human-like memory recall, and I expanded on it as I saw fit.

Unlike other AI agent applications that use a "create your own agent" approach with a 1:1 model-to-role workflow, I have taken a bit of a different approach: what if each model is simply a function of the agent?

As mentioned previously, this was originally created as a means to try to simulate a more natural recall and memory, mirroring that of humans. The system as it stands is divided into 4 (see 5) models: a main chat model, a short context model, a long context model, and an embedding model. When there is user input, the memory system queries its stored embeddings for relevant matches and hands them to the relevant context-handling model. That model summarizes the content of the embeddings and passes the summary along to the main chat model as context for its response to the user input.
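The flow described above can be sketched roughly as follows. This is only a minimal illustration, not the actual Sheppard code: the `Memory` class, the `recall` and `summarize` callables, and the 200-word cutoff for choosing between the short and long context models are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List

# Assumed cutoff (in words) for choosing the short vs. long context model.
SHORT_CONTEXT_WORD_LIMIT = 200


@dataclass
class Memory:
    """Hypothetical recalled memory: its text and its similarity to the input."""
    text: str
    score: float  # higher means closer to the user input


def pick_context_model(memories: List[Memory]) -> str:
    """Route small recalls to the short context model, large ones to the long one."""
    total_words = sum(len(m.text.split()) for m in memories)
    return "short" if total_words <= SHORT_CONTEXT_WORD_LIMIT else "long"


def build_prompt(
    user_input: str,
    recall: Callable[[str], List[Memory]],
    summarize: Callable[[str, str], str],
) -> str:
    """Recall memories, summarize them, and prepend the summary as context."""
    memories = sorted(recall(user_input), key=lambda m: m.score, reverse=True)
    if not memories:
        return user_input  # nothing relevant was recalled
    model = pick_context_model(memories)
    summary = summarize(model, "\n".join(m.text for m in memories))
    return f"Context: {summary}\n\nUser: {user_input}"


if __name__ == "__main__":
    # Stand-ins for the embedding lookup and the context model.
    fake_recall = lambda query: [Memory("User prefers Python.", 0.9)]
    fake_summarize = lambda model, text: f"[{model}] {text}"
    print(build_prompt("Which language should I use?", fake_recall, fake_summarize))
```

Passing `recall` and `summarize` in as plain callables keeps the sketch independent of any particular embedding store or model client.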

This is my attempt to work myself into a better position, as I am currently looking for work and trying to land a position within the AI field, specifically as an AI applications designer. If you or someone you know is hiring, please do not hesitate to reach out. I can be reached at: benevolentjoker@gmail.com.

Serious inquiries only.

I will be expanding upon this application and building out features. I plan on dual licensing the majority of what I develop and release, as there should be a good few features that big data/Big AI can benefit from.

In general, this is meant to democratize AI as a whole: to take the software that big data/IT has been utilizing for years and turn it over to the average individual. That, and to show the world that with just a little knowledge and the right drive, you too can accomplish anything.

Shout out to William FH aka hinthornw (https://github.com/hinthornw) for trustcall (https://github.com/hinthornw/trustcall) -- this is the basis on which later functions of the application will be built, both for autonomous tool creation and for validation of the memory system.

Also shout out to those at mendableai for creating firecrawl, which is the primary basis for the research system's workflow. Without firecrawl, the research function would basically still be in development.

Sheppard Agency

Overview

Sheppard Agency is an advanced AI assistant application that combines memory persistence, web research capabilities, and conversational intelligence. It's designed to provide users with a more contextualized and knowledgeable AI experience by remembering conversation history and actively researching information when needed.

Key Features

Memory System

Short-term Recall: Remembers recent interactions within the current conversation

Long-term Memory: Stores important details, preferences, and facts across multiple sessions

Context Awareness: Connects related information between different conversations

Research System

Web Search Integration: Connects to search engines for real-time information

Source Verification: Evaluates and cites sources for credibility

Information Synthesis: Combines multiple sources to provide comprehensive answers

Autonomous Browser: Self-healing browsing with intelligent navigation capabilities

LLM System

Conversational Interface: Natural dialogue with understanding of context and nuance

Task Execution: Can perform various tasks based on user requests

Reasoning Capabilities: Processes complex questions with step-by-step reasoning

Installation

Requirements

Python 3.10 or higher

Redis server (for memory storage)

ChromaDB (for vector embeddings)

Ollama installed locally (for language models)

Setup

Clone the repository:

Create a virtual environment:

Install dependencies:

The key dependencies include:

aiohttp

rich

selenium

beautifulsoup4

backoff

tldextract

chromadb

redis

If Firecrawl integration is desired:

Set up environment variables by creating a .env file in the project root:

Prepare Local Ollama Models:

Ensure Ollama is running locally and pull the required models:

Set up directory structure:

Start Redis Server:

Running the Application

Method 1: Direct Execution

Run the main application:

Method 2: Using the ChatApp Interface

Method 3: Using Command Line Interface

Usage

Basic Interaction

Interact with Sheppard through the command line or web interface by simply typing your questions or instructions.

Commands

/research [topic] [--depth=<1-5>] - Research a topic with specified depth

/memory [query] - Search through memory for specific information

/status - Show current system status and components

/clear [--confirm] - Clear chat history

/settings [setting] [value] - View or modify chat settings

/browse <url> [--headless] - Open browser to research specific URL

/save [type] [filename] - Save research or conversation

/preferences [action] [key] [value] - View or update user preferences

/help [command] - Display available commands

/exit - Exit the application

Using the Research System

The research system is a core feature of Sheppard Agency, providing autonomous web browsing and information extraction capabilities.

Basic Research Command

This will:

Search for information about quantum computing

Extract content from relevant websites

Analyze and summarize the findings

Display formatted results
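To make the `/research [topic] [--depth=<1-5>]` syntax concrete, here is a small sketch of how such a line might be parsed. It is not taken from core/commands.py; the `ResearchCommand` type, the `parse_research` function, and the default depth of 2 are assumptions for illustration.

```python
import shlex
from typing import NamedTuple


class ResearchCommand(NamedTuple):
    """Hypothetical parsed form of a /research line."""
    topic: str
    depth: int


def parse_research(line: str, default_depth: int = 2) -> ResearchCommand:
    """Parse '/research <topic> [--depth=N]' into a topic and a depth of 1-5."""
    parts = shlex.split(line)
    if not parts or parts[0] != "/research":
        raise ValueError("not a /research command")
    depth = default_depth  # assumed default when --depth is omitted
    topic_words = []
    for part in parts[1:]:
        if part.startswith("--depth="):
            depth = int(part.split("=", 1)[1])
        else:
            topic_words.append(part)
    if not topic_words:
        raise ValueError("a topic is required")
    if not 1 <= depth <= 5:
        raise ValueError("depth must be between 1 and 5")
    return ResearchCommand(" ".join(topic_words), depth)


if __name__ == "__main__":
    print(parse_research("/research quantum computing --depth=3"))
```

Rejecting an out-of-range depth up front gives the user an immediate error instead of a research run that silently falls back to some other depth.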

Using the ResearchSystem Programmatically

Using Autonomous Browser Mode

The system includes an AutonomousBrowser class that provides enhanced browsing capabilities with self-healing and intelligent navigation:

The autonomous browser includes several advanced features:

Self-healing: Automatically recovers from navigation errors

Intelligent retries: Uses different strategies for different error types

Content filtering: Identifies and filters low-quality content

Link prioritization: Prioritizes links based on relevance to the topic

Rate limiting: Applies dynamic rate limiting to avoid blocking
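As a rough idea of what "different strategies for different error types" can look like, the sketch below retries a fetch and picks the wait time based on the kind of error. It does not reflect the real AutonomousBrowser internals: the `RateLimited` and `PageLoadFailed` exceptions, the `fetch_with_recovery` function, and the delay values are all made up for the example.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error: the site asked us to slow down."""


class PageLoadFailed(Exception):
    """Hypothetical error: the page did not load or timed out."""


def fetch_with_recovery(
    fetch: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `fetch`, choosing the wait time by the kind of error raised."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except RateLimited as err:
            last_error = err
            # Rate limits: back off exponentially, with jitter to spread requests.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay)
        except PageLoadFailed as err:
            last_error = err
            # Load failures: a short fixed pause is usually enough.
            delay = base_delay
        if attempt == max_attempts:
            raise last_error  # out of attempts, surface the last error
        sleep(delay)
    raise AssertionError("unreachable")
```

Any other exception type is not caught and propagates immediately, so only the errors the caller knows how to recover from are retried.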

Deep Analysis Mode

For more comprehensive research:

The deep analysis mode provides:

More thorough content extraction

Higher validation standards for sources

Comprehensive cross-source analysis

Citation extraction and verification

Firecrawl Integration

To enable Firecrawl integration for enhanced content extraction:

Set the FIRECRAWL_API_KEY environment variable with your API key

Create a FirecrawlConfig instance with your desired settings

Pass it to the ResearchSystem constructor
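The first step above (reading the API key) can be sketched like this. Only the FIRECRAWL_API_KEY variable name comes from this README; `FirecrawlSettings` is a hypothetical stand-in, and the real FirecrawlConfig class may have different fields, so check research/ for its actual signature before relying on this.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class FirecrawlSettings:
    """Hypothetical stand-in; the project's FirecrawlConfig may look different."""
    api_key: str
    timeout_seconds: int = 30  # assumed default, not taken from the project


def load_firecrawl_settings(env: Mapping[str, str] = os.environ) -> FirecrawlSettings:
    """Read FIRECRAWL_API_KEY and fail early with a clear message if it is unset."""
    api_key = env.get("FIRECRAWL_API_KEY", "").strip()
    if not api_key:
        raise RuntimeError("FIRECRAWL_API_KEY is not set")
    return FirecrawlSettings(api_key=api_key)
```

Failing at startup when the key is missing is easier to debug than a rejected request in the middle of a research run.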

Memory Management

Memory Persistence

Embedding Generation: Uses mxbai-embed-large via Ollama for fixed-size embeddings

Database Storage: Stores embeddings in ChromaDB with optional PostgreSQL and Redis integrations
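For reference, generating an embedding from a local Ollama server can look roughly like the sketch below. It uses only the standard library and Ollama's `/api/embeddings` endpoint; the function names are hypothetical and this is not the code in llm/client.py. It assumes Ollama is listening on its default port and that the model has already been pulled.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434"  # default local Ollama address
EMBEDDING_MODEL = "mxbai-embed-large"


def build_embedding_request(text: str) -> urllib.request.Request:
    """Build the POST request that asks Ollama to embed one piece of text."""
    payload = json.dumps({"model": EMBEDDING_MODEL, "prompt": text}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/embeddings",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(text: str, timeout: float = 30.0) -> List[float]:
    """Send the request and return the embedding vector from the response."""
    with urllib.request.urlopen(build_embedding_request(text), timeout=timeout) as resp:
        return json.load(resp)["embedding"]
```

The returned list of floats is what would then be stored in ChromaDB alongside the original text.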

Context Summarization

Short Context Summarization: Uses Azazel-AI/llama-3.2-1b-instruct-abliterated.q8_0 for quick summarization

Long Context Summarization: Uses krith/mistral-nemo-instruct-2407-abliterated:IQ3_M for extended summaries

Configuration Options

Memory System Settings

| Setting | Description | Default | Type |
| --- | --- | --- | --- |
| MEMORY_PROVIDER | Backend storage provider | redis | string |
| MEMORY_RETENTION_PERIOD | Days to keep memories | 90 | integer |
| MEMORY_IMPORTANCE_THRESHOLD | Minimum importance score to store memory | 0.5 | float |
| EMBEDDING_MODEL | Model used for vectorizing text | mxbai-embed-large | string |
| MEMORY_CHUNK_SIZE | Maximum token size for memory chunks | 500 | integer |

Research System Settings

| Setting | Description | Default | Type |
| --- | --- | --- | --- |
| RESEARCH_PROVIDER | Search API provider | serpapi | string |
| RESEARCH_MAX_SOURCES | Maximum sources per query | 5 | integer |
| RESEARCH_MAX_DEPTH | How deep to search (1-3) | 2 | integer |
| RESEARCH_CACHE_HOURS | Hours to cache research results | 24 | integer |
| SOURCE_CITATION_STYLE | Citation format for sources | mla | string |

LLM System Settings

| Setting | Description | Default | Type |
| --- | --- | --- | --- |
| LLM_PROVIDER | LLM API provider | ollama | string |
| LLM_MODEL | Default language model | mannix/dolphin-2.9-llama3-8b:latest | string |
| MAX_CONTEXT_TOKENS | Maximum token context window | 8192 | integer |
| TEMPERATURE | Creativity of responses (0-2) | 0.7 | float |
| SYSTEM_PROMPT | Base system instructions | see prompts.py | string |

Architecture

Sheppard follows a modular architecture with three main systems:

Memory System:

memory/manager.py - Central memory coordination

memory/models.py - Data structures for memory

memory/storage/ - Storage backends (Redis, ChromaDB)

Research System:

research/system.py - Research orchestration

research/models.py - Data structures for research

research/browser_manager.py - Browser automation

research/browser_control.py - Enhanced autonomous browser

research/content_processor.py - Content extraction and analysis

LLM System:

llm/system.py - LLM coordination

llm/client.py - Ollama client

Core:

core/chat.py - Main application logic

core/commands.py - Command handling

core/system.py - System management

Troubleshooting

Common Issues:

Browser Automation Errors:

Ensure you have ChromeDriver installed or use the built-in undetected_chromedriver.

Set headless=True for server environments.

Ollama API Connection Issues:

Verify Ollama is running: curl http://localhost:11434/api/version

Ensure required models are downloaded.

Memory Storage Errors:

Check Redis connection with redis-cli ping

Ensure ChromaDB persistence directory exists and is writable.

Firecrawl Integration Issues:

Verify your API key is valid.

Check for rate limiting issues.
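If you would rather run the Ollama, Redis, and ChromaDB checks from Python, something like the sketch below covers the same ground as the curl and redis-cli commands. These helper functions are not part of Sheppard; they assume default local ports, a Redis server without a password, and that you pass in whatever ChromaDB persistence directory you configured.

```python
import json
import os
import socket
import urllib.error
import urllib.request


def ollama_is_up(base_url: str = "http://localhost:11434", timeout: float = 3.0) -> bool:
    """Return True if GET /api/version answers with a JSON body naming a version."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return "version" in json.load(resp)
    except (urllib.error.URLError, OSError, ValueError):
        return False


def redis_is_up(host: str = "localhost", port: int = 6379, timeout: float = 3.0) -> bool:
    """Send an inline PING and expect +PONG (assumes no password is required)."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.sendall(b"PING\r\n")
            return conn.recv(64).startswith(b"+PONG")
    except OSError:
        return False


def dir_is_writable(path: str) -> bool:
    """Return True if `path` is an existing directory the current user can write to."""
    return os.path.isdir(path) and os.access(path, os.W_OK)


if __name__ == "__main__":
    print("Ollama:", ollama_is_up())
    print("Redis:", redis_is_up())
    print("logs/ writable:", dir_is_writable("logs"))
```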

Logs

Logs are stored in the logs/ directory by default. Set LOG_LEVEL=DEBUG for verbose logging.

IN PROGRESS------------------------------------------------------------

Uploading Multiple Files and Directories as a Single Project

Method 1: Import Existing Directory Structure

The most direct way is to use the import command:

The import function recursively copies all files from the source directory into your project, maintaining the directory structure.
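Since this feature is still in progress, the following is only a sketch of the copy step using the standard library. The `import_project` name and its parameters are hypothetical and the real import command may behave differently.

```python
import shutil
from pathlib import Path


def import_project(source_dir: str, projects_dir: str, name: str) -> Path:
    """Recursively copy source_dir into projects_dir/name, keeping its layout."""
    source = Path(source_dir)
    if not source.is_dir():
        raise NotADirectoryError(source_dir)
    target = Path(projects_dir) / name
    # copytree creates missing parent directories and raises FileExistsError
    # if the target already exists, so an existing project is never overwritten.
    shutil.copytree(source, target)
    return target
```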

Method 2: Upload Files in Conversation

You can upload multiple files directly in the conversation with Claude:

Upload all your files to Claude (select multiple files in the upload dialog)

Create a project and use those files:

Method 3: Batch Import for Large Projects

For very large projects, you could use this approach:

Real Example: Importing the Current Project

Let's say you want to import the chat application code into a Code Arena project:

After these steps, the Code Arena will:

Have loaded all your code files into the project

Maintain the directory structure from your original project

Have analyzed all the relationships between modules

Be ready to discuss, modify, and help you develop the application

How the System Handles the Files

Behind the scenes, the Code Arena:

Creates a project directory in its projects_dir location

Copies all files from the source directory, maintaining the structure

Indexes all Python files for analysis

Extracts function and class definitions to understand relationships

Creates a virtual environment specific to this project for proper execution
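The indexing and extraction steps in that list could be done with Python's built-in `ast` module, along the lines of the sketch below. This is an illustration of the idea, not the Code Arena implementation; `index_definitions` and `index_project` are hypothetical names.

```python
import ast
from pathlib import Path
from typing import Dict, List


def index_definitions(source: str) -> Dict[str, List[str]]:
    """Return the names of classes and functions defined in Python source text."""
    found: Dict[str, List[str]] = {"classes": [], "functions": []}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.ClassDef):
            found["classes"].append(node.name)
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            found["functions"].append(node.name)
    return found


def index_project(project_dir: str) -> Dict[str, Dict[str, List[str]]]:
    """Index every .py file under project_dir, keyed by its relative path."""
    root = Path(project_dir)
    index: Dict[str, Dict[str, List[str]]] = {}
    for path in sorted(root.rglob("*.py")):
        try:
            text = path.read_text(encoding="utf-8")
            index[str(path.relative_to(root))] = index_definitions(text)
        except (SyntaxError, UnicodeDecodeError):
            continue  # skip files that cannot be read or parsed
    return index
```

Parsing with `ast` reads the files without executing them, so indexing an unfamiliar project does not run any of its code.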

Once your files are imported, you can interact with them through commands like:

IN PROGRESS------------------------------------------------------------

The system maintains full context of your project structure, so when you ask questions or request changes, it can consider how those changes affect the entire codebase, not just individual files.

Adding New Features

To extend Sheppard with new capabilities:

New Memory Storage:

Create a new provider in memory/storage/

Implement the BaseStorage interface

New Research Provider:

Add a new provider in research/providers/

Implement the BaseResearchProvider interface

New LLM Integration:

Create a new provider in llm/providers/

Implement the BaseLLMProvider interface

Contributing

Contributions are welcome! Please feel free to submit a Pull Request.

Fork the repository

Create your feature branch (git checkout -b feature/amazing-feature)

Commit your changes (git commit -m 'Add some amazing feature')

Push to the branch (git push origin feature/amazing-feature)

Open a Pull Request

Licensing

This project uses a dual-licensing approach:

Core functionality is licensed under the Mozilla Public License 2.0

Enterprise features require a commercial license. Contact us at benevolentjoker@gmail.com

Enterprise features include: custom fine-tuning feature tracking, fine-tuning data preparation, model fine-tuning over time, memory expansion for long-term memory including PostgreSQL, loading multiple files in as a single project for code development, inference training on PostgreSQL via use

For more details, see LICENSE-CORE.txt and COMMERCIAL-LICENSE.md

Posted Apr 9, 2025

Sheppard is an AI agent for Ollama, handling memory, automation, and tool creation using Redis, PostgreSQL, and ChromaDB.

Timeline

Oct 22, 2024 - Ongoing