Image Segmentation for Object Recognition

Technical Description:

1. Project Overview:

The project performs image segmentation on map images, using YOLO, a real-time object detection model, to locate and classify elements such as plots, roads, and other features.

2. Technologies Used:

YOLO (You Only Look Once) for object detection.

Flask for creating the web-based front end.

Python as the primary programming language.

OpenCV for image processing.

3. Workflow:

a. YOLO Model:

The YOLO model is trained on a dataset of annotated map images, in which each plot, road, or other feature is labeled with its class and bounding box. Training optimizes the model to localize and classify these features, and the trained weights are then used for inference.
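YOLO-style training data usually pairs each image with a text file holding one line per object: a class index followed by the box center, width, and height, all normalized to [0, 1] by the image size. The sketch below converts a pixel-space box into such a line. The class names and their order (`plot`, `road`, `other`) are assumptions for illustration, not the project's actual label map.

```python
# Hypothetical class map; the project's real label order is not documented.
CLASS_IDS = {"plot": 0, "road": 1, "other": 2}


def to_yolo_label(class_name, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    YOLO-format labels store: class_id x_center y_center width height,
    with all four coordinates normalized by the image width/height.
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{CLASS_IDS[class_name]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A 300x100 px road segment in a 1000x500 px map tile.
    print(to_yolo_label("road", (100, 50, 400, 150), 1000, 500))
    # → 1 0.250000 0.200000 0.300000 0.200000
```

Normalizing by image size keeps the labels valid when the training pipeline resizes map tiles to the model's input resolution.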

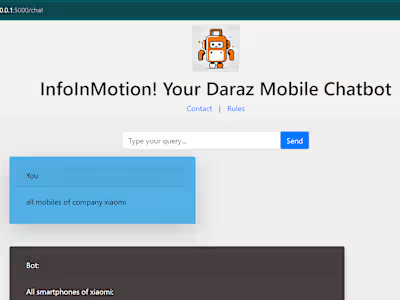

b. Flask Front End:

The front end is built with Flask, a Python web framework. Users interact with the system through a web interface, where they upload map images for segmentation.
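Before an upload reaches the model, the Flask route can reject files that are not images. The sketch below is a hypothetical, stdlib-only helper: the accepted formats (PNG and JPEG) are an assumption, and it checks both the filename extension and the file's leading "magic" bytes, since an extension alone can be spoofed. In a Flask view it could be called with `f.filename` and `f.stream.read(8)` (followed by `f.stream.seek(0)`), where `f = request.files["file"]`; the form field name `"file"` is likewise an assumption.

```python
import os

# Assumed set of accepted formats; the original project does not list them.
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg"}

# File signatures ("magic numbers") for the accepted formats.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_SIGNATURE = b"\xff\xd8\xff"


def is_supported_upload(filename, head):
    """Return True if `filename` has an allowed extension and `head`
    (the first bytes of the file) matches that format's signature."""
    ext = os.path.splitext(filename or "")[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        return False
    if ext == ".png":
        return head.startswith(PNG_SIGNATURE)
    # Remaining allowed extensions are .jpg and .jpeg.
    return head.startswith(JPEG_SIGNATURE)
```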

c. Image Segmentation:

When a user uploads a map image, the Flask application sends the image to the YOLO model for inference. The YOLO model processes the image and returns bounding boxes around detected objects along with their class labels.
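Raw YOLO predictions typically contain many overlapping candidate boxes, so inference pipelines filter them by confidence and then apply non-maximum suppression (NMS) before returning the final boxes and labels. Libraries such as Ultralytics perform this step internally; the pure-Python sketch below only illustrates the idea. The `Detection` tuple and the 0.25/0.45 thresholds are assumptions, not values taken from the project.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    label: str


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets: List[Detection], conf_thres: float = 0.25,
                iou_thres: float = 0.45) -> List[Detection]:
    """Drop low-confidence boxes, then run greedy NMS separately per class."""
    candidates = sorted((d for d in dets if d.score >= conf_thres),
                        key=lambda d: d.score, reverse=True)
    kept: List[Detection] = []
    for d in candidates:
        # Keep d unless it overlaps a higher-scoring box of the same class.
        if all(k.label != d.label or iou(k, d) <= iou_thres for k in kept):
            kept.append(d)
    return kept


if __name__ == "__main__":
    dets = [
        Detection(10, 10, 110, 60, 0.90, "road"),
        Detection(12, 11, 112, 62, 0.80, "road"),     # near-duplicate: suppressed
        Detection(12, 11, 112, 62, 0.70, "plot"),     # other class: kept
        Detection(300, 300, 350, 340, 0.10, "plot"),  # low confidence: dropped
    ]
    print([d.label for d in postprocess(dets)])  # → ['road', 'plot']
```

Running NMS per class means a plot and a road that overlap on the map are both kept, while duplicate boxes for the same road are merged into one.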

d. Result Visualization:

The Flask application displays the processed map with bounding boxes drawn around the identified plots, roads, and other elements. It also counts the detections in each category and shows these totals on the front end.
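The per-category totals amount to a frequency count over the class labels of the final detections. A minimal sketch, assuming each detection's label is available as a string and that the category list is `plot`, `road`, `other` (an assumption; the project does not enumerate its classes). Categories with no detections are reported as 0 so the front end always shows the same rows; the resulting dictionary could then be passed to Flask's `render_template` for display.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence

# Assumed category list; the project's full class list is not documented.
CATEGORIES = ("plot", "road", "other")


def count_by_category(labels: Iterable[str],
                      categories: Sequence[str] = CATEGORIES) -> Dict[str, int]:
    """Return {category: count}, known categories first in a fixed order."""
    counts = Counter(labels)
    # Start from the known categories so empty ones still appear as 0.
    summary = {c: counts.get(c, 0) for c in categories}
    # Append any unexpected labels the model produced, so nothing is lost.
    for label, n in counts.items():
        summary.setdefault(label, n)
    return summary


if __name__ == "__main__":
    print(count_by_category(["road", "plot", "road", "road"]))
    # → {'plot': 1, 'road': 3, 'other': 0}
```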

Posted Dec 28, 2023

Used YOLO-based image segmentation to identify and count objects such as plots and roads in map images, served through a Flask web interface.
