My AI Animation Process

First, please enjoy this animation:

Making Animation

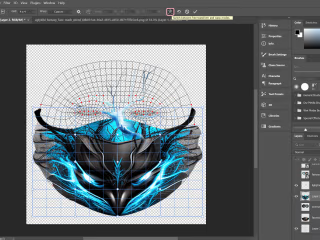

Use Blender to create a 3D animation as input for ControlNet.

Use Stable Diffusion to apply the art style.

After the animation is generated, use DaVinci Resolve to deflicker it.

First, I create a 3D animation of a walking human in Blender. This animation is later used as the input for ControlNet.

Next, I brainstorm and experiment with different art styles, refining the prompts until I find the style that best suits the animation.

Once the style is chosen and the seed is fixed, it is time to generate the animation.
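To illustrate what "style chosen, seed set" can look like in practice, here is a minimal Python sketch that plans one generation job per frame, reusing the same prompt and seed throughout so the look stays consistent across the animation. The folder names, file naming pattern, prompt text, and seed value are all hypothetical placeholders, not the settings used for this project, and the sketch only builds the job list; it does not call Stable Diffusion or ControlNet.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class FrameJob:
    """Settings for generating one stylized frame (hypothetical structure)."""
    index: int
    control_image: Path   # frame rendered from the Blender animation
    output_image: Path    # where the stylized frame would be saved
    prompt: str
    seed: int


def build_jobs(frame_count, prompt, seed,
               control_dir="blender_frames", output_dir="sd_frames"):
    """Create one job per frame, all sharing the same prompt and seed."""
    jobs = []
    for i in range(1, frame_count + 1):
        name = f"{i:04d}.png"  # assumed naming: 0001.png, 0002.png, ...
        jobs.append(FrameJob(
            index=i,
            control_image=Path(control_dir) / name,
            output_image=Path(output_dir) / name,
            prompt=prompt,
            seed=seed,
        ))
    return jobs


# Placeholder prompt and seed, for illustration only.
jobs = build_jobs(300, "watercolor painting of a person walking", seed=12345)
print(len(jobs), "jobs planned; first control image:", jobs[0].control_image.name)
```

Keeping the prompt and seed in one place makes it easy to rerun the whole batch after tweaking either one.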

Typically, it takes around one and a half hours to generate 300 frames.
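As a rough back-of-the-envelope check, the figure above works out to about 18 seconds per frame. The small Python sketch below does that arithmetic and uses it to estimate other frame counts. The 24 fps playback rate is an assumption for illustration only, and actual generation time depends on hardware, resolution, and sampler settings.

```python
def seconds_per_frame(total_hours, frame_count):
    """Average generation time per frame, in seconds."""
    return total_hours * 3600 / frame_count


def estimated_hours(frame_count, sec_per_frame):
    """Estimated total generation time, in hours, at a given per-frame cost."""
    return frame_count * sec_per_frame / 3600


def clip_seconds(frame_count, fps):
    """Length of the finished clip, in seconds, at a given playback rate."""
    return frame_count / fps


per_frame = seconds_per_frame(1.5, 300)  # figures from the text above
print(f"Per frame: {per_frame:.0f} s")
print(f"600 frames would take about {estimated_hours(600, per_frame):.1f} h")
# 24 fps is an assumed playback rate, not stated in the article.
print(f"300 frames at 24 fps is {clip_seconds(300, 24):.1f} s of footage")
```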

Making Background Music

Compose the melody and conceptualize the lyrics for the song.

Use ChatGPT to generate the complete lyrics from that concept.

Use Synthesizer V Studio to generate the AI vocal for the song.

Finish the music arrangement in Ableton Live.

Here are the lyrics generated by ChatGPT:

Don’t you see the diamond

Reflecting your eyes

A symbol of our dreams

No one can deny

Don’t you see the ruby

Captures your smile

Embrace our inner fire,

And let your passions shine.

What Else? Multiple Styles at Once

A remarkable aspect of AI is its ability to generate diverse styles quickly.

The image below demonstrates four distinct styles derived from the 3D model.

Keep reading this article for additional AI animation tutorials:

Posted Jun 7, 2023

A step-by-step breakdown of my AI art process.