Car Selling Price Prediction Model

Car Selling Price Prediction

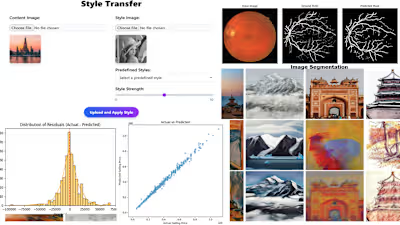

This project focuses on building machine learning models to accurately predict the selling price of used cars. It explores data preprocessing, feature engineering, regularization techniques, model comparison, and hyperparameter tuning using cross-validation.

Project Workflow

1. Exploratory Data Analysis (EDA)

Removed missing and duplicate rows

Plotted distributions of numeric and categorical features

Checked correlations and multicollinearity

2. Data Preprocessing

Log-transformed skewed features:

selling_price, km_driven, engineHandled outliers using IQR and percentile methods

One-hot encoded categorical features

Standardized numerical values using

StandardScalerBuilt preprocessing pipelines using

ColumnTransformer3. Feature Engineering

Derived new features:

car_age = current_year - yearprice_per_km = selling_price / km_drivenengine_per_seat = engine / seatsIn some experiments, source columns like

year, km_driven, engine were dropped to observe the impact4. Model Training & Evaluation

All models were trained using pipelines and evaluated using cross-validation.

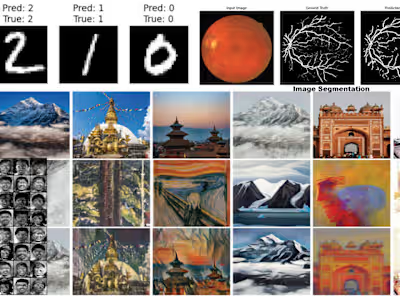

Linear Regression (with and without L1/L2 regularization)

Random Forest Regressor

XGBoost Regressor

LightGBM Regressor

Regularization:

Lasso (L1) used for automatic feature selection

Ridge (L2) used to reduce coefficient variance

ElasticNet (L1 + L2) found best parameters:

5. Outlier Impact

With Outliers:

Linear Regression: MAE = 101,229.81, R² = 0.714

Random Forest: MAE = 90,691.03, R² = 0.825

XGBoost: MAE = 89,614.51, R² = 0.846

LightGBM: MAE = 90,948.43, R² = 0.846

Without Outliers:

Linear Regression: MAE = 80,589.06, R² = 0.849

Random Forest: MAE = 71,006.79, R² = 0.860

XGBoost: MAE = 72,881.67, R² = 0.866

LightGBM: MAE = 73,807.30, R² = 0.845

Tuned XGBoost Model

Best parameters via GridSearchCV:

Best CV R²: 0.9930

Test Set: R² = 0.9933, MAE = 11480.94

Saved as

xgboost_pipeline_tuned.pklFinal Evaluation (Best Model)

MetricValue MAE11480.94 MSE284920425.62 RMSE16879.59 R²0.9933 MAPE3.15%

Experiments with Feature Engineering

When only new features were used and original columns dropped:

Test R²: 0.8577

MAPE: 15.24%

When both original + derived features were used:

Test R²: ~0.9933

MAPE: 3.15%

Conclusion: Keeping both raw and derived features provided the highest predictive power. Removing source columns (like

year, engine) decreased accuracy.How to Run

Future Enhancements

Use

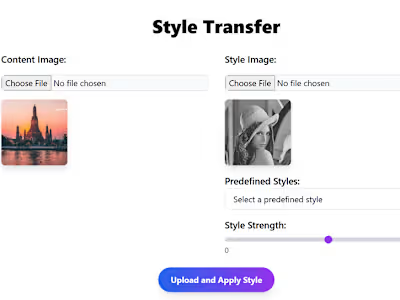

Optuna or BayesianOptimization for smarter tuningDeploy using Flask or Streamlit

Visualize SHAP or LIME for explainable AI

Try stacking or ensembling models

Credits

Developed by Abhijeet K.C. during the ML Project (2025). Dataset:

car.csvHappy Coding!

Like this project

Posted Jul 27, 2025

Built ML models to predict used car prices with high accuracy.

Likes

0

Views

0

Timeline

Jul 1, 2025 - Jul 6, 2025

Clients

Aakash Group