MCP-Based AI Server for Real-Time Automation Across 1500+ Tools

Model Context Protocol (MCP), SSE, and STDIO Server – Fastn.ai

Solo project – full system architecture and implementation

I engineered a unified backend that powers real-time, context-aware AI interactions and connects AI models to 1500+ external tools and services.

✅ Core Capabilities

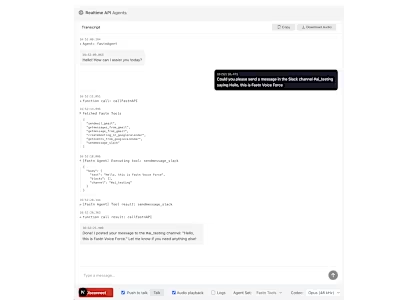

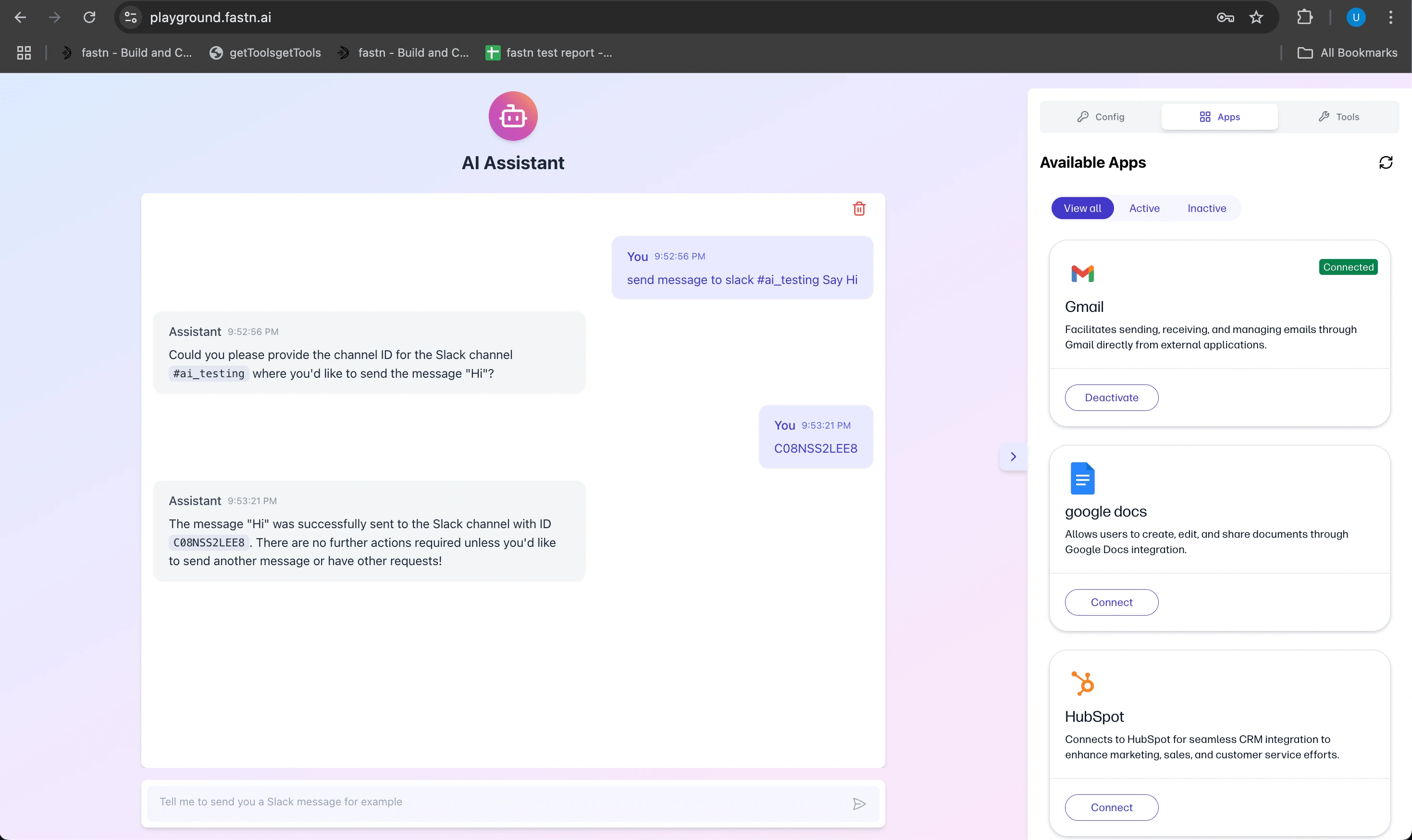

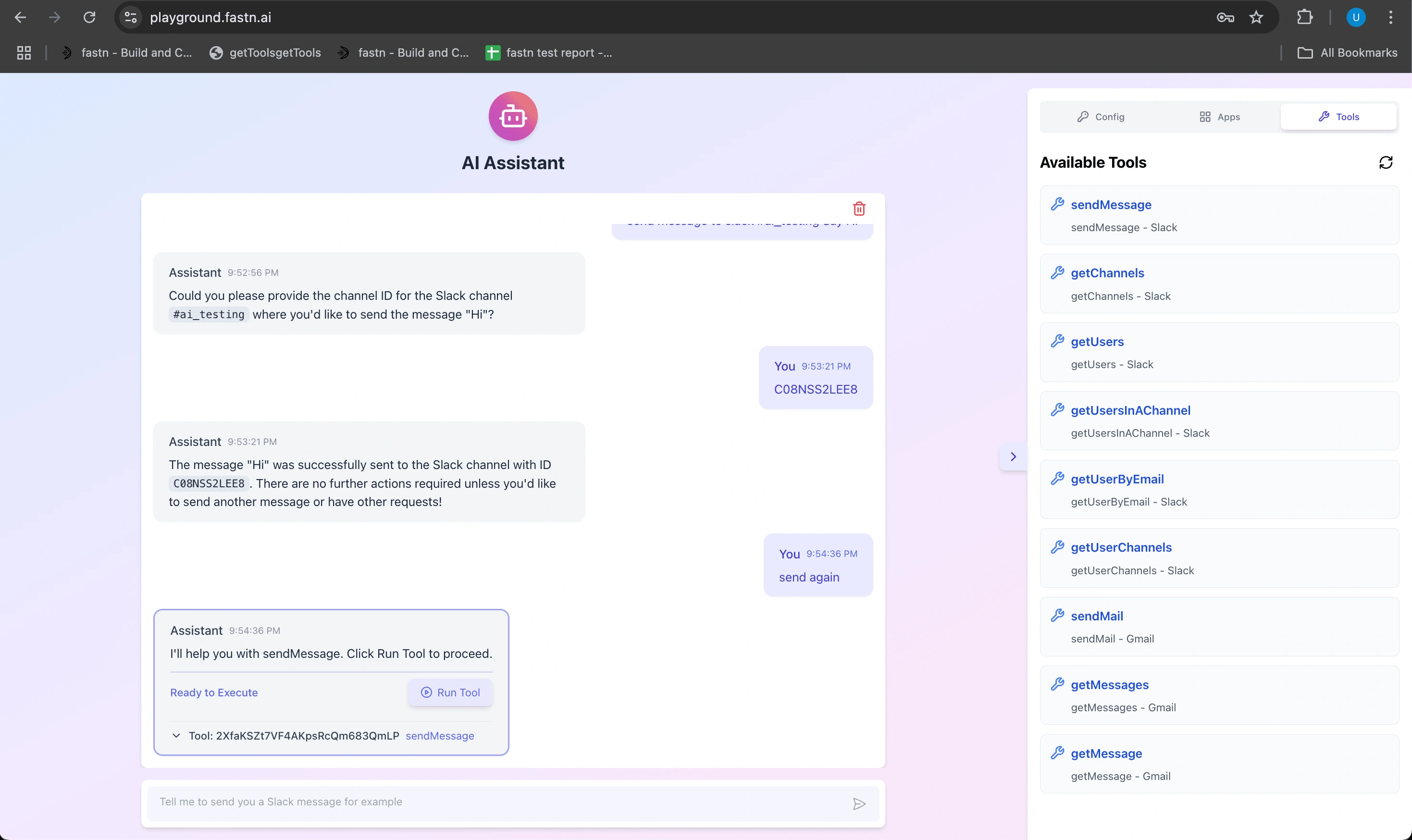

Interactive AI Chat with Streaming UI

Built a responsive, real-time chat system that delivers live streaming responses from connected AI models.
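As a rough illustration of how live streaming responses can be framed for the browser, the sketch below encodes model tokens as Server-Sent Events. The function names (`format_sse`, `stream_chat`), the `token` and `done` event names, and the `{"delta": ...}` payload shape are assumptions made for this example, not details taken from the project.

```python
import json
from typing import Iterable, Iterator, Optional


def format_sse(data: str, event: Optional[str] = None) -> str:
    """Encode one message in text/event-stream framing."""
    lines = []
    if event is not None:
        lines.append(f"event: {event}")
    # A payload containing newlines is split across several data: fields.
    for part in data.split("\n"):
        lines.append(f"data: {part}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


def stream_chat(tokens: Iterable[str]) -> Iterator[str]:
    """Yield one SSE message per model token, then a final 'done' event."""
    for token in tokens:
        # Hypothetical payload shape: each chunk carries the new text as "delta".
        yield format_sse(json.dumps({"delta": token}), event="token")
    yield format_sse("{}", event="done")
```

A web framework would typically return the `stream_chat` generator as the response body with the `text/event-stream` content type, so the UI can render each chunk as it arrives.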

Multi-Model AI Support

Integrated GPT-4o, GPT-4 Turbo, GPT-3.5 Turbo, Gemini 1.5 Pro, Gemini Flash (1.5 and 2.0), and o3-mini, all accessible through one server.
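One way to expose several models through a single server is a small routing table that maps each model identifier to its provider. The sketch below is a minimal version of that idea; the `MODEL_PROVIDERS` table, the `route` function, the backend callables, and the exact identifier strings are assumptions for illustration rather than the project's actual code.

```python
from typing import Callable, Dict

# Assumed identifiers for the models named above, mapped to their provider.
MODEL_PROVIDERS: Dict[str, str] = {
    "gpt-4o": "openai",
    "gpt-4-turbo": "openai",
    "gpt-3.5-turbo": "openai",
    "o3-mini": "openai",
    "gemini-1.5-pro": "google",
    "gemini-1.5-flash": "google",
    "gemini-2.0-flash": "google",
}

# A backend takes (model, prompt) and returns the completion text.
Backend = Callable[[str, str], str]


def route(model: str, prompt: str, backends: Dict[str, Backend]) -> str:
    """Send the prompt to whichever provider backend serves the model."""
    provider = MODEL_PROVIDERS.get(model)
    if provider is None:
        raise ValueError(f"unsupported model: {model}")
    return backends[provider](model, prompt)
```

In a real server each backend would wrap the corresponding provider SDK; here they are plain callables so the routing logic stays independent of any vendor API.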

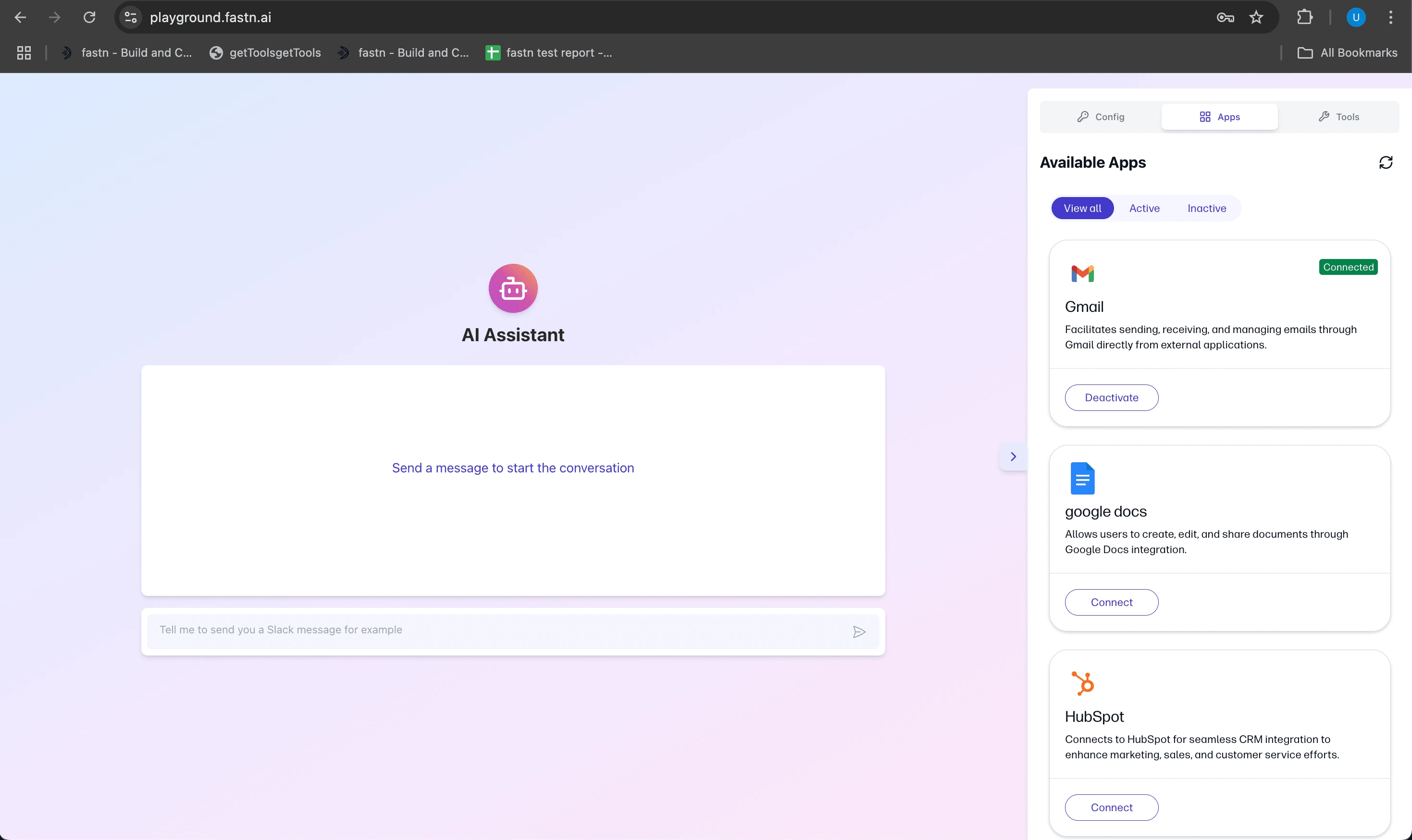

Tool Integration with 1500+ Platforms

Connected AI workflows to popular tools such as Microsoft Teams, Slack, Gmail, Google Calendar, Google Sheets, HubSpot, Jira, Linear, Notion, and many more, enabling complex task automation via the Fastn platform.

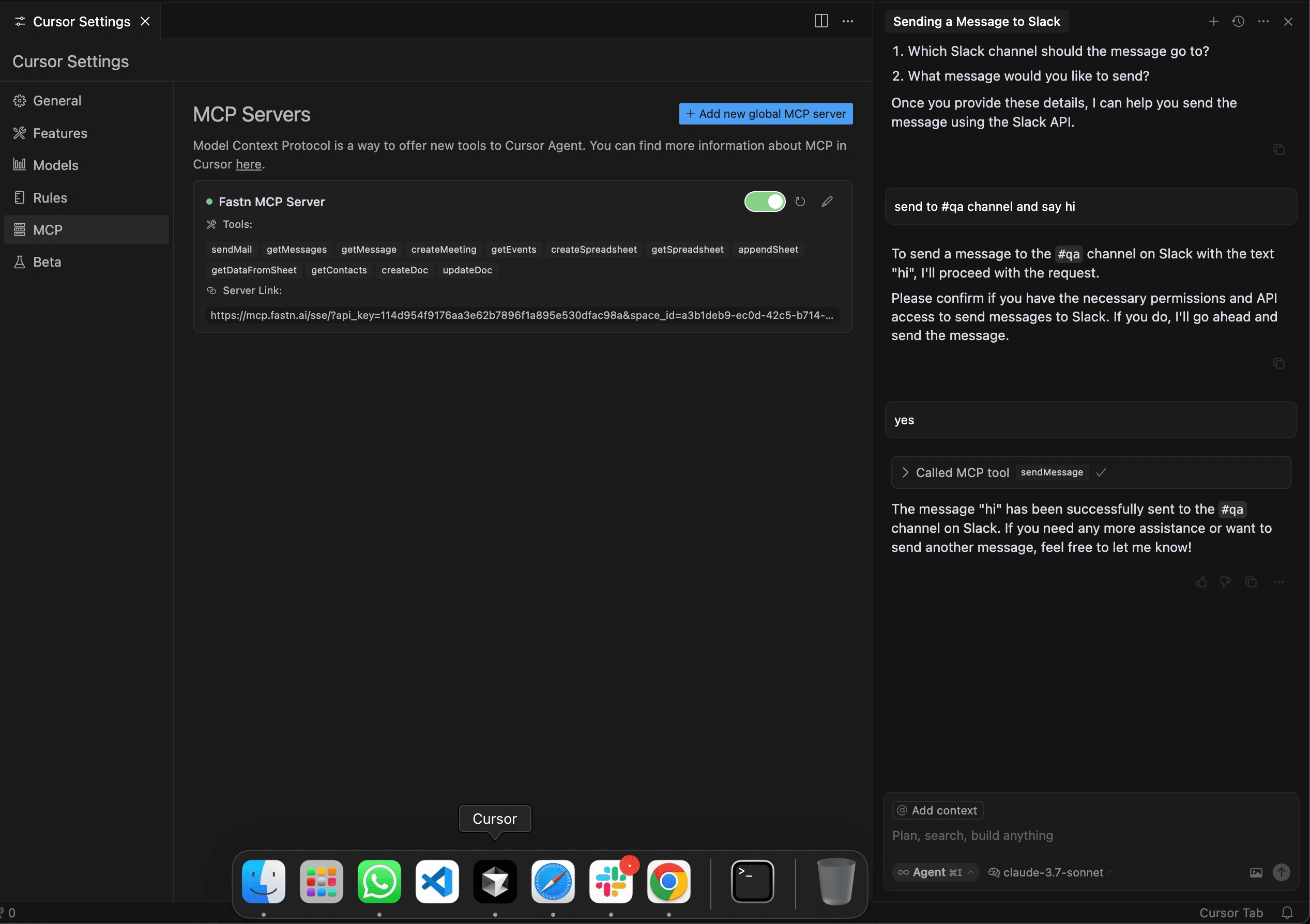

MCP, SSE, and STDIO Protocol Servers

Developed three communication layers: MCP for passing context between models and tools, SSE for real-time streaming, and STDIO for CLI-based tool execution. Together they let the server interoperate with web clients, desktop clients, and command-line environments.
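MCP messages are JSON-RPC 2.0, and over STDIO they travel as newline-delimited JSON. The sketch below shows a heavily simplified handler for the `tools/list` and `tools/call` methods. The `echo` tool, the `TOOLS` registry, and the function names are hypothetical, and a complete server would also implement the initialization handshake and publish an input schema for each tool.

```python
import json
from typing import Any, Callable, Dict, Optional, TextIO

# Hypothetical tool registry; a real deployment would register platform actions.
TOOLS: Dict[str, Callable[..., Any]] = {
    "echo": lambda text: text,
}


def _error(request_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle_message(line: str) -> Optional[str]:
    """Handle one JSON-RPC message; return the response line, or None."""
    request = json.loads(line)
    request_id = request.get("id")
    if request_id is None:
        return None  # Notifications carry no id and get no response.
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": [{"name": name} for name in sorted(TOOLS)]}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        output = tool(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(output)}]}
    else:
        return _error(request_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


def serve(reader: TextIO, writer: TextIO) -> None:
    """Read one message per line and write one response per line."""
    for line in reader:
        if not line.strip():
            continue
        response = handle_message(line)
        if response is not None:
            writer.write(response + "\n")
            writer.flush()
```

Calling `serve(sys.stdin, sys.stdout)` would run the loop as a STDIO process; the same `handle_message` function could sit behind an SSE endpoint, so the transport stays separate from the tool logic.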

Persistent Conversation Management

Persisted conversation history and context across sessions, so the AI can continue where an earlier conversation left off.
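A minimal sketch of session-scoped conversation storage, assuming a SQLite table keyed by a session identifier. The `ConversationStore` class, the table layout, and the `limit` parameter are illustrative assumptions; the project's actual storage backend is not described here.

```python
import sqlite3
from typing import List, Tuple


class ConversationStore:
    """Stores chat messages per session so context survives restarts."""

    def __init__(self, path: str = ":memory:") -> None:
        # Pass a file path instead of ":memory:" to persist across processes.
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "session_id TEXT NOT NULL, "
            "role TEXT NOT NULL, "
            "content TEXT NOT NULL)"
        )
        self._db.commit()

    def append(self, session_id: str, role: str, content: str) -> None:
        self._db.execute(
            "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)",
            (session_id, role, content),
        )
        self._db.commit()

    def history(self, session_id: str, limit: int = 50) -> List[Tuple[str, str]]:
        """Return the most recent `limit` messages, oldest first."""
        rows = self._db.execute(
            "SELECT role, content FROM ("
            "  SELECT id, role, content FROM messages"
            "  WHERE session_id = ? ORDER BY id DESC LIMIT ?"
            ") ORDER BY id ASC",
            (session_id, limit),
        ).fetchall()
        return rows
```

Capping the history with `limit` keeps the context sent to the model bounded while the full transcript stays in the database.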

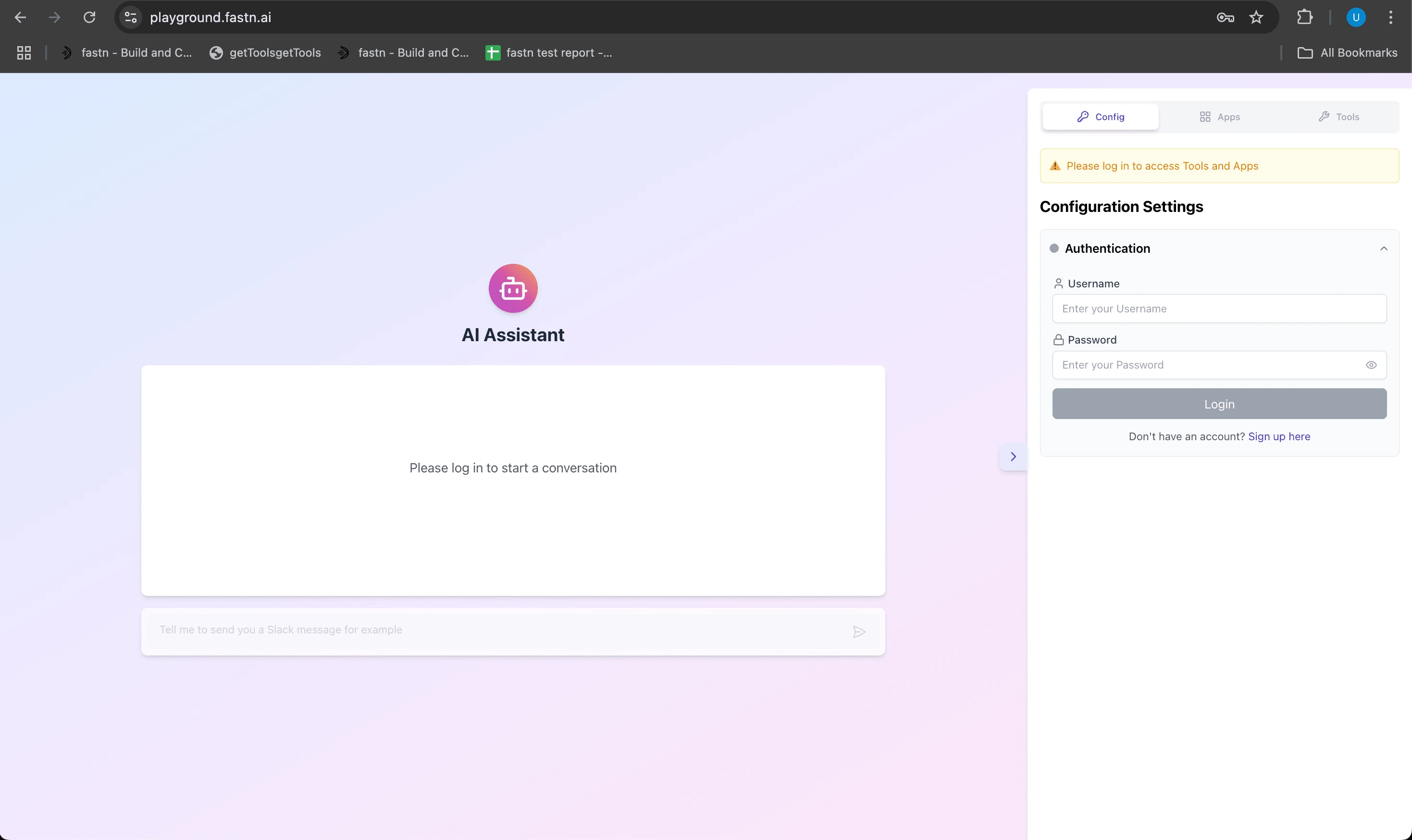

Authentication & Access Control

Implemented secure login systems and token management for protected, user-specific experiences.
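Token management of this kind is often built on signed, expiring tokens. The sketch below uses an HMAC-SHA256 signature over a small JSON payload; the token layout and the `issue_token` and `verify_token` names are assumptions for illustration, and a production system would more likely rely on an established standard such as JWT or OAuth 2.0.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _sign(body: str, secret: bytes) -> str:
    return hmac.new(secret, body.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, secret: bytes, ttl_seconds: int = 3600,
                now: Optional[float] = None) -> str:
    """Create a signed token that names the user and an expiry time."""
    issued = time.time() if now is None else now
    payload = json.dumps({"sub": user_id, "exp": issued + ttl_seconds})
    body = base64.urlsafe_b64encode(payload.encode()).decode()
    return f"{body}.{_sign(body, secret)}"


def verify_token(token: str, secret: bytes,
                 now: Optional[float] = None) -> Optional[str]:
    """Return the user id if the token is authentic and unexpired, else None."""
    try:
        body, signature = token.split(".")
    except ValueError:
        return None  # Malformed: not exactly one separator.
    expected = _sign(body, secret)
    # Constant-time comparison avoids leaking signature prefixes via timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    claims = json.loads(base64.urlsafe_b64decode(body))
    current = time.time() if now is None else now
    if current >= claims["exp"]:
        return None
    return claims["sub"]
```

The optional `now` parameter exists only to make expiry behavior easy to test; callers would normally omit it.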

This system equips AI agents with real-time streaming, cross-tool execution, and long-term context memory for enterprise automation.

Posted May 6, 2025

Built a real-time AI server supporting GPT & Gemini models, 1500+ tool integrations (Slack, Teams, Jira, etc.), context-aware chat, and MCP/SSE/STDIO protocols.