Development of an Intelligent RAG System for Document Management

The Challenge

Organizations struggle to efficiently search and extract information from large document collections. Manual document review is time-consuming, and traditional keyword search often misses semantically related content. Users need an intelligent system that understands context, provides accurate answers with source citations, and can handle multiple document formats seamlessly.

The Solution

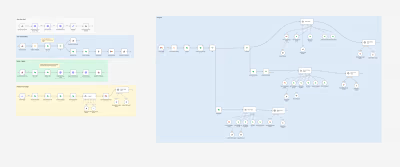

Built a RAG-powered system that combines vector embeddings with LLM generation. Documents are automatically chunked, embedded, and stored in a Qdrant vector database. When users ask questions, the system runs a semantic similarity search to retrieve the most relevant document chunks, then uses Google Gemini to generate answers grounded in those chunks. The solution includes a complete document management system with upload and delete capabilities, automatic re-indexing, and clickable source references that open the original documents.
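The chunk, embed, and retrieve flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's code: `chunk_text`, `toy_embed`, and `top_k` are hypothetical names, the chunk size and overlap are arbitrary, and a bag-of-words vector with an in-memory list stands in for Gemini embeddings and Qdrant so the example runs with the standard library only. A word-count vector matches shared terms rather than meaning, so it only shows the shape of the pipeline.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
        start += step
    return chunks


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a word-count vector (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    q = toy_embed(query)
    scored = [(cosine(q, toy_embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    docs = [
        "Refunds are processed within five business days.",
        "Upload PDF files from the sidebar.",
    ]
    for score, chunk in top_k("how do I upload a PDF", docs, k=1):
        print(f"{score:.2f}  {chunk}")
```

In a real deployment the embedding call and the similarity search would be delegated to the embedding model and the vector database; the overlap between chunks is a common way to avoid cutting an answer in half at a chunk boundary.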

Implementation Details

The system is a production-ready FAQ support bot built with FastAPI and LangChain that implements a RAG architecture for document question answering. It automatically processes and indexes PDF and text documents into a Qdrant vector database using Google Gemini embeddings. Users can upload documents, browse them in a sidebar, and ask questions through a chat interface. The bot retrieves relevant document chunks using semantic similarity search, adds them to the prompt as context, and generates answers using the Google Gemini 2.5 Flash LLM.
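The augmentation step, where retrieved chunks are placed into the prompt alongside the question, might look like the sketch below. The `RetrievedChunk` type, the `build_prompt` helper, and the prompt wording are all hypothetical and chosen for illustration; the project's actual prompt template and LangChain wiring are not shown in this write-up. Numbering each chunk gives the model a handle to cite, which the interface can later map back to a source document.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RetrievedChunk:
    """A chunk returned by retrieval, with where it came from (hypothetical type)."""
    text: str
    source: str                 # file name of the original document
    page: Optional[int] = None  # page number, when the source is paginated


def build_prompt(question: str, chunks: list[RetrievedChunk]) -> str:
    """Assemble a prompt that numbers each chunk so the answer can cite [n]."""
    context_lines = []
    for i, chunk in enumerate(chunks, start=1):
        if chunk.page is not None:
            location = f"{chunk.source}, p. {chunk.page}"
        else:
            location = chunk.source
        context_lines.append(f"[{i}] ({location}) {chunk.text}")
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the numbered context below. "
        "Cite sources as [n]. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    retrieved = [
        RetrievedChunk("Use the sidebar to upload files.", "guide.pdf", page=3),
        RetrievedChunk("Text files are supported as well.", "notes.txt"),
    ]
    print(build_prompt("How do I upload a document?", retrieved))
```

The resulting string would then be sent to the LLM; keeping the source and page next to each number is what makes clickable references possible on the way back.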

The result is a production-ready RAG system that processes documents automatically, answers questions with source citations, and includes built-in document management. It handles multiple document formats, maintains conversation context, and links each answer to its source material through clickable references. The system is deployed on Railway with zero-configuration startup indexing.

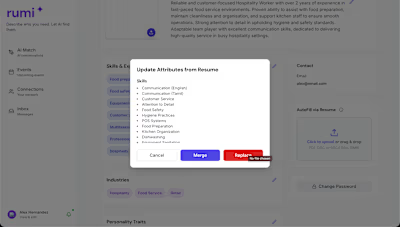

Posted Jan 19, 2026

Developed a RAG system for efficient document information retrieval.
