AI Search Optimization Platform

Posted Jan 25, 2026

A SaaS platform that tracks brand visibility across AI search engines. Built with Trigger.dev queues, LangChain for a unified interface to multiple AI APIs, and Supabase row-level security.

Timeline

Aug 7, 2025 - Oct 7, 2025

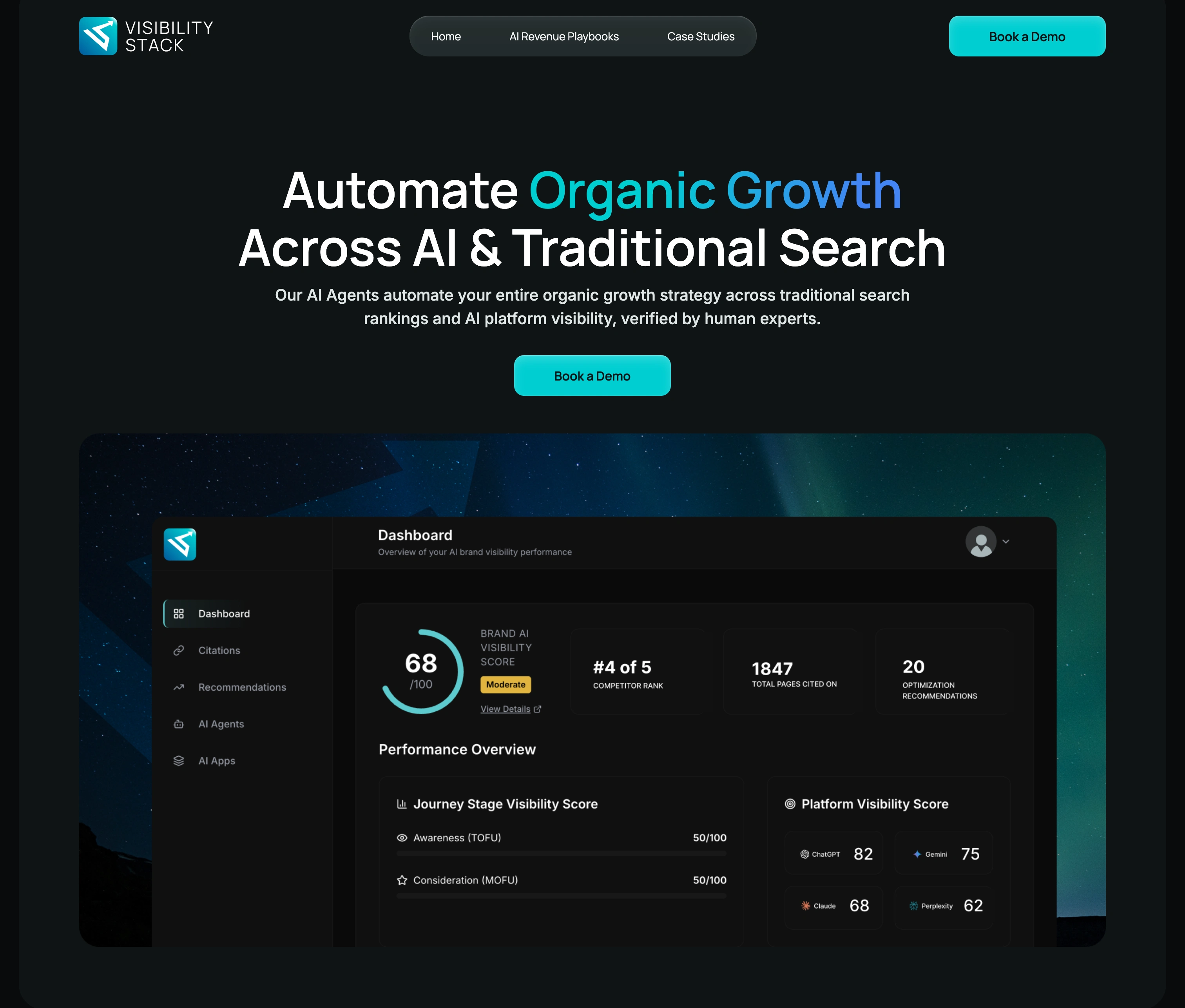

VisibilityStack tracks how brands appear in AI search results across ChatGPT, Claude, Gemini, and Perplexity. Marketing teams use it to see when (and whether) AI recommends them over competitors.

What Made This Hard

Rate limits across four different AI providers. Each API has different limits, pricing, and failure modes. The platform queries each provider hundreds of times per customer, per day. Hit the limits, you get data gaps. Exceed budgets, you bleed money.
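A common way to handle this kind of rate-limit problem is one token bucket per provider, so exhausting one provider's allowance never blocks the others. Below is a minimal sketch under stated assumptions: the `Provider` names and the `LIMITS_PER_MINUTE` values are hypothetical placeholders, not the providers' real quotas, and time is passed in explicitly so the logic is easy to test.

```typescript
// Hypothetical provider identifiers; the real platform's names may differ.
type Provider = "chatgpt" | "claude" | "gemini" | "perplexity";

// A classic token bucket: holds up to `capacity` tokens, refilled continuously.
class TokenBucket {
  private tokens: number;
  private lastRefill: number;
  private readonly capacity: number;
  private readonly refillPerMs: number;

  constructor(capacity: number, refillPerMs: number, now: number) {
    this.capacity = capacity;
    this.refillPerMs = refillPerMs;
    this.tokens = capacity;
    this.lastRefill = now;
  }

  // Returns true and consumes one token if a request may be sent at time `now` (ms).
  tryTake(now: number): boolean {
    const elapsed = Math.max(0, now - this.lastRefill);
    this.tokens = Math.min(this.capacity, this.tokens + elapsed * this.refillPerMs);
    this.lastRefill = now;
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// Placeholder limits in requests per minute (assumptions, not real provider quotas).
const LIMITS_PER_MINUTE: Record<Provider, number> = {
  chatgpt: 60,
  claude: 50,
  gemini: 60,
  perplexity: 20,
};

// One independent bucket per provider, starting full at time `now`.
function makeBuckets(now: number): Record<Provider, TokenBucket> {
  const buckets = {} as Record<Provider, TokenBucket>;
  for (const p of Object.keys(LIMITS_PER_MINUTE) as Provider[]) {
    const rpm = LIMITS_PER_MINUTE[p];
    buckets[p] = new TokenBucket(rpm, rpm / 60000, now);
  }
  return buckets;
}
```

Because each provider has its own bucket, draining Perplexity's allowance leaves ChatGPT's untouched, which is the kind of isolation a per-provider queue needs.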

Onboarding data has to be precise. Users input their brand, competitors, and custom prompts to track. If this data is wrong, every visibility score is garbage. I built strict Zod validation for each field, plus an admin approval step before accounts go live.
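The project uses Zod for these checks. To keep this example dependency-free, the sketch below hand-writes comparable rules and returns a result shaped loosely like Zod's `safeParse` output (`success` plus either the cleaned data or a list of errors). The field names, the domain pattern, and the `MAX_COMPETITORS` and `MAX_PROMPTS` limits are illustrative assumptions, not the platform's actual schema.

```typescript
// Hypothetical onboarding payload; field names are illustrative, not the real schema.
interface OnboardingInput {
  brandName: string;
  brandDomain: string;
  competitors: string[];
  prompts: string[];
}

type ValidationResult =
  | { success: true; data: OnboardingInput }
  | { success: false; errors: string[] };

// Assumed limits, chosen only for illustration.
const MAX_COMPETITORS = 10;
const MAX_PROMPTS = 200;
const DOMAIN_RE = /^(?!-)[a-z0-9-]+(\.[a-z0-9-]+)+$/i;

// Trim entries, drop empty strings, and remove exact duplicates.
function cleanList(items: string[]): string[] {
  return Array.from(new Set(items.map((s) => s.trim()).filter(Boolean)));
}

function validateOnboarding(raw: OnboardingInput): ValidationResult {
  const errors: string[] = [];
  const brandName = raw.brandName.trim();
  const brandDomain = raw.brandDomain.trim().toLowerCase();
  const competitors = cleanList(raw.competitors);
  const prompts = cleanList(raw.prompts);

  if (brandName.length === 0) errors.push("brandName: required");
  if (!DOMAIN_RE.test(brandDomain)) errors.push("brandDomain: must look like example.com");
  if (competitors.length === 0) errors.push("competitors: at least one required");
  if (competitors.length > MAX_COMPETITORS) errors.push(`competitors: at most ${MAX_COMPETITORS}`);
  // A brand listed as its own competitor would skew every comparison.
  if (competitors.some((c) => c.toLowerCase() === brandName.toLowerCase())) {
    errors.push("competitors: must not include the brand itself");
  }
  if (prompts.length === 0) errors.push("prompts: at least one required");
  if (prompts.length > MAX_PROMPTS) errors.push(`prompts: at most ${MAX_PROMPTS}`);

  return errors.length === 0
    ? { success: true, data: { brandName, brandDomain, competitors, prompts } }
    : { success: false, errors };
}
```

In a Zod schema the same rules would be expressed declaratively, with `.trim()`, `.min()`, `.max()`, and a `.refine()` for the brand-versus-competitor check.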

Background processing at scale. Visibility checks run continuously, not on-demand. The system executes prompts across all four AI platforms, parses responses, extracts citations, calculates scores, and updates dashboards. This happens for dozens of customers with hundreds of prompts each.
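The parse, extract, and score stages can be sketched as pure functions over a normalized response. This is a minimal illustration, not the platform's scoring model: the `NormalizedResponse` shape, the naive substring brand match, and the weights in `visibilityScore` (0.6 for a mention, +0.2 for citing the brand's domain, +0.2 for naming the brand before every tracked competitor) are all hypothetical.

```typescript
// Hypothetical normalized response shape; the real parser's output may differ.
interface NormalizedResponse {
  provider: string;
  text: string;
  citations: string[]; // URLs returned as citations, if the provider supplies them
}

interface BrandCheck {
  mentioned: boolean;
  cited: boolean;
  rank: number | null; // 1 = brand named before all tracked competitors
}

// Pull bare URLs out of free text, for providers that inline links.
function extractUrls(text: string): string[] {
  return text.match(/https?:\/\/[^\s)\]]+/g) ?? [];
}

// Hostname without a leading "www.", or null if the string is not an http(s) URL.
function hostOf(url: string): string | null {
  const m = /^https?:\/\/(?:www\.)?([^\/:?#]+)/i.exec(url);
  return m ? m[1].toLowerCase() : null;
}

function checkBrand(
  res: NormalizedResponse,
  brand: string,
  brandDomain: string,
  competitors: string[],
): BrandCheck {
  const lower = res.text.toLowerCase();
  // Naive substring match: "Acme" would also match "Acmeville".
  const firstIndex = (name: string) => lower.indexOf(name.toLowerCase());
  const brandIdx = firstIndex(brand);
  const mentioned = brandIdx !== -1;
  const urls = res.citations.concat(extractUrls(res.text));
  const cited = urls.some((u) => hostOf(u) === brandDomain.toLowerCase());
  let rank: number | null = null;
  if (mentioned) {
    const earlier = competitors.filter((c) => {
      const i = firstIndex(c);
      return i !== -1 && i < brandIdx;
    });
    rank = earlier.length + 1;
  }
  return { mentioned, cited, rank };
}

// Hypothetical 0-100 score: average per-response credit across all checked responses.
function visibilityScore(checks: BrandCheck[]): number {
  if (checks.length === 0) return 0;
  let total = 0;
  for (const c of checks) {
    if (!c.mentioned) continue;
    total += 0.6 + (c.cited ? 0.2 : 0) + (c.rank === 1 ? 0.2 : 0);
  }
  return Math.round((total / checks.length) * 100);
}
```

Keeping these stages pure makes them easy to run inside a background job and to re-run over stored responses if the scoring weights change.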

How I Built It

The core insight was treating AI requests as jobs rather than synchronous calls. Every request goes through a Trigger.dev queue that respects per-provider rate limits, retries failed requests with backoff, and, when one provider hits its limit, pauses that provider while the others keep running.
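The queueing behavior described above (per-provider pauses, retries with exponential backoff) can be modeled without any queue library. This sketch does not use Trigger.dev's API; `ProviderScheduler`, the `Outcome` values, the 30-second pause, and the backoff constants are hypothetical, and the clock is injected so each `tick` is deterministic.

```typescript
// Hypothetical types; Trigger.dev's real task, queue, and retry APIs are not shown here.
type Provider = "chatgpt" | "claude" | "gemini" | "perplexity";
type Outcome = "ok" | "rate_limited" | "failed";

interface Job {
  id: string;
  provider: Provider;
  attempts: number;
  notBefore: number; // earliest time (ms) the job may run again
}

// Exponential backoff: base * 2^(attempt - 1), capped at capMs.
function backoffMs(attempt: number, baseMs = 1000, capMs = 60000): number {
  return Math.min(capMs, baseMs * Math.pow(2, attempt - 1));
}

class ProviderScheduler {
  private queue: Job[] = [];
  private readonly pausedUntil = new Map<Provider, number>();
  readonly done: string[] = [];
  readonly dead: string[] = [];
  private readonly maxAttempts: number;
  private readonly pauseMs: number;

  constructor(maxAttempts = 5, pauseMs = 30000) {
    this.maxAttempts = maxAttempts;
    this.pauseMs = pauseMs;
  }

  enqueue(id: string, provider: Provider, now: number): void {
    this.queue.push({ id, provider, attempts: 0, notBefore: now });
  }

  // One pass at time `now`: every job whose provider is not paused and whose
  // backoff has elapsed is attempted once; everything else waits.
  tick(now: number, call: (job: Job) => Outcome): void {
    const pending = this.queue;
    this.queue = [];
    for (const job of pending) {
      const paused = (this.pausedUntil.get(job.provider) ?? 0) > now;
      if (paused || job.notBefore > now) {
        this.queue.push(job);
        continue;
      }
      job.attempts += 1;
      const outcome = call(job);
      if (outcome === "ok") {
        this.done.push(job.id);
        continue;
      }
      if (outcome === "rate_limited") {
        // Pause only this provider; other providers' jobs keep flowing in this pass.
        this.pausedUntil.set(job.provider, now + this.pauseMs);
      }
      if (job.attempts >= this.maxAttempts) {
        this.dead.push(job.id); // give up and surface the gap instead of retrying forever
        continue;
      }
      job.notBefore = now + backoffMs(job.attempts);
      this.queue.push(job);
    }
  }
}
```

A rate-limited call pauses only its own provider for `pauseMs`; jobs for other providers are still attempted in the same `tick`. In production, adding random jitter to `backoffMs` helps avoid synchronized retries; it is left out here to keep the behavior deterministic.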

For AI integration, I used LangChain to create a unified interface across all four providers. The same prompt goes to ChatGPT, Claude, Gemini, and Perplexity, and the responses are normalized and aggregated.
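The adapter idea can be shown with a small provider-agnostic interface. LangChain's chat model classes share a common `invoke` method, which is what makes this pattern practical, but the `ProviderAdapter` interface, `fanOut`, and the `stub` adapters here are hypothetical stand-ins rather than LangChain code.

```typescript
// A provider-agnostic adapter; in a real system a LangChain chat model could sit behind this.
interface ProviderAdapter {
  name: string;
  invoke(prompt: string): Promise<string>;
}

interface ProviderResult {
  provider: string;
  ok: boolean;
  text: string; // normalized response text, empty on failure
}

// Collapse whitespace so downstream parsing sees consistent text across providers.
function normalize(text: string): string {
  return text.replace(/\s+/g, " ").trim();
}

// Send the same prompt to every adapter; one provider failing does not fail the batch.
async function fanOut(prompt: string, adapters: ProviderAdapter[]): Promise<ProviderResult[]> {
  return Promise.all(
    adapters.map(async (a): Promise<ProviderResult> => {
      try {
        return { provider: a.name, ok: true, text: normalize(await a.invoke(prompt)) };
      } catch {
        return { provider: a.name, ok: false, text: "" };
      }
    }),
  );
}

// Hypothetical stub adapter for illustration: echoes a canned reply plus the prompt.
const stub = (name: string, reply: string): ProviderAdapter => ({
  name,
  invoke: async (prompt: string) => `${reply}\n\n(prompt: ${prompt})`,
});
```

Under this design, a new provider is one more object that satisfies `ProviderAdapter`; the fan-out and normalization code does not change.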

The superadmin system lets our team review pending registrations, edit user configurations, and view any customer's dashboard without logging in as that customer. That last part required encrypted session tokens and row-level security in Supabase. Admins see scoped data, not full database access.
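The scoping rule behind a read-only "view as user" session can be expressed as a small predicate. This is a sketch under assumptions: `ImpersonationClaims`, `issueClaims`, the 15-minute lifetime, and `canAccessRow` are hypothetical, and neither the token encryption nor the actual Supabase row-level security policies are shown. The predicate only mirrors the kind of check such a policy would enforce: the row must belong to the impersonated user, and only reads are allowed.

```typescript
// Hypothetical payload of an admin "view as user" token. In practice it would be
// encrypted or signed before leaving the server; that step is omitted here.
interface ImpersonationClaims {
  adminId: string;
  targetUserId: string;
  scope: "read";
  expiresAt: number; // epoch milliseconds
}

type Action = "read" | "write";

// Issue short-lived, read-only claims bound to a single target user.
function issueClaims(
  adminId: string,
  targetUserId: string,
  now: number,
  ttlMs = 15 * 60 * 1000,
): ImpersonationClaims {
  return { adminId, targetUserId, scope: "read", expiresAt: now + ttlMs };
}

// Mirrors an RLS-style predicate: row ownership must match the impersonated user.
function canAccessRow(claims: ImpersonationClaims, rowOwnerId: string, action: Action, now: number): boolean {
  if (now >= claims.expiresAt) return false; // expired claims grant nothing
  if (action !== claims.scope) return false; // admins view as the user; they do not write as them
  return rowOwnerId === claims.targetUserId;
}
```

Binding the claims to one `targetUserId` is what keeps the admin view scoped to a single customer's rows rather than the whole database.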

What I'd Highlight

Queue architecture handles 500+ daily AI requests without hitting rate limits

Admin approval workflow catches bad data before it corrupts tracking

Secure "view as user" feature cuts support time in half (we can see exactly what customers see)

Adding a fifth AI provider would take about a day: just add another LangChain adapter

Tech Stack

Frontend: Next.js, TypeScript, Tailwind CSS, Shadcn UI, Zod

Backend: Supabase (PostgreSQL, Auth, Row-Level Security)

Queue/Background Jobs: Trigger.dev

AI Integration: LangChain, ChatGPT API, Claude API, Gemini API, Perplexity API

Email: SendGrid

Deployment: Vercel