ShubhamKrSingh21/Objectify

Objectify - Advanced Object Detection on Satellite/Regular Images

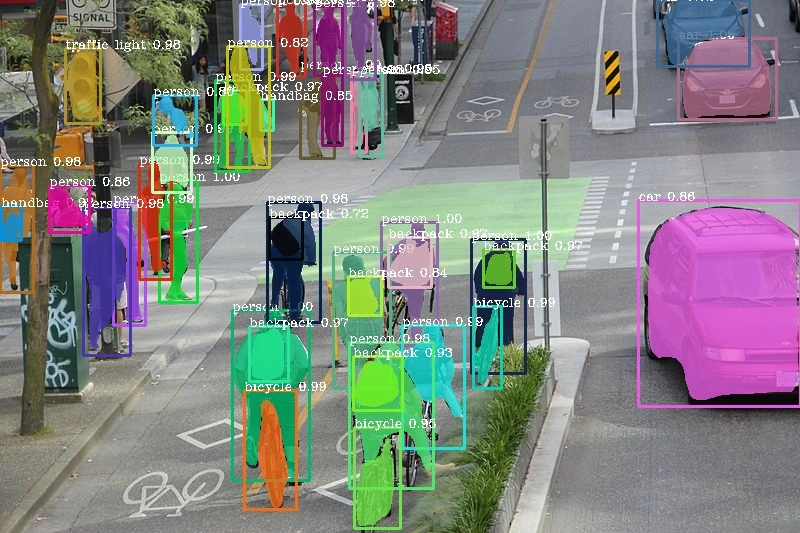

Object Detection and Instance Segmentation -

Vehicles (Truck, Bus, Boat, Airplane)

Roads Signage - (Zebra Crossing, Traffic Light)

Man-Made Architectures - (Buildings, Bridges)

DataSets

COCO - The MS COCO (Microsoft Common Objects in Context) dataset is a large-scale object detection , segmentation, key-point detection, and captioning dataset. The dataset consists of 328K images.CityScapes - Cityscapes is a large-scale database which focuses on semantic understanding of urban street scenes. It provides semantic, instance-wise, and dense pixel annotations for 30 classes grouped into 8 categories (flat surfaces, humans, vehicles, constructions, objects, nature, sky, and void). Data was captured in 50 cities during several months, daytimes, and good weather conditions with over 25k images.Objectives :

Fully Working Web application : Allowing the user to Upload Image.

Perform

Instance Segmentation plus Object Detection - Creating Annotations over the Uploaded Image with Bounding Boxes and Class Names, and Pixel Labelling.Displaying the Output Image with the Annotated Object on it.

Our Idea :

Our web app takes an input image from the user using

JavaScriptThe respective image gets saved in the locally hosted centralised

SQL database.The model will fetch the object and will detect the same, using the libraries

tensorflow, pytorch & pixellib with Deep Learning Models such as PointRend and MobileNetV3.The input image gets annotated using

cv2 libraries.The

annotated objects that has been detected, gets displayed along with the original uploaded image via the Django Backend.The model gives results in the form of a

JSON (JavaScript Object Notation) format and the output is displayed with CSS and HTML website on the local web server.🛠 Tech Stack

⚙️ What is Object Detection, Instance & Semantic Segmentation?

Object Detection is a computer vision technique for locating instances of objects in images or videos. Object detection algorithms typically leverage machine learning or deep learning to produce meaningful results. When humans look at images or video, we can recognize and locate objects of interest within a matter of moments. The goal of object detection is to replicate this intelligence using a computer.Instance Segmentation is identifying each object instance for every known object within an image. Instance segmentation assigns a label to each pixel of the image. It is used for tasks such as counting the number of objects in an image along with object localization.⚙️ Object Detection using Pointrend Model

For performing

segmentation of the objects in images and videos, PixelLib library is used, and so we have invoked the same in our respective project. PixelLib provides support for Pytorch and it uses PointRend for performing more accurate and real time instance segmentation of objects in images and videos. Hence, annotations over the image takes place once the work is done.⚙️ Instance Segmentation using MobileNetV3

The implementation of the

MobileNetV3 architecture follows closely the original paper and it is customizable and offers different configurations for building Classification, Object Detection and Semantic Segmentation backbones. Furthermore, it was designed to follow a similar structure to MobileNetV2 and the two share common building blocks. The MobileNetV3 class is responsible for building a network out of the provided configuration. The models are then adapted and applied to the tasks of object detection and semantic segmentation. For the task of semantic segmentation (or any dense pixel prediction), we propose a new efficient segmentation decoder to achieve new state of the art results for mobile classification, detection and segmentation. Finally, the project tries to faithfully implement MobileNetV3 for real-time semantic segmentation, with the aims of being efficient, easy to use and extensible.⚙️ Instance Segmentation vs. Object Detection

Object Detection

Instance Segmentation

Like this project

Posted Mar 28, 2024

Contribute to ShubhamKrSingh21/Objectify development by creating an account on GitHub.

Likes

0

Views

3

Clients

Flask

DataSet Analysis