Secret Hitler.io

Vignesh Joglekar

Platform Engineer

Web Developer

Database Administrator

MongoDB

Node.js

React

Redis

Overview 🔎

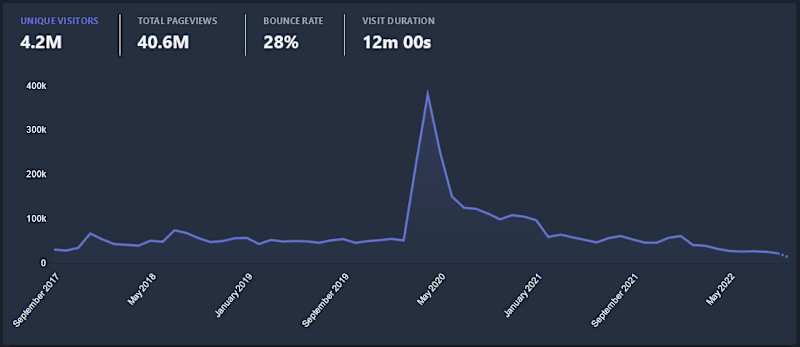

From humble beginnings as a personal portfolio addition project created by a single developer back in 2017, to reaching ~20k daily active users and over 1.5 million games played, several infrastructure, scale, and codebase quality and maintenance issues were bound to occur. With my involvement in the project starting in late 2018, to quickly being thrust into a majority contributor, to a lead developer, eventually to a site administrator position, nearly all of these issues were placed upon my plate to solve whilst still keeping up with the feature requests and improvements for a continually growing playerbase.

Problem & Solution 🤝

With the playerbase spiking nearly 800% within the course of a month, to active games jumping by a factor of 10 - the strain on the single threaded and single process server was insurmountable, all whilst a persistent DDoS attack was also continuously affecting the server. After nearly 2 years of configuration changes, new implementations, and many, many series of back and forth with major overhauls to the server architecture - we are finally improving upon the peak performance we once achieved.

Goals/Requirements The following were our primary goals and hopes to accomplish as part of this long-term resolution - in no particular order.

Defend against a medium-scale naive DDoS attack

Resolve performance bottlenecks caused by 800% playerbase growth

Improve efficiency to reduce server throughput over socket connections

Reduce unnecessary and potentially malicious socket round-trips

Implement broad-spectrum logging and server status capabilities

Process 🛣

Across working through these issues, the first task taken to boot was to implement a broad logging and status infrastructure extension, which incorporated logs from all services running to keep the site health at its best, all whilst not adding a significant amount of throughput to the server and maximizing the at the time limited server storage bucket (increasing the bucket size would have added undue and unnecessary cost and downtime). The primary solution utilized here was to implement logging filtration systems for pre-existing logs from running services (MongoDB, Nginx, and Redis) - this filtration system worked on-machine for the server and based on signals of server latency, resource usage, and active network throughput would save and emit the past 5 minutes worth of logs as a webhook to an external log-consuming service, all whilst deleting the other remaining logs from more than 1 hour prior to that instance to again minimize the growing load on server logs. Alongside the built-in logging systems, we implemented extremely detailed engine-level logging for the node and socket processes that were also passed through the same filtration system.

With these logging and metrics producers set up, the next hope was to reduce the effect of the relatively naive DDoS attack, once again without adding unnecessary CPU overhead to the already strained infrastructure. The one and only way we were able to come up with to resolve this problem was to utilize Cloudflare as a network perimeter, hoping that by entirely segmenting the server network from the internet outside of its inlet through Cloudflare, we could help limit the DDoS attacks that were simply relying on large-scale request spam and overloaded header size attacks. At first, this was more than enough - Cloudflare's built-in DDoS prevention coupled with Javascript Managed Challenges and their Bot Fight Mode allowed for the DDoS attack to be entirely mitigated, for now. (More on this later).

Improving efficiencies and server overhead to help resolve issues caused by the spike in legitimate users caused by the Covid-19 pandemic and the instant spike in online traffic, and especially those centered around entertainment was enormously important for a site such as ours. This was further impacting us simply by the demographic that we served being largely school-aged students, who were most affected by the increase in available online-time due to the pandemic, and were flocking to our site at the time. Through the logging capabilities added earlier on, we were able to quick determine at least a few instances of poor optimization throughout our codebase in the form of excessive unnecessary round-trips for various actions, combined with poor execution time and time complexity for largely trivial tasks. Both were largely a cause of the open-source nature of our website, which while had its enormous advantages in the form of recruiting and retaining consistent development talent, also consisted of significant overhead on the maintainers of the site (largely myself) which resulted in several instances of functional, but not necessarily thoroughly reviewed or optimized code finding its way into the codebase; multiply this by over 65 contributors over 4 years, and the technical debt was sure to pile up. I'm not going to bore anyone with the details of this resolution, just know that it was a large undertaking of a >15k line refactoring of the codebase - which admittedly was a lot of housekeeping, formatting, and re-organizing, but still was entirely necessary and did include significant reductions of server overhead for simple tasks helping to bring down our load in regular scenarios.

Our Contributors are truly fantastic, and those that have stuck around have made a significant difference to the course and life of the site

I did want to take a second here to mention that whilst a lot of the technical debt this project faced was a result of the fragmented and large contributor community we had accumulated (myself included here, I was nearly 50 pull requests in before joining the team), they really were the lifeblood of the site, combined with our moderation and administration team. Without them all, odds are the site would have crumbled a lot quicker; likely largely due to the nature of the internet and the number of potentially problematic actors on nearly any public forum. But even as our maintainer team (myself and the original author) worked through various full-time roles and responsibilities, this team was able to keep the site up and running with one of us simply sitting around "pushing buttons and clicking yes" (quote from the original author of the site) - thereby preventing the site from ending up yet another abandoned online forum.

Back to the final issue we faced

Finally, as we thought we were out of the woods and had resolved all the issues with the site, disaster struck once more. Our once naive DDoS attack had developed into a much more sophisticated attack, utilizing various newly discovered inefficiencies and potentially exploitable aspects of our stack against us - namely the socket and semi-static infrastructure of some pages. That's what we get for being entirely open and open-source I guess - with some investigation we even determined our DDoS'er was a previous contributor to the site, who had been specifically guided in fixing various systems throughout the codebase by myself and other active developers, go figure.

This was ultimately a long stretch of unresolved issues on our end, with various fixes and improvements ultimately not being enough simply as anything we did was clearly public and gave our DDoS'er a clear picture of potential other unresolved issues or inefficiencies, forcing us to largely rely upon finding and fixing all of them as best as we could, in a game of cat and mouse that went on for nearly 10 months! Luckily, with some resilience, and once again our incredible committed contributor team (or at least the subset of them), we were once again able to regain control of our site, and get it once again ready for players to utilize as they once knew.

Results 🎁

Fortunately, ever since the last of our major changes were deployed the performance of the site has finally surpassed that of the original peak we once achieved, all whilst also continuing to continually fend off a 10Gbps DDoS attack, all largely arriving over a relatively unprotected socket.

Takeaways 📣

All in all, while solving a lot of these issues was an incredible amount of effort to boot - one of the biggest things I learned was easily that the most important aspect to any large-scale performance or efficiency bottlenecks comes down to a clear and efficient logging and status system - for without both of those being in place, odds are the alternative of the site shutting down for good would have been inevitable.

2018