What data sources is the scraper allowed to access?

Identify the websites or databases the data scraper will access to collect information. Make sure these sources can be scraped legally and that their terms permit automated access. Check whether any source requires a license or explicit permission for restricted content.
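One common first check on whether a source permits automated access is its robots.txt file. The sketch below uses Python's standard `urllib.robotparser` on a made-up robots.txt; the rules, the `example-scraper` user agent, and the example.com URLs are all assumptions for illustration. Passing this check does not replace reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a real site you would instead call
# parser.set_url("https://<site>/robots.txt") followed by parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a reusable rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the rules let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(SAMPLE_ROBOTS_TXT)
print(is_allowed(parser, "example-scraper", "https://example.com/listings"))
print(is_allowed(parser, "example-scraper", "https://example.com/private/data"))
print(parser.crawl_delay("example-scraper"))  # seconds to wait between requests
```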

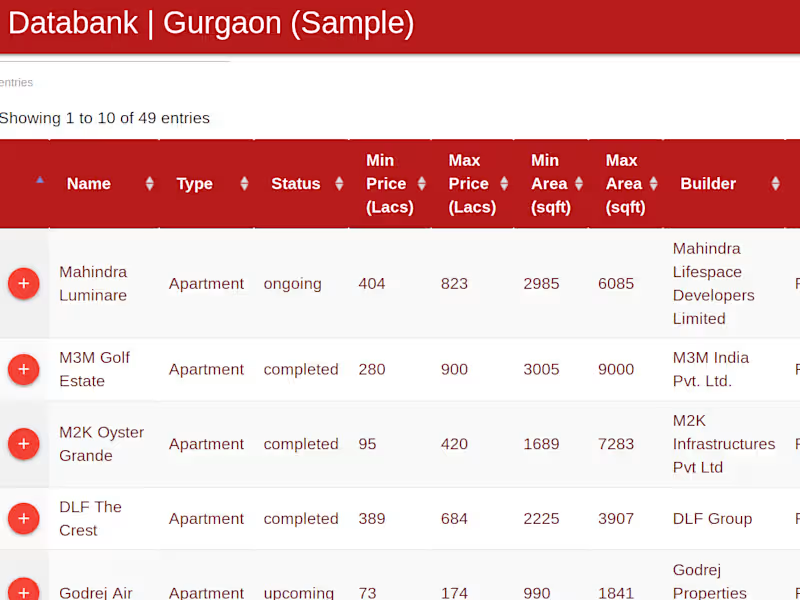

What format and structure should the scraped data have?

Clarify how the data should be organized, such as in a spreadsheet or a specific database format. Define the field names and data types so the scraper captures information correctly. Include a sample row or record to show the expected format, so everyone involved can understand it at a glance.
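A field list with data types can be written down as a small schema and shared with the freelancer. This is a minimal sketch: the `BusinessRecord` class and its fields are hypothetical, chosen only to show how field names, types, and a sample row fit together in a spreadsheet-friendly CSV.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class BusinessRecord:
    """Hypothetical schema: one row per scraped business listing."""
    name: str
    city: str
    rating: float
    scraped_on: date


def to_csv(records: list[BusinessRecord]) -> str:
    """Serialize records to CSV with a header row taken from the schema."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=[f.name for f in fields(BusinessRecord)]
    )
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


sample = [BusinessRecord("Example Cafe", "Bengaluru", 4.5, date(2024, 1, 15))]
csv_text = to_csv(sample)
print(csv_text)
```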

How often does the data need to be updated?

Determine the frequency of data collection, whether daily, weekly, or monthly. Frequent updates may require more complex scraping tools and planning. Explain in simple terms how often new data should be collected to keep it fresh.
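The agreed frequency can be expressed as a simple "is a new run due?" check. The sketch below is an assumption-laden illustration: the `INTERVALS` table and `is_due` function are hypothetical names, and "monthly" is approximated as 30 days.

```python
from datetime import datetime, timedelta

# Assumed mapping from the agreed frequency to a minimum gap between runs.
# "monthly" is approximated as 30 days for simplicity.
INTERVALS = {
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
    "monthly": timedelta(days=30),
}


def is_due(last_run: datetime, frequency: str, now: datetime) -> bool:
    """Return True when enough time has passed since the last collection."""
    return now - last_run >= INTERVALS[frequency]


last_run = datetime(2024, 1, 1, 9, 0)
now = datetime(2024, 1, 2, 9, 0)
print(is_due(last_run, "daily", now))
print(is_due(last_run, "weekly", now))
```

In practice a scheduler such as cron would trigger the scraper; a check like this mainly guards against running more often than agreed.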

What are the project's start and end dates?

Set clear dates for when the data scraping project should start and finish. Knowing the start and end dates helps with planning and resource allocation, and an agreed timeline keeps the work on track.

Are there any regional laws in Karnataka to consider for the data scraped?

Check for any specific laws in Karnataka that might affect data scraping activities. Explain how local regulations can affect what data can be legally collected. Compliance is vital to avoid legal issues during the project.

What special requirements does the project have for data scraping in Bangalore?

Consider any unique challenges or data needs specific to businesses or infrastructure in Bangalore. Discuss any regional databases or websites that need special access or techniques. Note how local business practices in Bangalore can influence what data is available and how it is retrieved.

How will you ensure the data's accuracy and reliability?

Discuss methods to verify the accuracy of the scraped data, like cross-referencing or automated checks. Explain how maintaining data quality is important for the overall success of the project. Use examples to show how errors can change the results.
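Automated checks and cross-referencing can both be quite small. The following sketch assumes records are plain dictionaries with hypothetical `name` and `rating` fields; it flags invalid rows and lists entries whose values disagree between two sources.

```python
def validate_record(record: dict) -> list[str]:
    """Automated check: return a list of problems found in one record."""
    problems = []
    if not str(record.get("name", "")).strip():
        problems.append("name is missing")
    rating = record.get("rating")
    if not isinstance(rating, (int, float)) or not 0 <= rating <= 5:
        problems.append("rating is outside 0-5")
    return problems


def find_mismatches(primary: list[dict], secondary: list[dict],
                    key: str = "name", field: str = "rating") -> list[str]:
    """Cross-reference: keys present in both sources whose field values differ."""
    secondary_by_key = {row[key]: row for row in secondary}
    return [
        row[key]
        for row in primary
        if row[key] in secondary_by_key
        and secondary_by_key[row[key]][field] != row[field]
    ]


source_a = [{"name": "Example Cafe", "rating": 4.5},
            {"name": "Sample Store", "rating": 3.0}]
source_b = [{"name": "Example Cafe", "rating": 4.5},
            {"name": "Sample Store", "rating": 4.0}]

print(validate_record({"name": "", "rating": 7}))
print(find_mismatches(source_a, source_b))
```

A single mistyped rating like the one for "Sample Store" would shift any average computed from the data, which is why mismatches are worth reviewing before analysis.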

What security measures are required for handling the scraped data?

Identify steps like encryption or access controls to protect the data collected. Stress the importance of security to keep private information safe. Highlight simple techniques to ensure data is only seen by those who need it.
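One simple way to limit who sees private fields is to give each role its own view of a record. The sketch below is illustrative only: the role table, the field names, and the key are hypothetical, and it uses keyed hashing (HMAC-SHA256 from Python's standard library) to pseudonymize values. That is not encryption; encrypting data at rest would need a vetted cryptography library or the storage platform's built-in features.

```python
import hashlib
import hmac

# Hypothetical policy: which fields are private and which roles may see them.
SENSITIVE_FIELDS = {"phone", "email"}
ROLE_CAN_SEE_SENSITIVE = {"admin": True, "analyst": False}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a value with a keyed hash so it can be matched but not read."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def view_for_role(record: dict, role: str, secret_key: bytes) -> dict:
    """Return the record as the given role is allowed to see it."""
    if ROLE_CAN_SEE_SENSITIVE.get(role, False):
        return dict(record)
    return {
        field: pseudonymize(str(value), secret_key)
        if field in SENSITIVE_FIELDS else value
        for field, value in record.items()
    }


# Placeholder key for the example; a real key belongs in a secrets manager.
demo_key = b"replace-with-a-real-secret"
record = {"name": "Example Cafe", "phone": "0000000000"}
print(view_for_role(record, "analyst", demo_key))
```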

Do the scrapers need to be adapted for local languages in Karnataka?

Consider whether the scraper needs to support Kannada or other local languages. Handling local-language text makes data gathered from regional websites more usable and makes the results accessible to a wider audience.
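A quick way to see whether scraped pages need Kannada handling is to look for characters in the Kannada Unicode block (U+0C80 to U+0CFF). This is a minimal sketch with hypothetical function names; it assumes the page bytes are UTF-8, which should be confirmed per site.

```python
# The Kannada script occupies the Unicode block U+0C80 to U+0CFF.
KANNADA_START, KANNADA_END = 0x0C80, 0x0CFF


def contains_kannada(text: str) -> bool:
    """Return True if any character falls in the Kannada Unicode block."""
    return any(KANNADA_START <= ord(ch) <= KANNADA_END for ch in text)


def kannada_share(text: str) -> float:
    """Fraction of non-space characters that are Kannada (0.0 for empty text)."""
    chars = [ch for ch in text if not ch.isspace()]
    if not chars:
        return 0.0
    return sum(KANNADA_START <= ord(ch) <= KANNADA_END for ch in chars) / len(chars)


# Simulate bytes received from a page and decode them explicitly as UTF-8.
raw_bytes = "ಬೆಂಗಳೂರು listings".encode("utf-8")
page_text = raw_bytes.decode("utf-8")

print(contains_kannada(page_text))
print(contains_kannada("Bangalore listings"))
print(round(kannada_share(page_text), 2))
```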

How will the data be stored after collection?

Define where the data will be kept, such as a cloud database or local servers. Explain how the choice of storage affects data accessibility and durability. Make sure the storage meets the project's data security and compliance needs.
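For small projects, a local database file can be enough. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `listings` table and its columns are hypothetical, and a cloud database would follow the same pattern with a different driver.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS listings ("
        "url TEXT PRIMARY KEY, name TEXT NOT NULL, scraped_on TEXT NOT NULL)"
    )
    return conn


def save_listing(conn: sqlite3.Connection, url: str, name: str,
                 scraped_on: str) -> None:
    """Insert a listing, replacing any earlier row for the same URL."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO listings (url, name, scraped_on) "
            "VALUES (?, ?, ?)",
            (url, name, scraped_on),
        )


conn = open_store()
save_listing(conn, "https://example.com/a", "Example Cafe", "2024-01-15")
save_listing(conn, "https://example.com/a", "Example Cafe (updated)", "2024-01-16")
rows = conn.execute("SELECT url, name, scraped_on FROM listings").fetchall()
print(rows)
```

Keying rows on the URL means repeated scrapes update a listing rather than duplicating it.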

Who is Contra for?

Contra is designed for both freelancers (referred to as "independents") and clients. Freelancers can showcase their work, connect with clients, and manage projects commission-free. Clients can discover and hire top freelance talent for their projects.

What is the vision of Contra?

Contra aims to revolutionize the world of work by providing an all-in-one platform that empowers freelancers and clients to connect and collaborate seamlessly, eliminating traditional barriers and commission fees.

People also hire

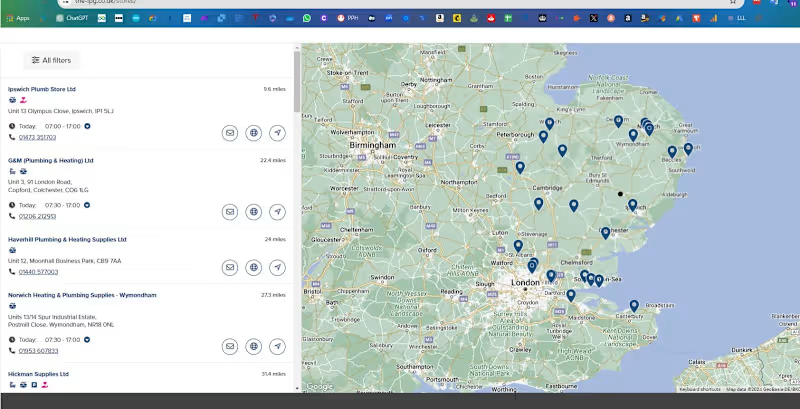

Explore projects by Data Scrapers in Karnataka on Contra

Top services from Data Scrapers in Karnataka on Contra

Data Extraction & Mining (Static/Live Data Streams): $200. Tags: AWS, Cloud Infrastructure Architect, Data Scraper, +5 more.

I will conduct web scraping using python | 7+ Yrs Exp: $150. Tags: Django, Backend Engineer, Data Scraper, +5 more.

I will develop custom python bots and web crawlers | 7+ Yrs Exp: $350/wk. Tags: BeautifulSoup, Data Engineer, Data Scraper, +4 more.