Finetuning Large Language Models (LLMs)

Balaj Khalid

I specialize in fine-tuning large language models (LLMs) for your specific use case. Drawing on extensive hands-on experience, I use established frameworks to deliver models that are accurate, efficient, and easy to use. I focus on tailored, actionable solutions that align with your business goals.

What's included

Codebase with Detailed Comments

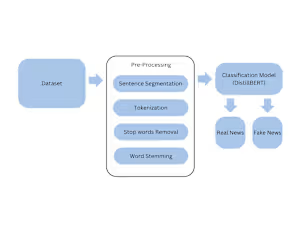

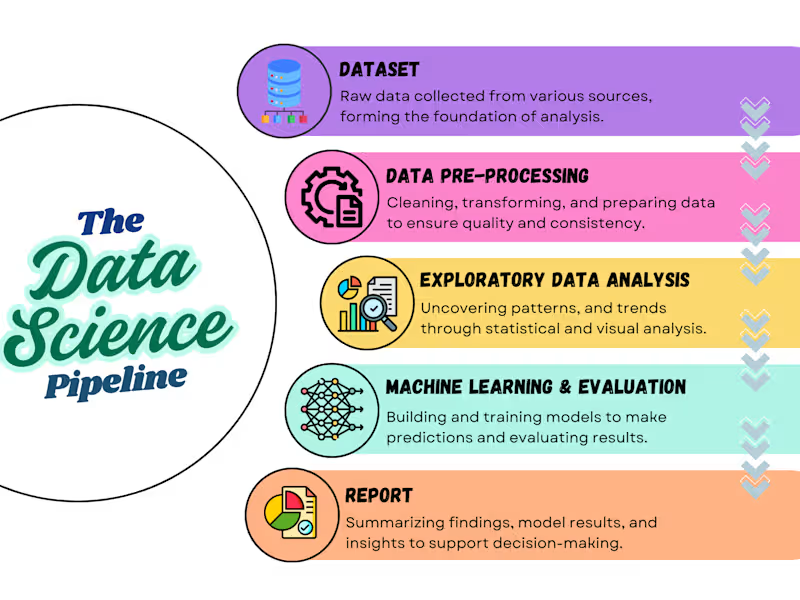

A clean and well-structured repository (e.g., GitHub or GitLab) containing all scripts for data preprocessing, model fine-tuning, and evaluation. The code will include detailed comments explaining each step, from loading and cleaning data to fine-tuning models such as GPT, Llama, DeepSeek, BERT, and DistilBERT for your needs. The repository will also include instructions for setting up the environment, running the code, and reproducing the results.
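As a rough illustration of what an evaluation script in such a repository might compute, here is a minimal sketch of accuracy and macro-averaged F1 for a classification task. The function names and the sample labels are hypothetical and chosen only for this example; a real project would usually rely on an established metrics library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-label F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical labels for illustration only
y_true = ["spam", "ham", "spam", "ham"]
y_pred = ["spam", "ham", "ham", "ham"]

acc = accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
```

Macro F1 weights every label equally, which makes it a useful companion to accuracy when the classes are imbalanced.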

FAQs

I can fine-tune various transformer-based models, including GPT-3, GPT-4, Llama, DistilBERT, and models available through Hugging Face, based on your specific requirements.

You can provide a structured dataset (e.g., CSV, JSON, or text files). If you don’t have one, I can assist in data collection and preprocessing.
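To give a sense of the preprocessing involved, the sketch below converts a CSV file into JSONL prompt/completion records using only the Python standard library. The column names (`question`, `answer`), the output field names, and the sample rows are assumptions made for this example; the actual schema depends on your data and the target model.

```python
import csv
import io
import json


def csv_to_jsonl(csv_text: str, prompt_col: str, completion_col: str) -> str:
    """Convert CSV rows into JSONL records, dropping rows with a missing field."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for row in reader:
        prompt = (row.get(prompt_col) or "").strip()
        completion = (row.get(completion_col) or "").strip()
        if not prompt or not completion:
            continue  # skip incomplete rows instead of training on them
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)


# Hypothetical sample data: the second row has no answer and is dropped
sample_csv = (
    "question,answer\n"
    "What is 2+2?,4\n"
    "Empty answer,\n"
    "  Capital of France? ,Paris\n"
)
jsonl = csv_to_jsonl(sample_csv, "question", "answer")
print(jsonl)
```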

I can optimize training using techniques like quantization, distillation, and efficient training methods that work on limited computational resources.
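For readers unfamiliar with quantization, here is a minimal, library-free sketch of symmetric absmax int8 quantization, one common way to shrink model weights. The function names and the weight values are hypothetical; in a real project this is normally handled by library tooling rather than hand-written code.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration only
weights = [0.5, -1.27, 0.031, 1.0, -0.4]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four (for 32-bit floats), at the cost of a small rounding error per value.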

Yes, I can guide you through deploying the model using APIs, cloud platforms (AWS, GCP), or local inference setups.
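As one possible shape for a local inference setup, the sketch below wraps a model behind a small JSON API using Python's standard `http.server` module. The `predict` function is a placeholder standing in for a fine-tuned model, and the request format (`{"text": "..."}`), port, and function names are assumptions for this example, not a fixed design.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(text: str) -> dict:
    """Placeholder for the fine-tuned model; returns a dummy label."""
    label = "positive" if "good" in text.lower() else "negative"
    return {"input": text, "label": label}


def handle_payload(body: bytes):
    """Validate a JSON request body and return (status_code, response_bytes)."""
    try:
        text = json.loads(body)["text"]
    except (ValueError, KeyError, TypeError):
        return 400, json.dumps({"error": "expected a JSON object with a 'text' field"}).encode()
    if not isinstance(text, str):
        return 400, json.dumps({"error": "'text' must be a string"}).encode()
    return 200, json.dumps(predict(text)).encode()


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, response = handle_payload(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8000):
    """Start the server on localhost; call this explicitly to run it."""
    HTTPServer(("127.0.0.1", port), InferenceHandler).serve_forever()
```

Keeping the validation logic in `handle_payload`, separate from the HTTP handler, makes it straightforward to test without starting a server and to reuse behind a cloud platform's own request layer.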

The time depends on the model size, dataset complexity, and required optimizations. A standard fine-tuning job typically takes 5-10 days.


Starting at $100

Duration: 1 week

Tags

BERT

Hugging Face

Data Analyst

Data Modelling Analyst

Data Scientist

Service provided by

Balaj Khalid Los Angeles, USA

