Data Engineering

Pradip Bhandari

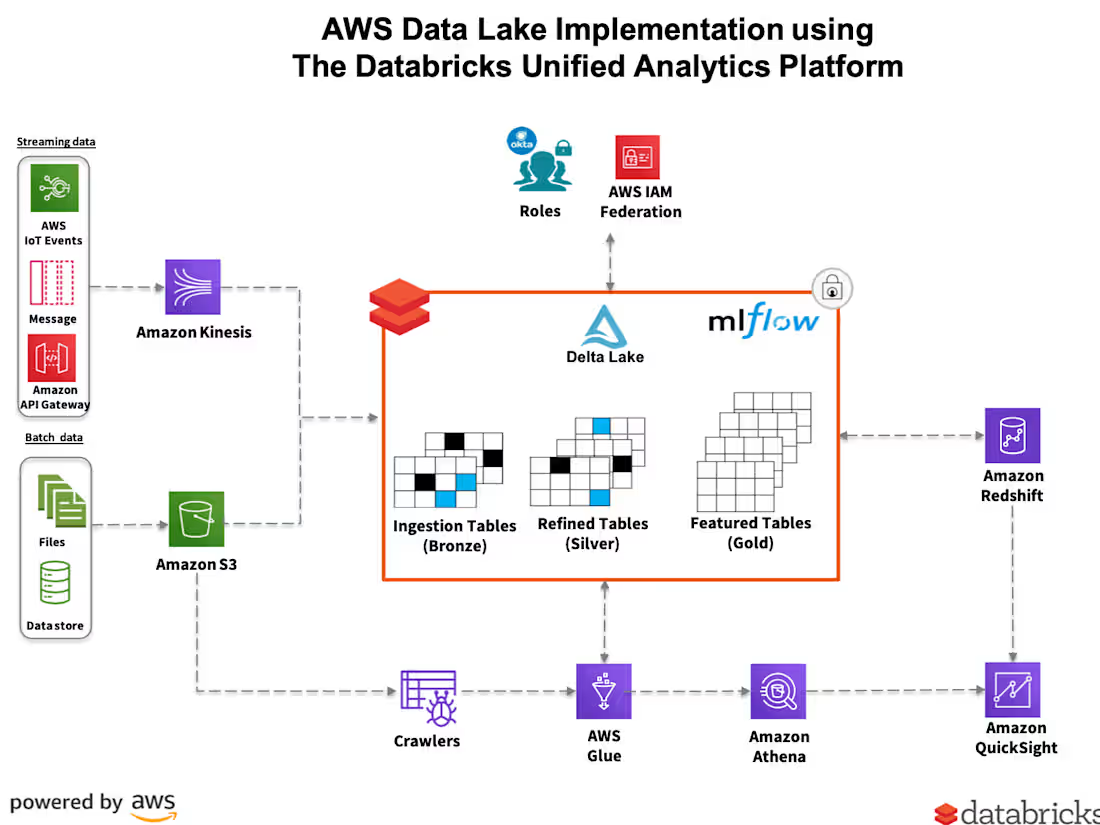

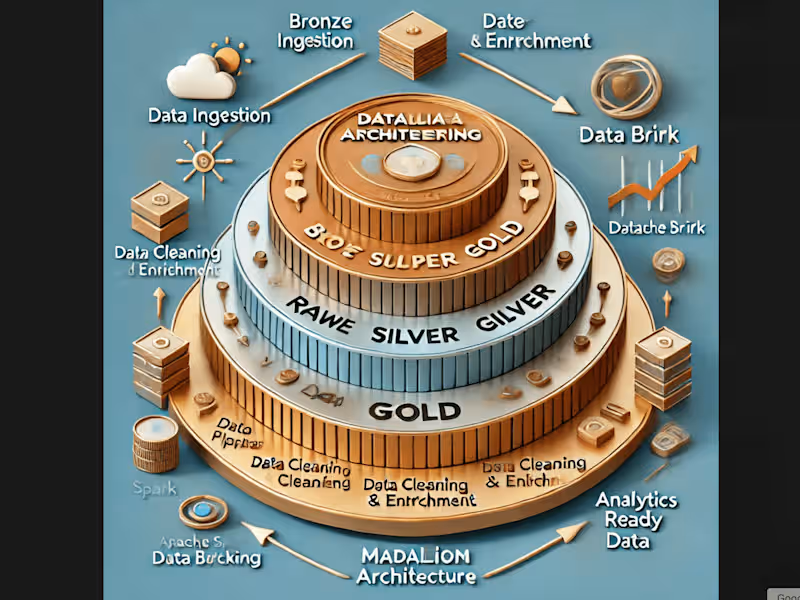

I specialize in simplifying data engineering with tools such as Apache Spark, Databricks, and modern cloud services. Using Spark's distributed processing and Databricks' collaborative workspace, I help teams process and analyze large-scale data efficiently. My particular focus is integrating these platforms with cloud providers such as AWS, Azure, or GCP to deliver scalable, cost-optimized, and end-to-end automated big data workflows.

Starting at $40/hr

Tags

Apache Spark

SQL

Consultant

Data Engineer

Software Architect

Service provided by

Pradip Bhandari, Kathmandu 44600, Nepal
