Machine Learning Inference Model Development and Deployment

Javier Aquique

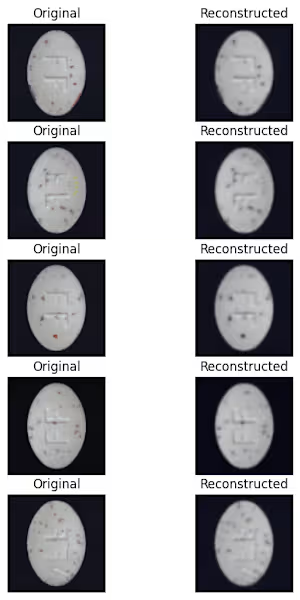

I develop and deploy high-performance machine learning inference models that turn complex data into real-time, actionable predictions. My work covers model optimization, API integration, and scalable cloud deployment, so your AI solutions run efficiently and reliably. I focus on both technical excellence and real-world usability, drawing on my engineering background to deliver models that are accurate, scalable, secure, and ready for production.

Contact for pricing

Tags

pandas

Python

PyTorch

scikit-learn

TensorFlow

AI Developer

AI Model Developer

ML Engineer

Service provided by

Javier Aquique Alcobendas, Spain
