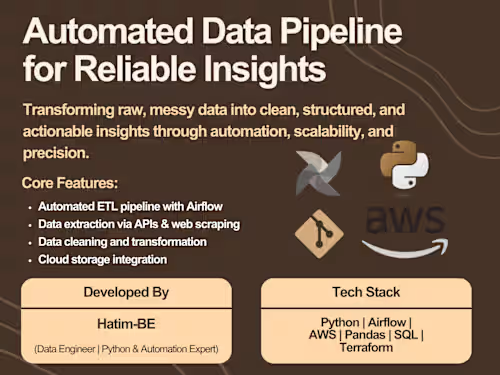

Scalable Data Pipeline for Clean and Reliable Insights

Contact for pricing

About this service

FAQs

What kinds of data can you work with?

I work with structured, semi-structured, and unstructured data from databases, APIs, CSV/Excel files, cloud storage, and web scraping.
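As an illustration of handling semi-structured data, the sketch below flattens nested JSON records (for example, an API response) into a flat CSV table. It uses only the Python standard library. The function names `flatten` and `json_to_csv` and the dotted column-naming scheme are illustrative assumptions, not part of a specific deliverable.

```python
import csv
import io
import json
from typing import Any


def flatten(record: dict[str, Any], parent_key: str = "", sep: str = ".") -> dict[str, Any]:
    """Recursively flatten nested dicts into dotted column names (hypothetical helper)."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        column = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, column, sep))
        else:
            # Lists and scalars are kept as-is; lists end up as their string form in CSV.
            flat[column] = value
    return flat


def json_to_csv(raw_json: str) -> str:
    """Convert a JSON array of (possibly nested) objects into CSV text."""
    records = [flatten(r) for r in json.loads(raw_json)]
    # Union of all columns in first-seen order, so sparse records still fit.
    columns: list[str] = []
    for r in records:
        for c in r:
            if c not in columns:
                columns.append(c)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="")  # missing fields -> ""
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


payload = '[{"id": 1, "user": {"name": "Ada", "city": "London"}}, {"id": 2, "user": {"name": "Lin"}}]'
print(json_to_csv(payload))
```

Collecting the union of columns means records with missing nested fields still produce a rectangular table, with blanks where data is absent.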

How do you ensure data quality and accuracy?

I combine automated cleaning, validation, and transformation steps with thorough testing so that results are consistent and reliable.
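A minimal sketch of an automated cleaning and validation step, assuming a hypothetical orders dataset with `order_id`, `amount`, and `order_date` columns. The rules shown (required fields, non-negative amounts, ISO dates, unique IDs) are examples only; real rules would be agreed per project. Failing rows are kept together with their reasons instead of being silently dropped, so they can be reviewed.

```python
from dataclasses import dataclass, field
from datetime import datetime

# Hypothetical required columns for an orders table.
REQUIRED = ("order_id", "amount", "order_date")


@dataclass
class ValidationResult:
    valid: list[dict] = field(default_factory=list)
    rejected: list[tuple[dict, list[str]]] = field(default_factory=list)


def clean_row(row: dict) -> dict:
    """Trim string values and turn empty strings into None."""
    return {k: (v.strip() or None) if isinstance(v, str) else v for k, v in row.items()}


def check_row(row: dict) -> list[str]:
    """Return the list of rule violations; an empty list means the row passed."""
    errors = [f"missing {col}" for col in REQUIRED if row.get(col) is None]
    if row.get("amount") is not None:
        try:
            if float(row["amount"]) < 0:
                errors.append("negative amount")
        except ValueError:
            errors.append("non-numeric amount")
    if row.get("order_date") is not None:
        try:
            datetime.strptime(row["order_date"], "%Y-%m-%d")
        except ValueError:
            errors.append("bad date format")
    return errors


def validate(rows: list[dict]) -> ValidationResult:
    """Clean each row, apply the rules, and keep the first valid row per order_id."""
    result = ValidationResult()
    seen_ids: set = set()
    for raw in rows:
        row = clean_row(raw)
        errors = check_row(row)
        if row.get("order_id") in seen_ids:
            errors.append("duplicate order_id")
        if errors:
            result.rejected.append((row, errors))
        else:
            seen_ids.add(row["order_id"])
            result.valid.append(row)
    return result
```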

What technologies and tools do you use?

I use Python, Pandas, PySpark, Airflow, PostgreSQL, AWS/GCP services, and modern ETL frameworks, chosen to match each project's efficiency and scalability requirements.

Is it possible to automate the pipeline for frequent updates?

Yes. I design pipelines that run on a schedule or in response to triggers, keeping data updated periodically or in near real time with minimal manual intervention.
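One common way to keep frequent scheduled runs cheap is incremental loading with a watermark: each run copies only the rows changed since the previous run. The sketch below assumes hypothetical `events` tables with an ISO-8601 `updated_at` text column and uses SQLite from the Python standard library as a stand-in for the real source and target. In practice a scheduler such as Airflow or cron would call `incremental_load` on an interval.

```python
import sqlite3


def incremental_load(src: sqlite3.Connection, dst: sqlite3.Connection) -> int:
    """Copy only rows newer than the stored watermark; return the number of rows copied."""
    dst.execute(
        "CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, payload TEXT, updated_at TEXT)"
    )
    dst.execute("CREATE TABLE IF NOT EXISTS watermark (name TEXT PRIMARY KEY, value TEXT)")
    row = dst.execute("SELECT value FROM watermark WHERE name = 'events'").fetchone()
    last_seen = row[0] if row else ""  # empty string sorts before any ISO timestamp
    new_rows = src.execute(
        "SELECT id, payload, updated_at FROM events WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    if new_rows:
        with dst:  # single transaction: data and watermark advance together or not at all
            dst.executemany("INSERT OR REPLACE INTO events VALUES (?, ?, ?)", new_rows)
            dst.execute(
                "INSERT OR REPLACE INTO watermark VALUES ('events', ?)",
                (new_rows[-1][2],),
            )
    return len(new_rows)
```

The strict `>` comparison would skip a row that arrives later with exactly the watermark's timestamp; production pipelines often handle this with a small overlap window or a monotonically increasing ID.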

Do you provide documentation and support?

Absolutely. Every project comes with detailed documentation, setup instructions, and support for revisions or troubleshooting.

How fast can I expect delivery?

Depending on size and complexity, standard datasets are typically delivered in 3–5 business days with clear communication at every stage.

What's included

Cleaned & Structured Dataset

- A fully processed dataset with accurate, consistent, and well-formatted data.
- Delivered in CSV, Parquet, or Excel format.
- Includes up to 2 revisions based on client feedback.

Data Pipeline Scripts

- Python scripts or Jupyter notebooks that automate data extraction, cleaning, and transformation.
- Includes documentation for setup and execution.
- Delivered in .py or .ipynb format.
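The extract, clean, and transform flow that these scripts automate could be organized roughly as in the following standard-library sketch. The input is assumed to be CSV text, and the cleaning rules (trimming values, normalizing headers, lowercasing a hypothetical `email` column, dropping exact duplicates) are placeholders for project-specific logic. Each stage is a generator, so rows stream through without loading the whole file into memory.

```python
import csv
import io
from typing import Iterable, Iterator, TextIO


def extract(csv_text: str) -> Iterator[dict[str, str]]:
    """Extract: read rows from CSV text (in practice a file, database, or API)."""
    yield from csv.DictReader(io.StringIO(csv_text))


def transform(rows: Iterable[dict[str, str]]) -> Iterator[dict[str, str]]:
    """Clean and transform: trim values, normalize headers, drop exact duplicates."""
    seen: set[tuple[tuple[str, str], ...]] = set()
    for row in rows:
        # Skip DictReader's overflow key (None); treat missing fields (None) as "".
        cleaned = {k.strip().lower(): (v or "").strip() for k, v in row.items() if k is not None}
        if "email" in cleaned:  # hypothetical column-specific rule
            cleaned["email"] = cleaned["email"].lower()
        key = tuple(sorted(cleaned.items()))
        if key not in seen:
            seen.add(key)
            yield cleaned


def load(rows: Iterable[dict[str, str]], out: TextIO) -> int:
    """Load: write cleaned rows as CSV to `out`; return the number of rows written."""
    writer = None
    count = 0
    for row in rows:
        if writer is None:
            writer = csv.DictWriter(out, fieldnames=list(row))
            writer.writeheader()
        writer.writerow(row)
        count += 1
    return count


def run_pipeline(csv_text: str, out: TextIO) -> int:
    return load(transform(extract(csv_text)), out)
```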

Project Report and Insights

- Summary report detailing data sources, cleaning steps, and key findings.
- Recommendations for further analysis or optimization.
- Delivered in PDF or Word format.

Deployment Guide / Automation Setup

- Instructions for deploying the pipeline in local or cloud environments (AWS, GCP).
- Includes environment setup, dependencies, and scheduling guidance.

Industries