Python/C++/GPT/OpenAI/RAG for AI/ML/LLM/Computer Vision

Contact for pricing

About this service

Summary

Computer Vision Software Development

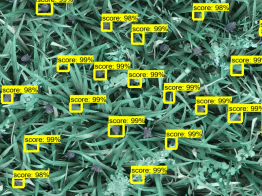

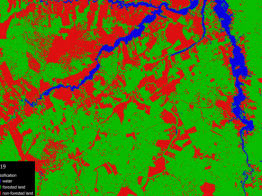

Services: Computer vision, image processing, OpenCV, and deep learning in Python.

Deployment targets: AWS, GCP, Android, iOS, Raspberry Pi, and edge devices.

Algorithms:

- Image processing
- Classification
- Object detection
- Segmentation
- Object tracking
- Keypoint detection
- Neural networks and deep learning: CNN, R-CNN, RNN, GANs, YOLOv5, ResNet

Libraries & Tools: OpenCV, NumPy, TensorFlow.js, Matplotlib, Keras

Custom Computer Vision Solutions: Design and development of custom computer vision applications for industries such as healthcare, retail, manufacturing, and security. Applications include facial recognition, object detection and classification, image segmentation, and automated inspection systems.

Image and Video Processing: Image and video processing to enhance image quality, restore degraded images, and extract meaningful information from visual data. Services include noise reduction, image filtering, edge detection, and motion analysis.

Deep Learning Model Development: Building and training deep learning models for specific tasks such as image classification, natural language processing, and predictive analytics. Models are developed from scratch or with transfer learning, which reuses pre-trained models to shorten development and deployment.

AI-Powered Automation and Optimization: AI-powered solutions that automate tasks, optimize workflows, and support decision-making. Examples include automated document processing, predictive maintenance, and resource optimization models.

Model Training: Training and fine-tuning models to improve accuracy and performance.

Technology Stack:

- Programming Languages: Python, with in-depth knowledge of the libraries and frameworks used for ML and computer vision.
- Deep Learning: TensorFlow, Keras, and PyTorch for developing and training deep learning models.
- Computer Vision: OpenCV, PIL (Python Imaging Library), and scikit-image for image processing.
- Data Science and Analysis: NumPy, Pandas, Matplotlib, and Seaborn for data manipulation and visualization.
- Development Tools: Jupyter Notebook, Google Colab, and Anaconda for development environments.
- Cloud and Deployment: AWS and Google Cloud for deploying ML models and applications, with Docker and Kubernetes for containerized deployment.

Natural Language Processing (NLP) Development

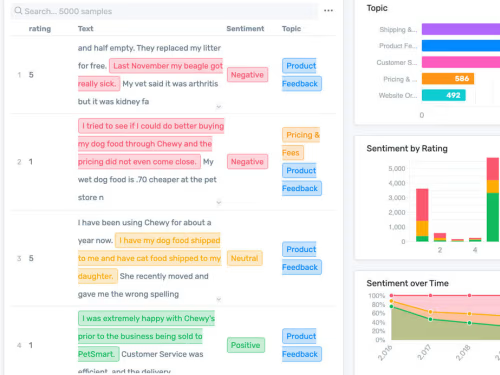

Technology Stack:

- Programming Languages: Python, chosen for its rich ecosystem of NLP libraries and frameworks.
- NLP: Natural Language Toolkit (NLTK) and spaCy for tokenization, stemming, lemmatization, and parsing.
- Deep Learning for NLP: Transformers library (providing access to models like BERT, GPT, and T5), TensorFlow, and PyTorch for building and training NLP models.
- Text Vectorization: Gensim for topic modeling and document similarity analysis, and scikit-learn for feature extraction and text classification.

NLP Services I Offer:

- Text Analysis and Sentiment Analysis: Models that analyze text from sources such as social media, reviews, and customer feedback to determine sentiment, trends, and customer opinions.
- Chatbots and Conversational Agents: Design and implementation of chatbots and conversational agents for customer support, e-commerce, and interactive user experiences.
- Named Entity Recognition (NER): Models that identify and categorize key information in text, such as names, organizations, locations, and dates.
- Text Classification and Categorization: Automated classification of text into predefined categories, useful for content filtering, organization, and recommendation systems.
- Machine Translation: Machine translation solutions that automatically translate text between languages.
- Natural Language Generation (NLG): Generating human-like text from structured data, useful for automated report generation, content creation, and summarization.
- Topic Modeling and Keyword Extraction: Extracting topics and keywords from large volumes of text to uncover hidden themes and improve content discoverability.
- Speech Recognition and Generation: Speech processing solutions that convert speech to text and text to speech, enabling voice-activated applications and services.

Development and Collaboration Tools:

- IDEs and Notebooks: Jupyter Notebook, Visual Studio Code, and PyCharm for code development and testing.
- Version Control: Git and GitHub for source code management and collaboration.
- Deployment and Cloud Platforms: Experience deploying NLP models and applications on AWS, Google Cloud, and Azure, including serverless deployments with AWS Lambda, Google Cloud Functions, and Azure Functions. Docker for containerization and Kubernetes for orchestration, for scalable deployment of NLP services.

Recommendations

(5.0)

Client • Jul 13, 2024

Recommended

Christine is the best contracted software engineer I’ve ever worked with in the last 10 years. Her effort, transparency, and efficiency is far beyond many I’ve worked with before. Would absolutely work with her again and recommend to work with her.

Skills and tools

ML Engineer

AI Model Developer

AI Developer

Python

PyTorch

scikit-learn

TensorFlow

Variational Autoencoders (VAEs)