Consultant for Data Engineering

Mujtaba Hasan

As a Data Engineer, I understand the growing need to manage and store data in order to make better decisions. Robust pipelines are necessary to make data available on time, so that it is as meaningful as possible.

Picking up a new tool is not hard for me. In my opinion, tools are just a means of reaching your goal more easily; the underlying concepts are the same across every tool.

What's included

Project Scope and Locking

A concise document that locks the scope of the project, outlining its details, requirements, audience and objectives. It will also set out the initial process that the client and I agree on, and it will serve as a reference throughout the project once it has started.

Data Gathering

This step involves close collaboration between the client and me to determine where the data will come from, how many sources are involved, and the frequency and volume of the incoming data. It may also involve some preprocessing before moving to the next step.

Designing the pipeline (ETL/ELT)

Design of a scalable, robust pipeline that extracts, processes and loads the data. The pipeline type (ETL or ELT) will be chosen after reviewing the requirements. The pipeline automates the collection and required transformation of the data.
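As a rough illustration of the extract, transform and load stages, here is a minimal Python sketch. It assumes a small hypothetical CSV export as the source and uses an in-memory SQLite database as a stand-in for the warehouse; the table, columns and cleaning rules are illustrative only, not a fixed design.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system; in practice this could be a
# file, an API response or a database query.
RAW_CSV = """order_id,amount,country
1,19.99,pk
2,5.00,us
3,,pk
"""


def extract(raw: str) -> list[dict]:
    """Extract: read rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows with a missing amount, cast types, upper-case country codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["country"].upper()))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: write the cleaned rows into the target table and return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def run_etl(raw: str, conn: sqlite3.Connection) -> int:
    """Run the three stages in order (ETL: transform before load)."""
    return load(transform(extract(raw)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(run_etl(RAW_CSV, conn))
    print(conn.execute("SELECT * FROM orders").fetchall())
```

In an ELT design the order changes: the raw rows would be loaded into the warehouse first, and the transform step would then run as SQL inside the warehouse itself (for example in BigQuery or Redshift).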

Data Warehouse/Lake

Whether to design a structured data warehouse or a data lake depends mostly on the data needs, and sometimes on the client's preference. It will be developed using technologies such as Google BigQuery, Amazon Redshift or similar tools, or built as a custom data warehouse/lake.

Data Validation and Testing

This is a very important step that covers data validation checks, such as data sanity checks, as well as testing such as A/B testing. It ensures the completeness, integrity, accuracy and consistency of the data, and it may be repeated to confirm that the data is correct.
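To show what checks for completeness, integrity, accuracy and consistency can look like in practice, here is a minimal Python sketch. The required columns, the allowed country list and the non-negative amount rule are hypothetical examples; real rules would come from the requirements agreed during scoping.

```python
from typing import Any

# Hypothetical rule set for an "orders" dataset.
REQUIRED = ["order_id", "amount", "country"]
ALLOWED_COUNTRIES = {"PK", "US"}


def validate(rows: list[dict[str, Any]]) -> list[str]:
    """Return a list of human-readable problems; an empty list means the batch passed."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Completeness: every required field is present and non-empty.
        for col in REQUIRED:
            if row.get(col) in (None, ""):
                problems.append(f"row {i}: missing {col}")
        # Integrity: the primary key must be unique within the batch.
        oid = row.get("order_id")
        if oid is not None:
            if oid in seen_ids:
                problems.append(f"row {i}: duplicate order_id {oid}")
            seen_ids.add(oid)
        # Accuracy: a simple range check on a numeric field.
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            problems.append(f"row {i}: negative amount {amount}")
        # Consistency: categorical values must come from an agreed list.
        country = row.get("country")
        if country not in (None, "") and country not in ALLOWED_COUNTRIES:
            problems.append(f"row {i}: unexpected country {country!r}")
    return problems
```

Returning a list of problems rather than stopping at the first failure makes it easy to log every issue in a batch and to re-run the same checks after fixes, which fits the repeated validation described above.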

Automation and Scheduling

This step automates all of the workflows: identifying dependent events and scheduling the processing and ingestion of the data at the right time, so that the data is readily available at the required frequency (daily, weekly, monthly), using tools such as Airflow, Amazon EventBridge and Linux cron.
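Identifying dependent events comes down to running each task only after everything it depends on has finished. Orchestrators such as Airflow model this as a DAG of tasks; the sketch below is not Airflow's API, only Python's standard-library `graphlib` (Python 3.9+) used to illustrate the idea. The task names are hypothetical, and the actual timing (daily, weekly, monthly) would be handled by the scheduler.

```python
from graphlib import TopologicalSorter

# Hypothetical daily workflow: each task maps to the set of tasks it depends on.
DAILY_TASKS = {
    "extract_sales": set(),
    "extract_customers": set(),
    "transform_orders": {"extract_sales", "extract_customers"},
    "validate_orders": {"transform_orders"},
    "load_warehouse": {"validate_orders"},
    "refresh_dashboard": {"load_warehouse"},
}


def execution_order(tasks: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its dependencies.

    Raises graphlib.CycleError (a subclass of ValueError) if the
    dependencies contain a cycle, i.e. no valid order exists.
    """
    return list(TopologicalSorter(tasks).static_order())


if __name__ == "__main__":
    for step, task in enumerate(execution_order(DAILY_TASKS), start=1):
        print(step, task)
```

The two extract tasks have no dependencies on each other, so a real orchestrator could run them in parallel; only the ordering constraints are fixed.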


Contact for pricing

Tags

AWS

Python

SQL

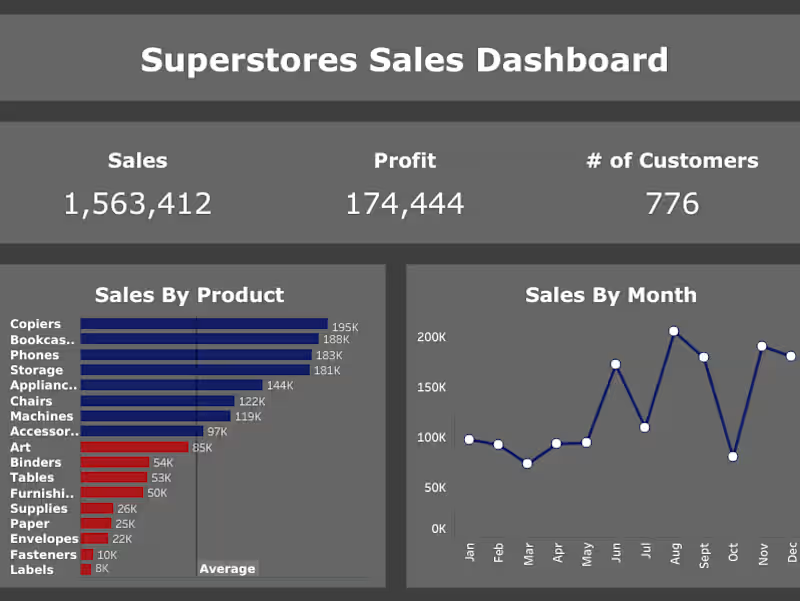

Tableau

Data Analyst

Data Engineer

Service provided by

Mujtaba Hasan, Karachi, Pakistan
